AI Agents Protocol War: Who’s Winning Between Agent2Agent and MCP?

Agent2Agent and MCP battle for dominance in AI agent protocols. Discover who’s leading the race in agent communication and innovation.

4/29/2025

artificial intelligence

16 mins

Having realized the potential of AI agents, businesses are now focused on innovations that push the boundaries of what agents can do for them. This growing interest has fueled a race among tech giants to introduce their own AI agent protocols.

By building AI agents into their solutions, industries have seen faster and more accurate responses, which supports business growth. AI agents have proven to be reliable systems that can reason, make decisions, and complete tasks with minimal human involvement. But as expectations grow, we need solutions in which multiple AI agents collaborate, and that requires a common set of rules they all follow.

Recognizing this need, researchers and developers joined forces to introduce AI agent protocols. In this article, we explain what AI agent protocols are and assess whether Agent2Agent or MCP is leading the AI agent protocol race.

What is an AI Agent Protocol?

“An AI agent protocol is a particular collection of rules, standards, or policies that helps the AI agents to communicate with each other under a multi-agent environment. By following such protocols, AI agents can effectively exchange information, assign tasks, and coordinate to ensure the smooth execution of actions. This helps in providing a strong foundation to AI agents' environments as these protocols enable smooth automated operation of these real-world complex tasks.” (D. Weyns et al., 2004)

Why are industries showing interest in AI agent protocols?

Industries are now keen to develop their own AI agent protocols to gain greater control over how their agents interact with one another. Such protocols ensure seamless integration with existing tools, strengthen security, and let teams fine-tune agent behavior for their specific use cases. Defined standards also help businesses build a developer ecosystem around their platform, strengthening adoption and user retention. In the words of Andrew Ng (Founder & CEO of DeepLearning.AI):

"To truly harness the power of AI, industries must develop their own protocols that enable seamless integration, robust security, and tailored agent behavior. This not only empowers businesses to innovate with precision but also fosters a vibrant developer ecosystem that drives adoption and long-term value."

This highlights why AI agent protocols matter: they help businesses innovate with precision and sustain their growth.

What is Model Context Protocol (MCP)?

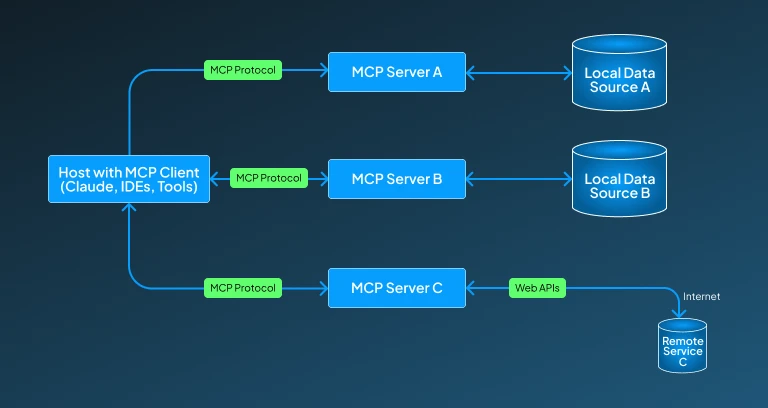

Model Context Protocol (MCP) is an open protocol built by Anthropic to help LLM-driven applications interact with external tools and data sources. It provides a standard interface through which AI agents can access and use resources such as files, APIs, and databases, extending what they can do. By structuring communication between AI models and their external environment, MCP addresses the challenge of integrating AI tools with diverse data sources and enables smarter, more scalable AI deployments.

How does MCP work?

At its core, MCP follows a client-server architecture. The AI application (the host) runs MCP clients, and each client connects to an MCP server that exposes tools, resources, or prompts from the environment. The agent sends instructions through its client; the server executes each instruction and sends back a response, which we refer to here as an observation. This interaction pattern enables more structured, reliable, and repeatable workflows.

Structured Input/Output

MCP ensures structured communication between the agent and the server: messages follow the JSON-RPC 2.0 format, and each tool describes the input it expects with a JSON Schema. In practice, the exchange has the following components:

- Action Requests: The requests or instructions the agent sends to the server, such as a call to a named tool with its arguments.

- Observations: The results the server sends back to the agent.

- Metadata: Details such as request identifiers, which match each response to its request, along with timing or status information.

Because both sides follow this structure, each can interpret the other's messages without ambiguity, which also makes debugging and logging easier.
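To make the structure concrete, here is a minimal Python sketch of one request/response pair. It follows the JSON-RPC 2.0 shape MCP uses for a `tools/call` request and its result; the `get_weather` tool and its `city` argument are hypothetical, and a real client would send these messages over a transport such as stdio or HTTP instead of just building them in memory.

```python
import json

def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Action request: a JSON-RPC 2.0 call asking an MCP server to run a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,  # metadata: lets the client match the reply to this request
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })

def make_tool_result(request_id: int, text: str) -> str:
    """Observation: the server's JSON-RPC 2.0 result for the same request id."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    })

# get_weather and its "city" argument are hypothetical.
request = json.loads(make_tool_call(1, "get_weather", {"city": "Berlin"}))
response = json.loads(make_tool_result(1, "14 degrees C, light rain"))

assert request["id"] == response["id"]  # the observation belongs to this action
print(response["result"]["content"][0]["text"])  # 14 degrees C, light rain
```

The shared `id` is what lets a client keep several requests in flight and still pair each observation with the action that produced it.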

Observation-Action Loop

An agent using MCP typically runs a continuous feedback loop that continues until the desired requirement is met or the assigned task is completed. The loop has the following core components:

- Observation:

The server captures the current state of the environment it is connected to (e.g., a UI screenshot, DOM structure, or app state).

- Action Decision:

Next, the agent (client) analyzes the observation provided by the server to decide the best next action (e.g., “click this button” or “type this text”).

- Execution:

Once the agent has decided, the server executes the action in the actual interface.

- New Observation:

As soon as the action completes, the server provides a new observation.

This feedback loop continues until the agent fulfills the assigned task or reaches an endpoint.
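The loop can be sketched in a few lines of Python. This is a toy under stated assumptions: a hypothetical `FakeFormServer` wraps a one-field form, and a rule-based `decide` function stands in for the model. It is not part of MCP itself; it only illustrates observe, decide, execute, and re-observe until the task is done or a step limit (the endpoint) is reached.

```python
from dataclasses import dataclass, field

@dataclass
class FakeFormServer:
    """Hypothetical server wrapping a UI: one text box and a submit button."""
    text: str = ""
    submitted: bool = False
    log: list = field(default_factory=list)

    def observe(self) -> dict:
        # Observation: capture the current state of the environment.
        return {"text": self.text, "submitted": self.submitted}

    def execute(self, action: dict) -> dict:
        # Execution: apply the action, then immediately return a new observation.
        self.log.append(action)
        if action["type"] == "type_text":
            self.text = action["text"]
        elif action["type"] == "click" and action["target"] == "submit":
            self.submitted = True
        return self.observe()

def decide(observation: dict, goal_text: str):
    """Action decision: a toy rule-based policy standing in for the model."""
    if observation["submitted"]:
        return None  # task complete, so the loop stops
    if observation["text"] != goal_text:
        return {"type": "type_text", "text": goal_text}
    return {"type": "click", "target": "submit"}

def run_loop(server: FakeFormServer, goal_text: str, max_steps: int = 10) -> dict:
    observation = server.observe()
    for _ in range(max_steps):  # endpoint guard so the loop always terminates
        action = decide(observation, goal_text)
        if action is None:
            break
        observation = server.execute(action)
    return observation

server = FakeFormServer()
final = run_loop(server, "Available Tuesday at 10:00")
print(final)       # {'text': 'Available Tuesday at 10:00', 'submitted': True}
print(server.log)  # the two actions taken: type the text, then click submit
```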

Self-Contained, Reproducible Exchanges

In MCP, each request and response pair is self-contained: a tool call carries all the arguments the server needs, so an individual exchange does not depend on the model remembering previous steps. The connection itself is a stateful session, since the client and server negotiate their capabilities when it opens. Because every exchange is explicit, it can be logged and replayed, which is convenient for testing, debugging, auditing, and reproducing agent behavior. Whether the same input always yields the same output, however, depends on the tool being called rather than on the protocol.
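Because each logged exchange carries everything needed to repeat it, requests can be replayed later to check that a tool still behaves the same way. The sketch below assumes a hypothetical, deterministic `convert_currency` tool and a simplified message shape; a replay check like this only makes sense for tools whose output depends solely on their arguments.

```python
import json

def convert_currency(amount: float, rate: float) -> dict:
    """Hypothetical deterministic tool: the output depends only on the arguments."""
    return {"converted": round(amount * rate, 2)}

TOOLS = {"convert_currency": convert_currency}

def handle(request: dict) -> dict:
    # The request carries everything the server needs; no earlier step is consulted.
    params = request["params"]
    result = TOOLS[params["name"]](**params["arguments"])
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

# Record each request/response pair as the agent runs.
audit_log = []
for request_id, amount in enumerate([10.0, 25.5]):
    request = {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
               "params": {"name": "convert_currency",
                          "arguments": {"amount": amount, "rate": 0.9}}}
    audit_log.append((json.dumps(request), json.dumps(handle(request))))

def replay(log: list) -> bool:
    """Re-send every logged request and check the server reproduces the logged response."""
    return all(json.dumps(handle(json.loads(req))) == resp for req, resp in log)

print(replay(audit_log))  # True
```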

MCP Use Cases

MCP is a forward-looking protocol that can provide a strong foundation for effective AI agent interactions. To help you understand its use cases, we have listed some areas where integrating MCP could prove revolutionary:

Personal AI Assistant Automation

Personal AI assistants have gained significant popularity since the recent AI boom, and AI agents that follow MCP can push daily productivity further. These agents automate everyday tasks by controlling the desktop and browser to carry out the user’s requests securely and precisely.

Example:

With AI agents like this, users can get daily tasks done, such as reading the calendar, opening Gmail, finding a meeting-related email, and replying with their availability, all from a single instruction.

Enterprise Workflow Automation

Implementing MCP agents in corporate environments can help automate workflows by performing repetitive daily tasks across platforms like Slack, Jira, Trello, Salesforce, and internal dashboards from a single user instruction. This boosts everyday productivity, reduces the chance of human error, and scales operations without requiring custom integrations.

Example:

An AI agent integrated into a corporate environment can check unresolved Jira tickets, assign a task to a team member, and post updates to a Slack channel without constant human intervention.

Browser-Based Web Automation

With MCP agents, we can browse the internet, extract relevant information, fill in forms, and click or take other actions as a human would. This lets AI interact across platforms even where no API is available.

Example:

An AI agent like this can log in to LinkedIn, find job listings that match the criteria you give it, and apply for them using the resume and cover letter template you have provided.

Software Testing & QA Automation

With MCP AI agents, we can simulate real users' interactions with an app or website to check the quality of its features and UI flows. This improves the quality assurance process by reducing repetitive manual testing while testing more precisely, which ultimately accelerates releases.

Example:

An AI agent deployed for quality assurance can test each feature within the user flow, such as the sign-up form and button functionality, try edge cases, log bugs, and report them to developers so they can make the required changes.

Customer Support Agent Automation

AI agents use MCP to connect to the relevant CRM systems or support portals, where they can resolve the tickets assigned to them and answer users' queries. This enhances the overall customer support experience by delivering prompt responses with minimal human intervention.

Example:

An AI agent can act as a support assistant: reading the problem described in a ticket, checking an order's status, finding the reason for a delay, and handling return and refund queries for whatever business it is deployed in.

Data Entry and Report Generation

MCP AI agents can also gather data from various sources, such as platforms, spreadsheets, and dashboards, by interacting with them. This automates the labor-intensive work of collecting essential data, curating it, and turning it into insightful reports.

Example:

An AI agent can extract yesterday’s sales data from multiple e-commerce platforms and prepare a Google Sheet with a detailed report based on its analysis.

What is Google's Agent2Agent (A2A)?

Google's Agent2Agent, or A2A, is an AI agent communication protocol introduced to enable seamless interaction between AI agents across services, devices, and platforms. It is part of Google's broader push toward an AI agent ecosystem that assists users by autonomously executing multi-step tasks involving various applications, websites, or platforms. Google has also described A2A as complementary to MCP: MCP connects an agent to tools and data, while A2A lets agents communicate with each other.

How Does Agent2Agent Work?

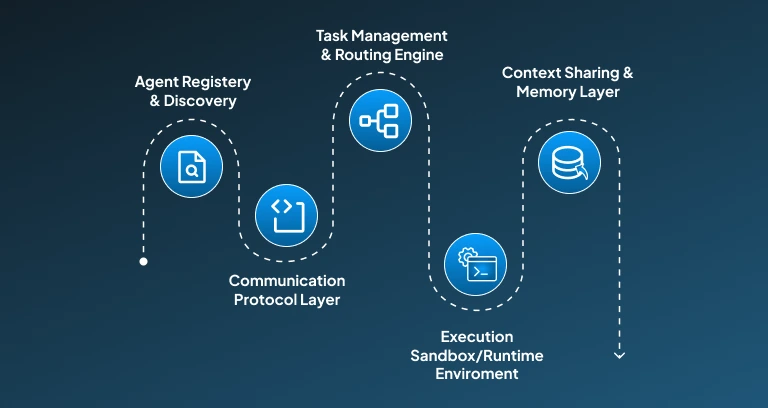

Beyond convenience, the Agent2Agent protocol rests on an architecture designed to ensure instructions are executed precisely. Below are the core elements that enable the A2A protocol to deliver smooth communication:

Agent Registry & Discovery Layer

At the core of the A2A architecture lies a discovery mechanism that lets agents advertise their presence and abilities. Each agent registers itself in a shared registry, which may be centralized or decentralized, along with metadata describing its functions, supported tools, and APIs. In A2A, this metadata takes the form of an "Agent Card", a JSON document the agent publishes about itself.

This layer acts as a service directory that helps agents find others that can handle specific tasks. It works much like the Domain Name System or the service discovery tools used with microservices, giving agents visibility into, and connections with, the rest of the ecosystem.
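A minimal sketch of a registry-based discovery layer in Python. In A2A, agents describe themselves with a JSON "Agent Card"; the `AgentCard` dataclass below keeps only a few illustrative fields, and the in-memory `AgentRegistry`, agent names, and URLs are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class AgentCard:
    """Self-description an agent publishes; a small, illustrative subset of fields."""
    name: str
    url: str           # where other agents can reach this agent
    skills: list[str]  # what it can do

class AgentRegistry:
    """Hypothetical in-memory, centralized registry of agent cards."""

    def __init__(self) -> None:
        self._cards: dict[str, AgentCard] = {}

    def register(self, card: AgentCard) -> None:
        # Registering again under the same name replaces the old card.
        self._cards[card.name] = card

    def find_by_skill(self, skill: str) -> list[AgentCard]:
        # Discovery: return every registered agent that advertises the skill.
        return [card for card in self._cards.values() if skill in card.skills]

registry = AgentRegistry()
registry.register(AgentCard("flight-agent", "https://flights.example.com/a2a", ["book_flight"]))
registry.register(AgentCard("hotel-agent", "https://hotels.example.com/a2a",
                            ["find_hotel", "book_hotel"]))

print([card.name for card in registry.find_by_skill("find_hotel")])  # ['hotel-agent']
```

A decentralized variant would replace the registry with fetching each agent's card directly from a known URL, but the lookup-by-capability idea stays the same.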

Communication Protocol Layer

Once agents have discovered one another, they can interact. This is handled by the protocol layer, which formats and transports messages between agents in a structured format. In A2A, these are JSON-RPC 2.0 messages sent over HTTP(S), with Server-Sent Events (SSE) available for streaming updates; other agent systems use transports such as gRPC or WebSocket.

Each message includes a task description, context, input data, and the expected response. To keep these messages secure and private, authentication tokens and digital signatures are built into the communication pipeline as a security layer. Architecturally, this layer closely resembles a messaging bus that enables event-driven interactions between agents.
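The sketch below builds one such message and protects it with a signature. The envelope is modeled on an early A2A draft (a JSON-RPC `tasks/send` call whose message is made of typed parts); method and field names have changed between spec versions, so treat them as assumptions. An HMAC with a shared secret stands in for a true digital signature, and the header names and token are placeholders.

```python
import hashlib
import hmac
import json

SHARED_SECRET = b"demo-secret"  # placeholder key; real systems need proper key management

def build_task_message(task_id: str, description: str, context: dict,
                       input_data: dict, expected: str) -> dict:
    """Wrap a task for another agent in a JSON-RPC envelope (names modeled on an early A2A draft)."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tasks/send",  # assumption: later spec versions use different method names
        "params": {
            "id": task_id,
            "message": {
                "role": "user",
                "parts": [
                    {"type": "text", "text": description},
                    {"type": "data", "data": {"context": context, "input": input_data,
                                              "expected_response": expected}},
                ],
            },
        },
    }

def sign(body: bytes) -> str:
    # HMAC-SHA256 stands in for a digital signature in this sketch.
    return hmac.new(SHARED_SECRET, body, hashlib.sha256).hexdigest()

def verify(body: bytes, signature: str) -> bool:
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(sign(body), signature)

message = build_task_message("task-42", "Find a hotel near the venue",
                             {"city": "Lisbon"}, {"nights": 3}, "three hotel options")
body = json.dumps(message).encode("utf-8")
headers = {"Authorization": "Bearer <token>", "X-Signature": sign(body)}  # hypothetical headers

print(verify(body, headers["X-Signature"]))         # True
print(verify(body + b" ", headers["X-Signature"]))  # False: the body was altered
```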

Task Management & Routing Engine

One of the core elements of the A2A protocol is distributing tasks among agents. When a request arrives, an agent checks whether it can execute it. If it cannot, it forwards the task to another agent better suited to perform it. Tasks can also be broken into smaller subtasks and assigned to other agents in a chained sequence.

A built-in task management system handles this checking and decomposition, providing assignment logic, load distribution, and fallback handling. It resembles a lightweight workflow engine that each agent uses to handle and collaborate on tasks dynamically.
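A toy routing engine in Python illustrates these steps: decompose a request, send each subtask to a capable agent, spread the load, and fall back when no agent qualifies. The `PLAYBOOKS` table, agent names, and fallback message are hypothetical; a real system would likely let a model plan the decomposition.

```python
from dataclasses import dataclass

@dataclass
class Agent:
    name: str
    skills: set[str]
    available: bool = True
    load: int = 0  # number of subtasks currently assigned

# Hypothetical decomposition rules; a real system might ask a model to plan instead.
PLAYBOOKS = {"plan_trip": ["book_flight", "find_hotel", "plan_itinerary"]}

def decompose(task: str) -> list[str]:
    """Split a task into subtasks, or keep it whole if no rule applies."""
    return PLAYBOOKS.get(task, [task])

def route(subtask: str, agents: list[Agent]) -> str:
    """Send a subtask to the least-loaded available agent that has the skill."""
    capable = [a for a in agents if subtask in a.skills and a.available]
    if not capable:
        return "fallback: escalate to a human"  # fallback handling
    chosen = min(capable, key=lambda a: a.load)  # simple load distribution
    chosen.load += 1
    return chosen.name

def handle_request(task: str, agents: list[Agent]) -> dict[str, str]:
    return {subtask: route(subtask, agents) for subtask in decompose(task)}

agents = [
    Agent("flight-agent", {"book_flight"}),
    Agent("hotel-agent-a", {"find_hotel"}, load=2),
    Agent("hotel-agent-b", {"find_hotel"}),
    Agent("planner-agent", {"plan_itinerary"}, available=False),
]
assignment = handle_request("plan_trip", agents)
print(assignment)
```

Here the hotel subtask goes to `hotel-agent-b` because it is less loaded, and the itinerary subtask falls back because its only capable agent is unavailable.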

Execution Sandbox / Runtime Environment

All agents in the A2A environment run within their own execution sandbox or runtime environment. This isolated setup lets agents run their tools or scripts safely without interfering with one another. Depending on their capabilities, some agents might call APIs, while others access databases, scrape the web, or generate code.

Execution can happen locally on a device or in cloud infrastructure. The architecture is comparable to containerized environments or modular plugin systems, in which each agent has access to specific tools and resources as part of its runtime stack.
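The "specific tools and resources" idea can be sketched as a per-agent allowlist. This hypothetical `AgentRuntime` covers only the access-control side of a runtime; real isolation (containers, separate processes, network limits) is beyond what a few lines of Python can show.

```python
from typing import Callable

class ToolNotPermitted(Exception):
    """Raised when an agent calls a tool outside its allowlist."""

class AgentRuntime:
    """Hypothetical per-agent runtime: the agent can call only the tools it was granted."""

    def __init__(self, agent_name: str, granted: dict[str, Callable]) -> None:
        self.agent_name = agent_name
        self._tools = dict(granted)  # private copy, so later changes elsewhere can't widen access

    def call(self, tool_name: str, *args):
        if tool_name not in self._tools:
            raise ToolNotPermitted(f"{self.agent_name} may not use {tool_name}")
        return self._tools[tool_name](*args)

def word_count(text: str) -> int:
    return len(text.split())

summarizer = AgentRuntime("summarizer", {"word_count": word_count})
print(summarizer.call("word_count", "agents share a runtime stack"))  # 5
try:
    summarizer.call("delete_records", "customers")  # never granted to this agent
except ToolNotPermitted as err:
    print(err)  # summarizer may not use delete_records
```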

Some ecosystems also implement reputation systems or trust scores to determine how much confidence to place in an agent’s output. These approaches mirror zero-trust security architectures and OAuth-style authorization protocols, providing a strong defense against malicious agents.
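One simple way to keep a trust score is an exponential moving average of verified outcomes, sketched below. The `TrustLedger` class, the smoothing factor `alpha`, and the 0.6 threshold are illustrative assumptions, not values from any particular A2A implementation; starting unknown agents below the threshold gives the zero-trust flavor described above.

```python
class TrustLedger:
    """Hypothetical reputation tracker using an exponential moving average of outcomes."""

    def __init__(self, alpha: float = 0.3, initial: float = 0.5) -> None:
        self.alpha = alpha      # weight given to the most recent outcome
        self.initial = initial  # neutral score for agents with no history
        self.scores: dict[str, float] = {}

    def record(self, agent: str, output_was_correct: bool) -> float:
        previous = self.scores.get(agent, self.initial)
        outcome = 1.0 if output_was_correct else 0.0
        self.scores[agent] = (1 - self.alpha) * previous + self.alpha * outcome
        return self.scores[agent]

    def trusted(self, agent: str, threshold: float = 0.6) -> bool:
        # Unknown agents start below the threshold and must earn trust.
        return self.scores.get(agent, self.initial) >= threshold

ledger = TrustLedger()
for correct in [True, True, True]:
    ledger.record("pricing-agent", correct)  # 0.65, 0.755, then about 0.83
ledger.record("scraper-agent", False)        # 0.35

print(ledger.trusted("pricing-agent"), ledger.trusted("scraper-agent"), ledger.trusted("new-agent"))
# True False False
```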

Context Sharing & Memory Layer

Agents collaborate more effectively when they understand the task and the user's intent behind it. They achieve this by sharing context or memory, which lets each agent pass relevant information to the others. This context might include user preferences, task history, or partial results from other agents.

Some systems use a shared memory structure or a blackboard-style architecture, in which all participating agents can read from and write to a common knowledge base. This ensures effective coordination and keeps every agent aligned with the broader goal throughout the task chain.
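A blackboard can be as simple as a shared key-value store that records who wrote each entry. The minimal Python sketch below is hypothetical (class name, keys, and agents are made up) and ignores concurrency and access control, which a real shared memory would need.

```python
from typing import Any

class Blackboard:
    """Minimal shared knowledge base that every agent can read and write."""

    def __init__(self) -> None:
        self._entries: dict[str, Any] = {}
        self._writers: dict[str, list[str]] = {}  # who wrote each key, for traceability

    def write(self, agent: str, key: str, value: Any) -> None:
        self._entries[key] = value
        self._writers.setdefault(key, []).append(agent)

    def read(self, key: str, default: Any = None) -> Any:
        return self._entries.get(key, default)

    def writers(self, key: str) -> list[str]:
        return list(self._writers.get(key, []))

board = Blackboard()
board.write("user-agent", "preferences", {"budget": "mid-range", "diet": "vegetarian"})
board.write("flight-agent", "arrival", "18:30")  # partial result from another agent

# A later agent builds on shared context instead of asking the user again.
prefs = board.read("preferences")
board.write("restaurant-agent", "dinner_plan",
            f"{prefs['diet']} restaurant, {prefs['budget']}, after {board.read('arrival')}")
print(board.read("dinner_plan"))  # vegetarian restaurant, mid-range, after 18:30
```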

Agent2Agent (A2A) Use Cases

Google's Agent2Agent protocol could be transformative when applied in industry. To help you build a better understanding, we have listed a few use cases that could boost productivity and improve how these sectors function:

Multi-Agent Task Automation

Implementing the A2A protocol can improve multi-agent task automation, since it helps break complex tasks into smaller, manageable ones, with each specialized agent responsible for a particular part.

Example:

Suppose you ask a group of agents to plan your trip. Together they handle everything you need, from booking a flight and finding hotels and restaurants to planning a daily itinerary, acting as a single platform from planning to execution.

Enterprise Workflow Orchestration

When a protocol like A2A is used in settings with multi-step workflows that require collaboration across departments, it can manage the workload effectively by carrying out all the tasks from a single user instruction.

Example:

If such a protocol is implemented in an HR system, one agent might screen resumes, another might schedule interviews, and a third might handle onboarding documentation. These agents collaborate by exchanging information to keep the workflow organized.

Customer Support Systems

Implementing the A2A protocol in customer support can provide a more personalized customer experience. Because the protocol coordinates multiple agents, each one can be assigned a particular task. Dividing the work according to each agent's expertise helps every task get executed accurately and precisely, which improves the user experience.

Example:

If a multi-agent solution handles an organization's refund requests, one agent might validate the request, another might process the refund, and a third might update the customer record and send follow-up emails. With A2A, these agents work in sync, resulting in more responsive customer support.

Developer Tools & Software Automation

By using the A2A protocol for agent interaction, software developers can streamline their workflow, since such agents can carry out complex parts of the software development life cycle from a single instruction.

Example:

In a software development workflow, one agent might generate code, another might run the tests, a third might handle deployment, and a fourth might identify production errors. Using this protocol, they can complete a complex task with minimal human effort.

A2A vs MCP: Who is Leading?

To help you decide which of Google’s A2A and Anthropic’s MCP better suits your particular use case, we compare them in the table below:

| Category | A2A | MCP |
|---|---|---|
| Communication Style | Structured task messages between peer agents, often carrying natural-language content | Structured request/response between an AI application and tool or data servers |
| Architecture Type | Peer agents that discover one another and delegate tasks | Client-server: the host application connects to MCP servers |
| Ease of Integration | Experimental, less tool-friendly today | Standard interface for connecting tools and apps |
| Creativity & Reasoning | Strong emergent behaviors and dynamic debate | More linear, deterministic output |
| Task Complexity Handling | Great for complex, multi-perspective tasks | Great for structured, procedural workflows |
| Scalability | Can get noisy with more agents | Scales by adding modular servers |
| Memory Persistence | Temporary, session-based memory | Long-lived, updatable memory structures |
| Current Adoption | Primarily in research demos | Integrated in Claude apps, CRM bots, OSS tools |
| Flexibility in Orchestration | Natural, human-like orchestration | Clear, rules-based orchestration |
| Industry Readiness | Early stage; largely experimental | Actively deployed in business products |

A2A vs MCP

While Google's A2A excels at creative reasoning and flexible agent collaboration, it is still largely experimental. Anthropic's MCP, on the other hand, offers a scalable architecture with persistent memory and proven real-world adoption, which positions it better for deployment and integration to meet industry needs.

The Future Efforts of A2A and MCP

A2A will likely advance toward enhanced real-time coordination, creative collaboration, and agent autonomy in dynamic environments, while MCP is likely to focus on long-term memory, context reasoning, and integration across the ecosystem. Both are working to empower next-generation AI agents that can operate independently, but the real challenge for both is ensuring these agents are deployed safely and ethically, in line with established standards.

Is LangGraph a similar approach?

LangGraph shares some similarities with MCP and A2A, as it enables multi-agent collaboration, memory sharing, and integration with other tools. Like MCP, it lets AI agents share context, and like A2A, it supports coordinated workflows between agents. It also differs from both: LangGraph is a framework rather than a protocol, and it arranges agents and steps in a developer-defined graph of nodes and edges, whereas MCP and A2A support dynamic, adaptive communication across broader ecosystems and tasks.

Conclusion

The rise of agent protocols marks a major turning point, from isolated AI models to ecosystems of intelligent, collaborative agents. Whether it’s Google’s A2A with its task-driven orchestration or Anthropic’s MCP with its shared context and memory, both are pushing the boundaries of what autonomous systems can do.

The real answer to who is leading the race might not be “either/or” but “both,” each leading in a different arena. MCP is already making strides in real-world tool integration and task automation, while A2A is shaping the future of multi-agent collaboration. It will be exciting to see how these protocols shape the future of AI agents in industry applications.

Do you want to know how an AI agent-based solution can improve your business operations? Hurry up! Discuss it with our experts at Centrox AI by booking your first free discussion session.

Muhammad Harris

Muhammad Harris, CTO of Centrox AI, is a visionary leader in AI and ML with 25+ impactful solutions across health, finance, computer vision, and more. Committed to ethical and safe AI, he drives innovation by optimizing technologies for quality.
