Evaluating GPT-4.1 for Chatbot Development: How It Enhances Conversational AI

Evaluating GPT-4.1 against key benchmarks for chatbot development: how the model works and how it improves chatbot conversations.

6/27/2025

artificial intelligence

Since OpenAI introduced its GPT models in 2018, we have seen rapid advances in technology, especially in artificial intelligence. These innovations not only add convenience but also reduce the time, resources, and effort spent on everyday tasks, especially conversational support.

With the introduction of GPT models, we have seen them reshape how people get their tasks done and which tools they prefer to use. GPT-4.1 was released on April 14, 2025. It gained attention for its multimodal input (text and images), improved accuracy, creativity, and reasoning, and its support for a significantly longer context window of up to 1 million tokens.

Let's take a deeper look at the GPT-4.1 model: how it works, how it differs from earlier models, and how well it suits chatbot development. This will help you decide how to put GPT-4.1 to work in your own chatbot application.

What is GPT-4.1?

GPT stands for Generative Pre-trained Transformer, a family of artificial intelligence models from OpenAI that can understand, process, and generate human-like text based on the input they are given.

These generative models are trained to predict the next token (a word or word fragment) in a sequence, which enables them to write comprehensive essays, answer questions, generate code, translate languages, and more.
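The predict-append-repeat loop described above can be illustrated with a deliberately tiny, hypothetical stand-in: a bigram counter that guesses each next word only from the previous word. GPT-4.1 uses a large neural network over subword tokens and a long context instead; only the generation loop itself carries over from this sketch.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, max_words: int = 5) -> list[str]:
    """Repeatedly append the most likely next word (greedy next-word prediction)."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation: stop generating
        next_word, _ = followers.most_common(1)[0]
        output.append(next_word)
    return output


corpus = [
    "the chatbot answers the question",
    "the chatbot answers quickly",
    "the user asks the chatbot",
]
model = train_bigram(corpus)
print(" ".join(generate(model, "user", max_words=4)))  # user asks the chatbot answers
```

Each step conditions only on what has been generated so far, which is the same autoregressive pattern a GPT model follows, just with a far richer predictor.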

GPT-4.1 is one of the most advanced models OpenAI has released, and it builds on the strengths of its predecessors, GPT-3.5 and GPT-4. It was introduced to improve instruction following, code generation accuracy, long-context handling, and reasoning and problem-solving abilities. GPT-4.1 also stands out for readable, consistent output, which makes it a strong choice for developers and enterprise applications.

How is GPT-4.1 Different from Others?

GPT-4.1 has delivered strong results since its release, showing a clear ability to streamline workflows. To help you compare it with earlier GPT models, the table below highlights the parameters that set them apart.

| Feature | GPT-3 (2020) | GPT-3.5 (2022) | GPT-4 (2023) | GPT-4.1 (2025) |
|---|---|---|---|---|
| Multimodal Input | Text-only | Text-only | Text + image input (GPT-4 with Vision) | Text + image input |
| Context Length | 2K–4K tokens | Up to 4K tokens | 8K or 32K tokens (standard) / 128K tokens (GPT-4 Turbo) | Up to 1 million tokens |
| Reasoning Ability | Basic logic, struggles with complex reasoning | Better performance on logic and math | Advanced reasoning, math, and understanding of complex instructions | Advanced, more accurate reasoning |
| Coding Skills | Basic code generation | Much better at coding, used in early GitHub Copilot | Even stronger coding, better understanding of multi-step code problems | Excellent, high accuracy in generation |
| Creativity | Basic storytelling and writing | Slightly more coherent writing | More creative, nuanced, and expressive writing capabilities | Balanced and context-aware creativity |
| Factual Accuracy | High hallucination rate | Reduced hallucinations | Much more accurate, grounded, and factual | Improved reliability and correctness |
| Memory | No memory | No persistent memory | ChatGPT with GPT-4 can use memory (remembers facts about you) | Stateless in the API (history is resent each turn); memory is a ChatGPT feature |
| Image Understanding | Not supported | Not supported | Can interpret charts, screenshots, diagrams, and photos (GPT-4 Vision) | Supported (image input, text output) |
| System Prompting | Not supported | Partially via prompt engineering | Fully supports system-level behavior customization | More flexible system instructions |
| Availability | API and Playground | API, ChatGPT Free | API, ChatGPT Plus ($20/month), integrated into Microsoft tools | API; also offered in ChatGPT on paid plans |
| Tool Use Support | No tool support | No tool support | Supports tools: Browsing, Code Interpreter, DALL·E, etc. (in ChatGPT Plus) | Yes (function calling via the API, depending on setup) |
| Use in Products | Playground, early API apps | ChatGPT (Free version), Copilot | ChatGPT Plus (paid), Bing Chat, Copilot, and many advanced AI applications | Used in developer tools, APIs |

Differences between GPT model generations

How does GPT-4.1 work for Chatbot Development?

GPT-4.1 generates well-reasoned answers and high-quality code for specific requirements. Its benchmark results indicate that it produces precise, consistent, and contextually accurate responses, which matters most in use cases such as chatbot development. OpenAI has not published GPT-4.1's internal architecture, so the layer-by-layer breakdown below describes the typical decoder-only transformer pipeline that GPT models are generally understood to follow, as it applies to chatbot development:

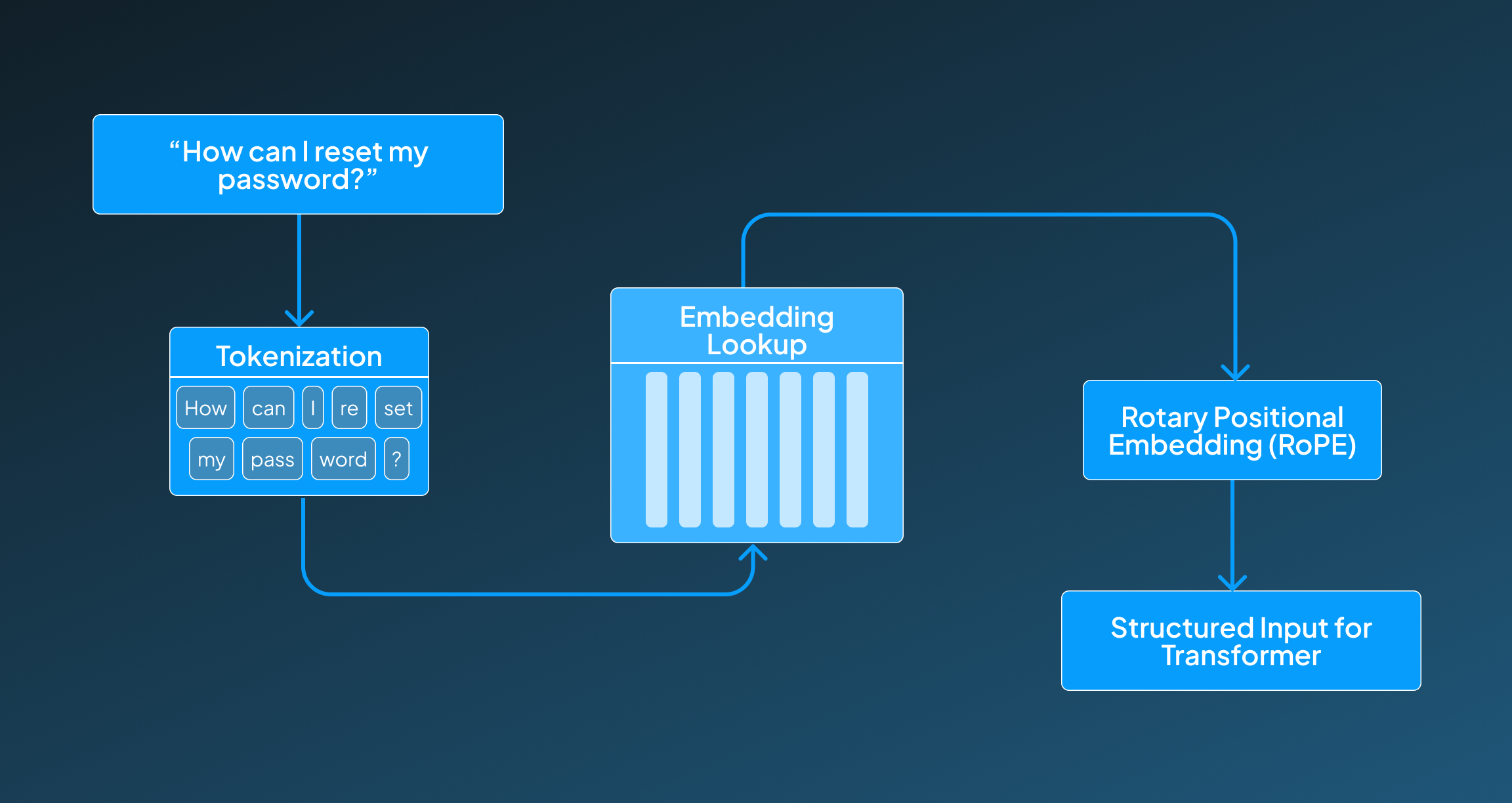

Text Embedding Layer

The text embedding layer of GPT-4.1 converts raw text into vector embeddings that the transformer can process. A vector embedding represents each piece of text as a list of numbers that a computer can work with; words with related meanings end up with similar vectors, which lets the model capture relationships between words when it composes a response.

How it works:

The text embedding layer works in three steps:

- Tokenization: The input text is split into subword tokens using Byte Pair Encoding (BPE).

- Embedding Lookup: Then, each of these individual tokens is mapped into a learned embedding vector.

- Positional Encoding: Finally, position information is added so the model preserves word order and contextual flow. Many modern LLMs use Rotary Positional Embeddings (RoPE) for this; OpenAI has not confirmed which scheme GPT-4.1 uses.
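The tokenization and embedding lookup steps above can be sketched with a toy BPE learner. This is a minimal illustration, not OpenAI's tokenizer: production GPT tokenizers use byte-level BPE with a large pretrained vocabulary, and their embedding tables are learned during training. Here the corpus, the number of merges, and the 4-dimensional random table (standing in for untrained weights) are all hypothetical.

```python
import random
from collections import Counter


def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Fuse every adjacent occurrence of `pair` into a single symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe_merges(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn merge rules by repeatedly fusing the most frequent adjacent pair."""
    vocab = Counter(tuple(word) for word in words)  # words start as character tuples
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Apply the learned merges, in order, to split a word into subword tokens."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


def embed(tokens: list[str], vocab: dict[str, int], table: list[list[float]]) -> list[list[float]]:
    """Embedding lookup: token -> integer id -> row of the embedding table."""
    return [table[vocab[tok]] for tok in tokens]


merges = learn_bpe_merges(["low", "low", "lower", "lowest"], num_merges=3)
tokens = tokenize("lowest", merges)
print(merges)  # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
print(tokens)  # ['lowe', 's', 't']

# Hypothetical 4-dimensional embedding table with random values, as before training.
rng = random.Random(0)
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
table = [[rng.gauss(0.0, 0.02) for _ in range(4)] for _ in vocab]
vectors = embed(tokens, vocab, table)
```

Frequent character sequences such as "low" become single tokens, while rarer endings stay split into smaller pieces, which is why BPE handles unseen words gracefully.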

Together, these steps give the input a structure that the transformer layers can later reason over.
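RoPE, named in the positional-encoding step above, can be sketched in a few lines. This assumes the standard formulation from the RoFormer paper (base 10000, rotating consecutive pairs of dimensions); whether GPT-4.1 uses this exact scheme is an assumption. The vectors `q` and `k` are hypothetical. The check at the end shows what makes RoPE useful: the score between a rotated query and key depends only on how far apart they are, not on their absolute positions.

```python
import math


def apply_rope(vec: list[float], position: int, base: float = 10000.0) -> list[float]:
    """Rotate each (even, odd) pair of dimensions by an angle proportional to `position`."""
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even embedding dimension")
    out = list(vec)
    for i in range(d // 2):
        theta = position * base ** (-2 * i / d)  # lower-index pairs rotate faster
        x, y = vec[2 * i], vec[2 * i + 1]
        out[2 * i] = x * math.cos(theta) - y * math.sin(theta)
        out[2 * i + 1] = x * math.sin(theta) + y * math.cos(theta)
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


q = [1.0, 0.0, 0.5, -0.5]
k = [0.3, 0.7, -1.0, 0.2]
# The same distance (2 positions) at different absolute offsets yields the same score.
print(round(dot(apply_rope(q, 5), apply_rope(k, 3)), 6))
print(round(dot(apply_rope(q, 12), apply_rope(k, 10)), 6))
```

Because rotations preserve vector length, RoPE changes only the angle between queries and keys, leaving the magnitude of each token's representation untouched.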

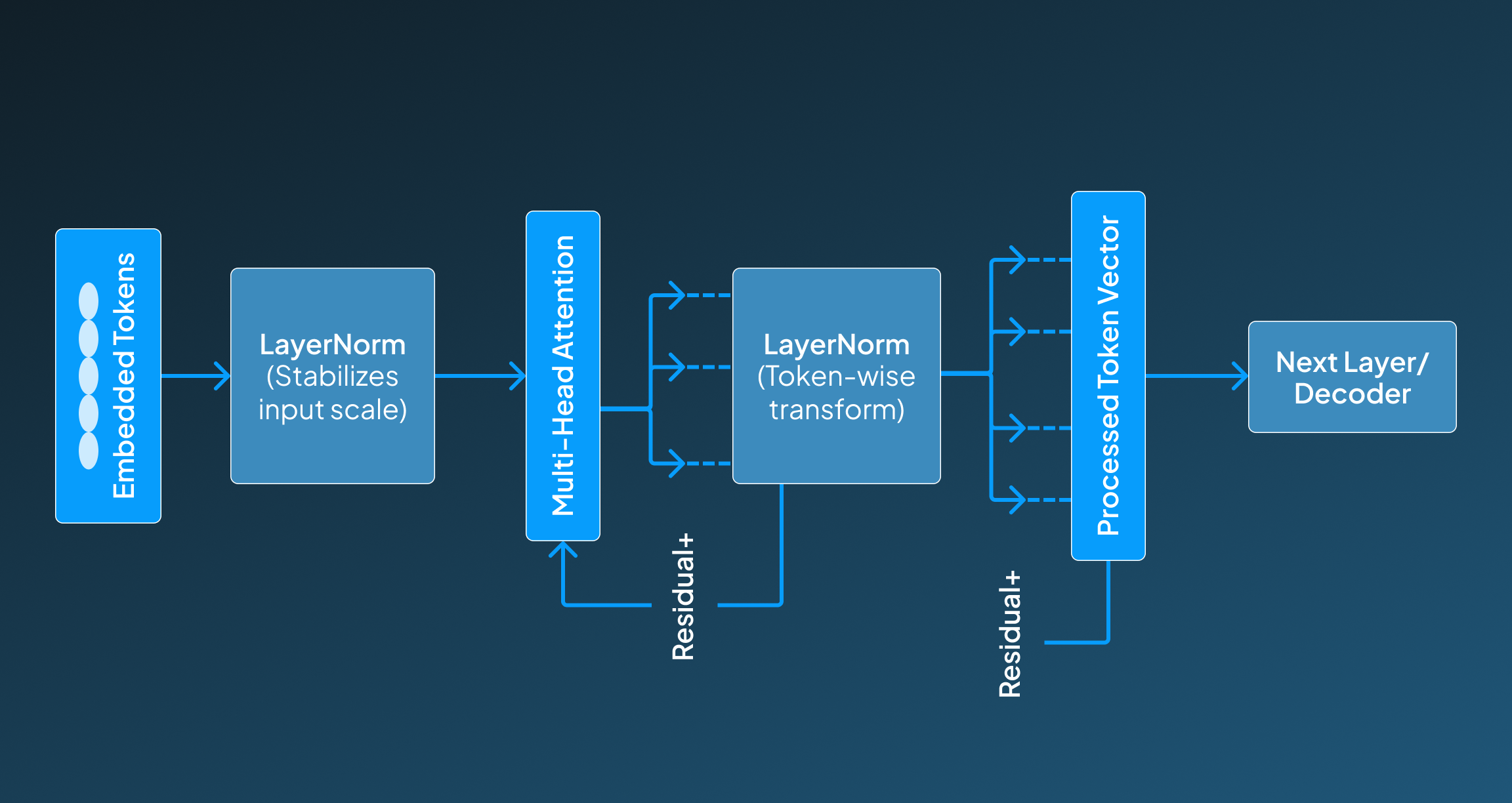

Transformer Backbone (Core Language Model)

The transformer layers are GPT-4.1's core engine: they track the dialogue flow, build contextual understanding, and predict the next token. This lets the model produce responses that are well reasoned and that follow the conversation's flow and language, which makes it reliable.

How it works:

- Layer Normalization (LayerNorm): The model uses a decoder-only architecture, which predicts each next token from the preceding context. Inside each transformer block, the input is typically normalized before it enters the attention and feed-forward sublayers, which keeps the signal stable through the deep network and makes training more consistent.

- Multi-Head Attention: This step lets the model attend to different parts of the input simultaneously, capturing several kinds of relationships between tokens in parallel.

- Feed-Forward Layers: A feed-forward layer follows each attention step and refines every token's representation with a learned transformation, helping the model reason better.
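The three components above can be combined into one decoder block. The sketch below is a generic pre-norm block in the style of GPT-2 (LayerNorm, causal multi-head attention, residual add, then LayerNorm, GELU feed-forward, residual add); GPT-4.1's actual configuration is not public, so the sizes, random weights, and the omission of LayerNorm's learned gain and bias are all simplifying assumptions. It uses plain Python lists so it runs without extra packages.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum, used for the residual connections."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def layer_norm(row: list[float], eps: float = 1e-5) -> list[float]:
    """Normalize one token vector to zero mean and unit variance (gain/bias omitted)."""
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + eps) for v in row]


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gelu(x: float) -> float:
    """Tanh approximation of GELU, the activation used in GPT-2-style feed-forward layers."""
    return 0.5 * x * (1 + math.tanh(math.sqrt(2 / math.pi) * (x + 0.044715 * x ** 3)))


def causal_self_attention(x: Matrix, p: dict, num_heads: int) -> Matrix:
    q, k, v = matmul(x, p["wq"]), matmul(x, p["wk"]), matmul(x, p["wv"])
    d_model = len(x[0])
    head_dim = d_model // num_heads
    out = [[0.0] * d_model for _ in x]
    for h in range(num_heads):  # each head works on its own slice of the features
        lo, hi = h * head_dim, (h + 1) * head_dim
        for t in range(len(x)):
            # Causal mask: token t attends only to tokens 0..t (no peeking ahead).
            scores = [
                sum(q[t][j] * k[s][j] for j in range(lo, hi)) / math.sqrt(head_dim)
                for s in range(t + 1)
            ]
            weights = softmax(scores)
            for j in range(lo, hi):
                out[t][j] = sum(w * v[s][j] for s, w in enumerate(weights))
    return matmul(out, p["wo"])  # output projection mixes the heads back together


def feed_forward(x: Matrix, p: dict) -> Matrix:
    hidden = [[gelu(val) for val in row] for row in matmul(x, p["w1"])]
    return matmul(hidden, p["w2"])


def decoder_block(x: Matrix, p: dict, num_heads: int) -> Matrix:
    # Pre-norm: LayerNorm -> sublayer -> residual add, applied twice.
    h = add(x, causal_self_attention([layer_norm(r) for r in x], p, num_heads))
    return add(h, feed_forward([layer_norm(r) for r in h], p))


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, d_ff, seq_len = 8, 16, 4  # toy sizes; real models are vastly larger
params = {name: random_matrix(d_model, d_model, rng) for name in ("wq", "wk", "wv", "wo")}
params["w1"] = random_matrix(d_model, d_ff, rng)
params["w2"] = random_matrix(d_ff, d_model, rng)
x = random_matrix(seq_len, d_model, rng)
y = decoder_block(x, params, num_heads=2)
print(len(y), len(y[0]))  # 4 8
```

The causal mask is what makes this a decoder: changing a later token never changes the outputs at earlier positions, so the model can be trained to predict each next token from the past alone.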

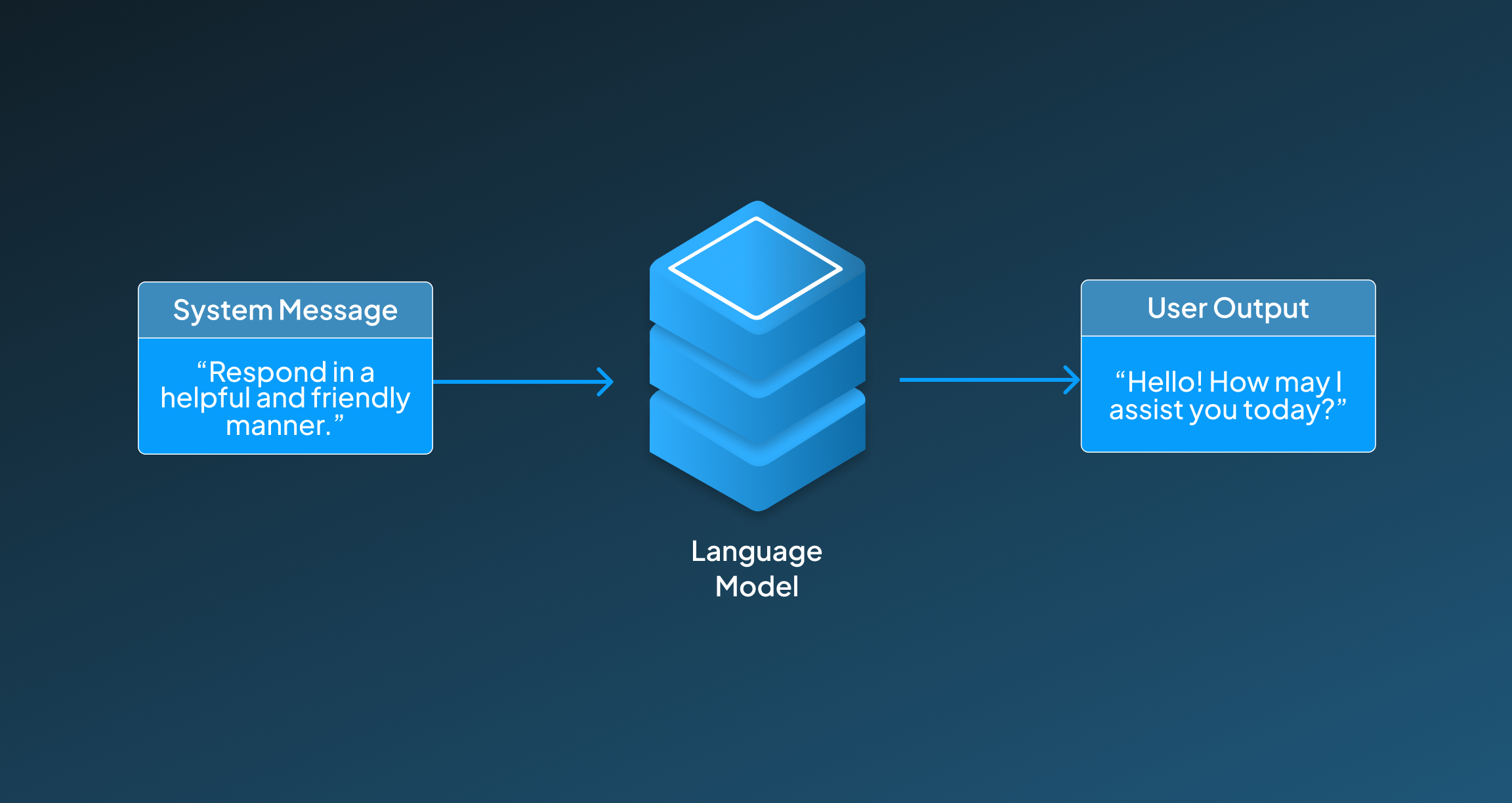

System Prompt & Instruction Handling Layer

The system prompt and instruction handling layer of GPT-4.1 lets developers set the chatbot's behavior, tone, and persona through structured prompts. This keeps your chatbot's responses consistent throughout the conversation. Strictly speaking, this is not a separate network layer: the system message is part of the input the model conditions on.

How it works:

- Behavior Control through System Messages: To keep the tone consistent, developers write system-level prompts that instruct the model to follow a particular tone, style, and role.

- Persistent Instruction Handling: The system prompt continues to shape every response, as long as it is sent with each request, unless it is overridden later in the interaction.
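Here is a minimal sketch of persistent system instructions, assuming the OpenAI Python SDK's Chat Completions interface, where each message is a dict with a `role` and `content`. The helper names `build_messages` and `ask_gpt41`, the bookstore persona "Ava", and the conversation are hypothetical. The API call is wrapped in a function that is never invoked below, so the sketch runs offline.

```python
def build_messages(system_prompt: str, history: list[dict], user_input: str) -> list[dict]:
    """Assemble a Chat Completions-style message list with the system prompt first.

    The Chat Completions API does not remember earlier requests, so the system
    message is re-sent on every turn; that is what keeps the persona "persistent".
    """
    return [
        {"role": "system", "content": system_prompt},
        *history,
        {"role": "user", "content": user_input},
    ]


def ask_gpt41(messages: list[dict]) -> str:
    """Send the conversation to GPT-4.1 (needs the `openai` package and OPENAI_API_KEY)."""
    from openai import OpenAI  # imported here so the rest of the sketch runs offline

    client = OpenAI()
    response = client.chat.completions.create(model="gpt-4.1", messages=messages)
    return response.choices[0].message.content


# Hypothetical persona for an online bookstore's support bot.
SYSTEM_PROMPT = (
    "You are Ava, a friendly support agent for an online bookstore. "
    "Answer in two sentences or fewer and never discuss competitors."
)
history = [
    {"role": "user", "content": "Do you ship internationally?"},
    {"role": "assistant", "content": "Yes, we ship to most countries."},
]
messages = build_messages(SYSTEM_PROMPT, history, "How long does delivery take?")
print([m["role"] for m in messages])  # ['system', 'user', 'assistant', 'user']
# reply = ask_gpt41(messages)  # uncomment to call the API
```

Keeping the persona in one constant and prepending it on every request is a simple way to make tone and role consistent; changing that constant mid-conversation is how an application would override it.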

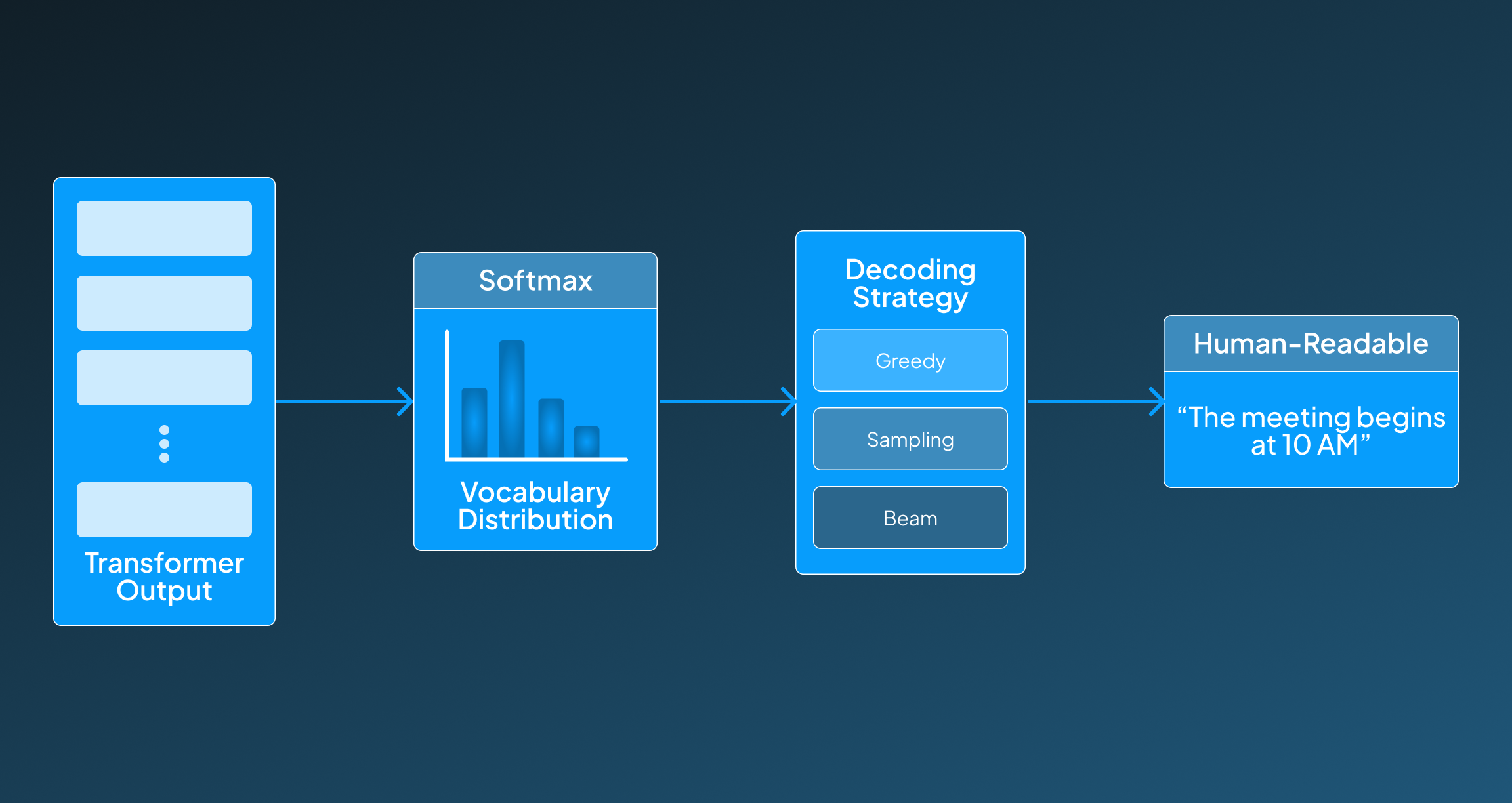

Output Decoder Layer

The output decoder layer is the final stage: it turns the model's internal computation into human-readable responses, aiming for coherent replies that are factually correct, consistent, and, most importantly, on point for the user's query.

How it works:

- Softmax decoder: A softmax function turns the model's scores over the vocabulary into a probability distribution for the next token in the sequence.

- Greedy, Sampling, or Beam Decoding: The decoding strategy controls the generation style. Greedy decoding always picks the most likely token and is deterministic, while sampling (tuned with settings such as temperature) produces more varied, creative output.

- Token post-processing: Finally, the subword tokens are converted back into readable text that anyone can understand.
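The decoding steps above can be sketched as follows: softmax with temperature, greedy selection, nucleus (top-p) sampling, and detokenization. The OpenAI API exposes `temperature` and `top_p` as request parameters; this sketch only mimics their general effect and is not the provider's implementation. Beam search is left out for brevity, and the four-token vocabulary and logits are hypothetical.

```python
import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores to probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(logits: list[float]) -> int:
    """Deterministic decoding: always choose the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sample_top_p(logits: list[float], temperature: float, top_p: float, rng: random.Random) -> int:
    """Nucleus sampling: draw from the smallest set of top tokens whose mass reaches top_p."""
    probs = softmax(logits, temperature)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus, mass = [], 0.0
    for i in ranked:
        nucleus.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return rng.choices(nucleus, weights=[probs[i] for i in nucleus], k=1)[0]


def detokenize(token_ids: list[int], vocab: list[str]) -> str:
    """Post-processing: map token ids back to subword strings and join them."""
    return "".join(vocab[i] for i in token_ids)


# Hypothetical 4-token vocabulary and model scores for the next position.
vocab = ["Hel", "lo", "!", " there"]
logits = [0.2, 3.1, 1.0, 2.4]
print(vocab[greedy(logits)])  # lo
print(vocab[sample_top_p(logits, temperature=0.7, top_p=0.9, rng=random.Random(42))])
print(detokenize([0, 1, 3, 2], vocab))  # Hello there!
```

Lower temperature and smaller `top_p` push sampling toward greedy behavior, which suits factual support bots; higher values allow more variety for creative use.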

Evaluating GPT-4.1 for Chatbot Development

Now that you have an idea of how GPT-4.1 works and how it helps you deliver a chatbot that meets user expectations, let's look more closely at the benchmarks that make it suitable for chatbot development.

| Benchmark | What It Tests | Importance for Chatbots | GPT-4.1 Performance |
|---|---|---|---|
| MT-Bench | Multi-turn conversation quality | Very High | Maintains context, coherence, and role consistency across turns. |
| AlpacaEval | Instruction following and helpfulness | Very High | Delivers accurate, clear, and well-aligned responses to diverse prompts. |
| Arena Leaderboard | Human-rated conversational performance | High | Ranks among the top models in Elo-rated preference evaluations, with an Elo score of 1408. |
| HHH Framework | Helpfulness, harmlessness, honesty | Very High | Strong performance in generating safe, honest, and non-toxic replies. |
| RLHF Evaluations | Human preference alignment | Very High | Fine-tuned with Reinforcement Learning from Human Feedback for strong alignment. |
| MMLU | Multidisciplinary knowledge (57 subjects) | Medium–High | Broad domain coverage with high factual accuracy across varied topics. |
| HELM | Holistic evaluation (robustness, fairness, etc.) | Medium–High | Balanced across robustness, reasoning, and generalization. |
| TruthfulQA | Tendency to generate truthful information | Medium–High | High truthfulness score, minimizing hallucinations in fact-based queries. |
| HumanEval / MBPP | Code generation (relevant for developer-focused chatbots) | Medium (use-case dependent) | Performs very well on code-related tasks, useful for developer chatbots. |
| GSM8K / MATH | Math reasoning | Medium (use-case dependent) | Good performance for general reasoning, though OpenAI's o-series reasoning models may surpass it in math-heavy use. |
| BIG-Bench | Uncommon edge-case tasks | Low–Medium | Performs well, but not a focus area for chatbot development. |

The table shows that GPT-4.1 is well suited to chatbot development, particularly for use cases where long-form reasoning, safe and helpful behavior, and consistent conversation quality matter. Its strong results on the benchmarks most critical to conversation make it a good fit for deploying text-based assistants.
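The Arena Leaderboard row refers to Elo-style ratings built from pairwise human votes. The sketch below shows the classic Elo update for a single vote so the scale is easier to interpret; the K-factor of 32 and the starting ratings are hypothetical, and the leaderboard's exact methodology may differ (it has described fitting a closely related Bradley–Terry model over all votes rather than updating one vote at a time).

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Elo model: probability that A's answer is preferred over B's."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> tuple[float, float]:
    """Update both ratings after one vote.

    score_a is 1.0 if A won, 0.0 if B won, and 0.5 for a tie.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# Two hypothetical chatbots start level; users prefer A's answer once.
print(elo_update(1000.0, 1000.0, score_a=1.0))  # (1016.0, 984.0)
# A 400-point gap means the higher-rated model is expected to win about 91% of votes.
print(round(expected_score(1400.0, 1000.0), 3))  # 0.909
```

The takeaway is that rating gaps, not absolute numbers, carry meaning: a model's score only says how often users are expected to prefer it over other models rated on the same scale.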

Applications of GPT-4.1 For Chatbots in Particular Domains

GPT-4.1 can power chatbots tailored to the needs of specific industries, which makes it useful for applications that need accurate, precise, and concise conversational responses. Some areas where GPT-4.1 can have a major impact are listed below:

Customer Service Automation

GPT-4.1 is well suited to customer service automation. Its ability to reason through user queries lets it hold human-like conversations that stay accurate and consistent in tone.

Education & Tutoring

By acting as personalized learning assistants that explain concepts, quiz students, and build study plans across subjects, GPT-4.1-driven chatbots can enhance the study workflow. The model's advanced reasoning, long-context support, and structured explanations make it well suited to adaptive, interactive learning.

Healthcare Support

GPT-4.1-powered chatbots can answer common patient questions, manage appointment bookings, and provide medication or insurance information. The model's high factual accuracy and alignment with safety principles make it a good fit for sensitive, compliance-focused conversations.

HR & Employee Support

GPT-4.1 can power a chatbot that answers HR and employee questions, which mostly revolve around policies, onboarding, training resources, and leave management. Here, GPT-4.1 keeps the tone consistent and, thanks to its long-context capabilities, handles internal documentation effectively.

Legal Assistance

A GPT-4.1-powered chatbot can provide legal support by explaining legal terms, procedures, and document summaries in plain language that anyone can follow. The model excels at instruction following and at producing clear, unbiased explanations, helping users understand legal content safely.

How is GPT-4.1 Enhancing Conversational AI?

GPT-4.1 brings important advances to conversational AI, enabling more natural, reliable, and contextually accurate responses that improve the overall interaction. It addresses limitations of its predecessors, which makes it efficient for applications such as chatbot development.

- Improved Context Handling: GPT-4.1 supports a context window of up to 1 million tokens, enabling longer, more coherent conversations.

- Advanced Reasoning: GPT-4.1 gives more thoughtful, logic-based responses that draw on knowledge across diverse topics.

- Better Instruction Following: GPT-4.1 adapts accurately to the provided prompts, user tone, and role-based instructions.

- Higher Factual Accuracy: By reducing hallucinations, GPT-4.1 produces more reliable and informative replies.

- Consistent Dialogue Flow: GPT-4.1 maintains context and character across multi-turn interactions, keeping responses coherent throughout the conversation.

- Human Preference Alignment: Training with RLHF helps GPT-4.1 respond in helpful, honest, and safe ways that resemble how a human would respond.

- Efficient Performance: GPT-4.1 is faster and more cost-effective than earlier GPT-4 versions while delivering stronger performance.

- Customizable Behavior: GPT-4.1 supports system prompts for tailoring a chatbot's personality, tone, and requirements.
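Even with a very large context window, cost and latency grow with the number of input tokens sent per request, so chatbots often cap how much history they resend. The sketch below keeps the system message (preserving the customized behavior) plus as many recent turns as fit a token budget. The 4-characters-per-token estimate is a rough assumption; in practice you would count tokens with a real tokenizer such as the `tiktoken` library. The HR persona for "Acme Corp." and the messages are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic of ~4 characters per token (an assumption, not a real tokenizer)."""
    return max(1, len(text) // 4)


def trim_history(system_msg: dict, turns: list[dict], budget: int) -> list[dict]:
    """Keep the system message plus the most recent turns that fit within `budget` tokens."""
    used = estimate_tokens(system_msg["content"])
    kept = []
    for msg in reversed(turns):  # newest first, so recent context survives
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return [system_msg] + kept[::-1]  # restore chronological order


system_msg = {"role": "system", "content": "You are an HR assistant for Acme Corp."}
turns = [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"message {i} " + "." * 70}
    for i in range(6)
]
trimmed = trim_history(system_msg, turns, budget=55)
print([m["content"][:9] for m in trimmed[1:]])  # ['message 4', 'message 5']
```

Dropping the oldest turns first is the simplest policy; applications that need older facts often summarize them into a short note instead of discarding them outright.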

Conclusion

GPT-4.1 marks a major step forward in chatbot development by combining strong reasoning, high factual accuracy, and conversational depth. Its long context window, precise instruction following, and alignment with human preferences make it an impactful release. It delivers reliable, natural interactions across domains such as customer service, education, healthcare, HR, and legal support, making it a valuable foundation for such applications.

Although its output is text-only, GPT-4.1's capabilities are finely tuned for scalable, intelligent communication. Its performance across these benchmarks shows that it is a strong choice for conversational AI chatbots, supporting safe, context-aware chats that meet the growing demands of modern conversational AI applications.

Are you thinking of building a chatbot for your specific industry use case? Discuss it with our experts at Centrox AI, and let's make excellence possible.

Muhammad Harris

Muhammad Harris, CTO of Centrox AI, is a visionary leader in AI and ML with 25+ impactful solutions across health, finance, computer vision, and more. Committed to ethical and safe AI, he drives innovation by optimizing technologies for quality.
