GPT-4.5 Use Cases in Chatbots: Revolutionizing Customer Interactions Across Industries

Explore how GPT-4.5 powers smarter chatbots, enhancing customer interactions across healthcare, e-commerce, banking, travel, education, and more.

6/25/2025

AI-driven chatbots have emerged as one of the most empowering advancements of recent years. With their ability to hold a conversation and answer a wide range of queries, chatbots are gaining users' trust and becoming an essential part of everyday workflows. OpenAI has been making significant strides in this domain, and with the introduction of GPT-4.5, it is addressing the growing needs of customer interaction across industries.

With these growing demands and expectations, LLMs are becoming a major driver of productivity and efficiency for individuals and organizations alike. Models like GPT-4.5 are not just bringing convenience; they are bridging the gap between what users expect and what is possible, while striving to become more accurate and precise than ever.

In this article, we walk through the GPT-4.5 model in detail: its core architecture, its benefits, essential use cases for transforming customer interaction, and its limitations, so that you can decide whether GPT-4.5 suits your needs.

How Are Chatbots Impacting Interaction?

Since the introduction of AI-driven chatbots, user experience has steadily improved. Whether a chatbot serves customer support, education, coding, legal, or healthcare assistance, the number of users keeps growing, indicating increasing trust and interest.

These AI-driven chatbots are becoming smarter, answering users' queries with relevant and precise information within a few seconds. This cuts down search time, improves user experience, and makes task execution faster and more efficient. This view is echoed by Fei-Fei Li (Co-Director of the Stanford Human-Centered AI Institute), who said:

“AI-powered chatbots are transforming digital interactions by delivering real-time, context-aware assistance. Their ability to understand intent and respond accurately is not just improving user experience—it’s redefining how we access knowledge and support.”

Looking ahead, AI-driven chatbots will likely not only make access to knowledge and support more convenient, but also support decision-making in complex workflows.

GPT-4.5

GPT-4.5 is an advanced model from OpenAI, released on February 27, 2025. It delivers fast, accurate, precise, and context-aware responses compared to the version released before it. Owing to this performance, it has gained significant trust in the industry for solutions across education, healthcare, legal, and customer support chatbots, driving efficiency with convenience.

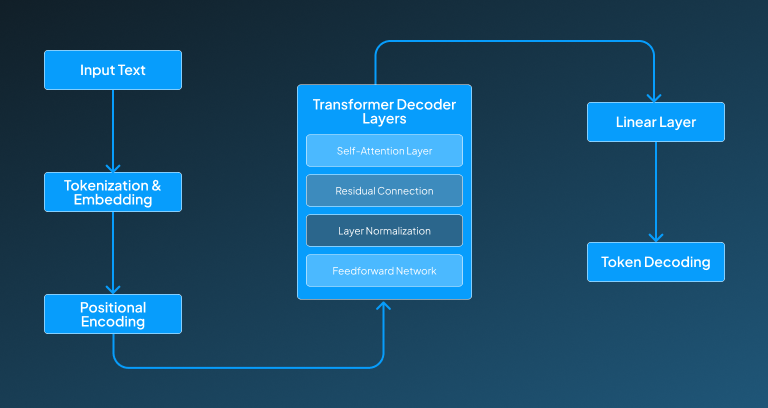

Base Architecture of GPT-4.5: Transformer Model

OpenAI has not published the full architecture of GPT-4.5, but like GPT-3 and GPT-4 it belongs to the GPT family, which builds on the transformer architecture introduced by Vaswani et al. (2017). GPT models use a decoder-only, autoregressive design: a self-attention mechanism lets the model generate one token at a time while considering the context of the previous tokens. Below, we break down the core components of this architecture:

Positional Encoding

A transformer has no built-in notion of word order, because self-attention on its own treats the input as an unordered set of tokens. Positional encoding injects each token's position into the model, which lets it distinguish between sentences whose meaning changes when a single word is moved.
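To make the idea concrete, here is a minimal sketch of positional encoding. OpenAI has not disclosed which positional scheme GPT-4.5 uses, so this assumes the sinusoidal encoding from Vaswani et al. (2017); the function name and the tiny dimensions are illustrative only.

```python
import math

def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Return a seq_len x d_model table of sinusoidal position encodings.

    Assumption: this is the scheme from Vaswani et al. (2017), not a
    confirmed detail of GPT-4.5.
    """
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share one frequency: 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

# Each row is added to the embedding of the token at that position.
pe = sinusoidal_positional_encoding(seq_len=4, d_model=6)
```

Because every position gets a distinct vector, two sentences with the same words in a different order produce different inputs to the model.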

Self-Attention Layers

The self-attention layers let the model weigh every earlier token in the sequence when generating the next one, which helps it capture deeper context and relationships between distant words.
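The following is a simplified, single-head sketch of causal self-attention in plain Python. As a loud simplification, it uses the token vectors themselves as queries, keys, and values; a real transformer applies learned projection matrices and many heads, and nothing here reflects GPT-4.5's actual weights or sizes.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x):
    """x is a list of token vectors; token t attends only to tokens 0..t."""
    d = len(x[0])
    out = []
    for t, query in enumerate(x):
        # Scaled dot-product scores against the current and earlier tokens only.
        scores = [
            sum(q * k for q, k in zip(query, x[j])) / math.sqrt(d)
            for j in range(t + 1)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible token vectors.
        out.append([sum(w * x[j][c] for j, w in enumerate(weights)) for c in range(d)])
    return out

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = causal_self_attention(tokens)
```

The causal restriction is what makes the model autoregressive: changing a later token never alters the output for an earlier position.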

Residual Connections

Residual connections add a layer's input directly to its output, so information can skip past the layer. This preserves information, mitigates vanishing gradients, and helps deeper models train effectively and generalize better on complex tasks.
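A residual connection is small enough to show in a few lines. This is an illustrative sketch; `tiny_sublayer` is a hypothetical stand-in for an attention or feedforward sublayer.

```python
def residual(sublayer, x):
    """Return x + sublayer(x), element-wise."""
    y = sublayer(x)
    return [xi + yi for xi, yi in zip(x, y)]

def tiny_sublayer(x):
    # Hypothetical sublayer that contributes only a small update.
    return [0.1 * xi for xi in x]

h = residual(tiny_sublayer, [1.0, 2.0, 3.0])
```

Even if the sublayer contributes almost nothing, the input still passes through unchanged, which is why stacking many such layers does not wash out the signal.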

Layer Normalization

Layer normalization standardizes the inputs within each layer. This stabilizes learning, speeds up training, and keeps gradients flowing smoothly so that the deep network converges better.
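As a rough sketch, layer normalization rescales one token's activation vector to zero mean and unit variance, then applies a learned scale and shift. The parameter names `gamma` and `beta` and the `eps` default follow common convention and are assumptions here, not published GPT-4.5 values.

```python
import math

def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize one activation vector, then apply scale (gamma) and shift (beta)."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    if gamma is None:
        gamma = [1.0] * n  # identity scale
    if beta is None:
        beta = [0.0] * n   # no shift
    # eps keeps the division stable when the variance is close to zero.
    return [g * (v - mean) / math.sqrt(var + eps) + b for v, g, b in zip(x, gamma, beta)]

normalized = layer_norm([1.0, 2.0, 3.0, 4.0])
```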

Feedforward Networks

Finally, feedforward layers apply a non-linear transformation after the attention operation, expanding the model's capacity and enabling the network to capture more complex patterns and representations in the data.
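The feedforward step can be sketched as two linear maps with a non-linearity in between, applied to each token independently. The GELU activation (tanh approximation) is the one commonly used in GPT-style models and is an assumption here; the weights below are made-up toy values.

```python
import math

def gelu(v):
    """Tanh approximation of the GELU activation."""
    return 0.5 * v * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (v + 0.044715 * v ** 3)))

def matvec(W, x, b):
    """Compute W @ x + b for a list-of-rows matrix W."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def feed_forward(x, W1, b1, W2, b2):
    """Expand to a wider hidden layer, apply GELU, then project back."""
    hidden = [gelu(h) for h in matvec(W1, x, b1)]
    return matvec(W2, hidden, b2)

# Toy weights: 2 -> 4 -> 2 (hypothetical values for illustration only).
W1 = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8], [0.7, 0.7]]
b1 = [0.0, 0.1, 0.0, -0.1]
W2 = [[0.2, -0.1, 0.4, 0.3], [-0.3, 0.5, 0.1, 0.2]]
b2 = [0.0, 0.0]
y = feed_forward([1.0, 2.0], W1, b1, W2, b2)
```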

Why is GPT-4.5 Good for a Chatbot Application?

GPT-4.5 has a strong base architecture that supports the performance industry applications require. The real question, however, is whether it meets the expectations for chatbot applications in different industries. Below, we list the reasons that make GPT-4.5 a strong fit for such use cases.

Contextual Understanding

GPT-4.5 has a context length of 128K tokens, allowing it to keep track of long conversations and generate responses that stay coherent, which suits multi-turn dialogues and long support sessions.

Emotional Intelligence

GPT-4.5 demonstrates improved emotional awareness, allowing it to detect sentiment and tone for more empathetic responses. This makes it ideal for chatbot use in sensitive domains like mental health, customer service, and education, where emotionally intelligent replies enhance user trust, comfort, and overall conversational experience.

Fast and Relevant Responses

GPT-4.5 is designed to return contextually accurate answers to complex queries quickly. This makes it well suited to customer support chatbots, where delivering rapid and relevant responses is critical. Actual latency depends on the deployment, so measure it against your own workload.

Advanced Reasoning Capabilities

The advanced reasoning abilities of GPT-4.5 enable it to make complex logical deductions. By understanding the intent behind the user query, it generates well-thought-out responses that have the required depth and clarity.

Versatile Across Domains

GPT-4.5 is trained on a diverse dataset covering many fields, including healthcare, finance, legal, education, and technology. This enables it to generate meaningful and useful responses to domain-specific queries.

Reduced Hallucinations

With GPT-4.5, responses contain less misleading or hallucinated information, which would otherwise hurt the user experience and, eventually, users' workflows. This improves the reliability and trustworthiness of your industry-specific chatbot.

Multimodal Readiness

GPT-4.5 offers multimodal readiness by handling visual inputs, with tasks like interpreting screenshots, diagrams, or uploaded documents. This makes it an appropriate LLM choice for industries that want a user-friendly chatbot, especially for customer support.

Improved Instruction Following

GPT-4.5 is better at understanding and executing complex or multistep instructions. With this improved grasp of the intent behind a query, it provides more structured and task-oriented responses.

Better Language Support

By supporting a variety of languages, GPT-4.5 enhances the accessibility of any solution it is built into. This helps make a chatbot multilingual or localized for particular customer bases, ultimately improving the user experience.

Personalization Potential

GPT-4.5's context awareness enables basic personalization, such as remembering previous inputs within a conversation or following tone preferences, which makes interaction with the chatbot feel more personal and human.

Safer Conversations

GPT-4.5 supports safer conversations through content moderation and alignment safeguards that reduce the occurrence of toxic or inappropriate responses, making interaction between the chatbot and the user safer.

Integration Friendly

As part of OpenAI's GPT family, it integrates easily with OpenAI's API and tools, making it easier for engineers and developers to customize, deploy, and scale chatbots wherever it is used.
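As a hedged illustration of what integration involves, the sketch below assembles a chat-style request body using the role/content message shape of OpenAI's Chat Completions API. It makes no network call. The helper name `build_chat_request` and the model identifier `YOUR_MODEL_ID` are placeholders, so check OpenAI's documentation for the model names available to your account.

```python
def build_chat_request(model, system_prompt, history, user_message):
    """Assemble a chat request body from a system prompt, prior turns, and a new message."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)  # earlier user/assistant turns, oldest first
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages}

request_body = build_chat_request(
    model="YOUR_MODEL_ID",  # placeholder, not a real model name
    system_prompt="You are a polite support assistant for an online store.",
    history=[
        {"role": "user", "content": "Where is my order?"},
        {"role": "assistant", "content": "Could you share your order number?"},
    ],
    user_message="It is 10482.",
)
```

Keeping request construction in one function makes it straightforward to swap models or adjust the system prompt per industry without touching the rest of the chatbot.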

Use Cases of GPT-4.5 For Improving Customer Interaction

GPT-4.5 can be transformative, especially for improving the customer interaction experience. Implemented in an industry, it can not only streamline workflows but also make them more efficient and productive.

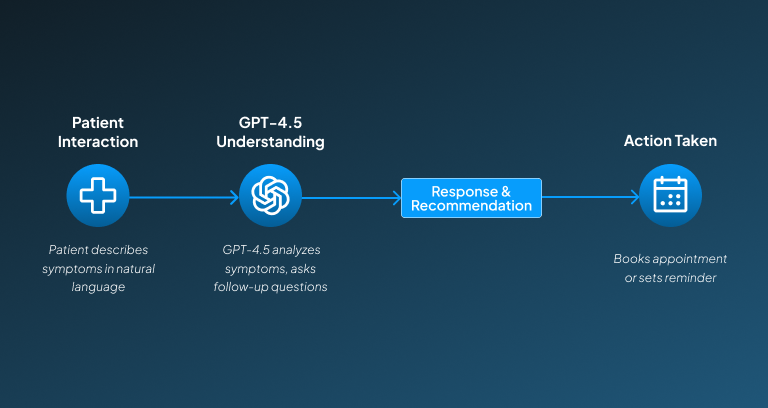

Healthcare

- Overview: Patients often struggle to communicate their symptoms because of a language barrier, or have difficulty booking an appointment with a reliable healthcare provider. An AI-driven chatbot can address both issues.

- How GPT-4.5 Works: GPT-4.5 understands natural-language symptom descriptions, asks follow-up questions, and suggests likely causes or directs users to specialists. It can also schedule appointments or set reminders on the user's instructions.

- Impact: Such a chatbot can reduce waiting times, significantly cut the administrative load on hospitals, and make healthcare more accessible.

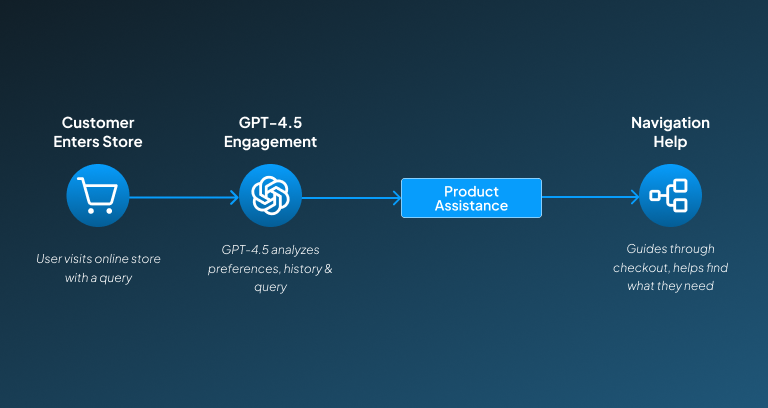

E-commerce

- Overview: Customers need rapid, reliable, and relevant support during their online shopping journey. A GPT-4.5 chatbot can improve the user experience by providing richer interaction.

- How GPT-4.5 Works: GPT-4.5 analyzes user queries, preferences, and previous interactions, then suggests products, answers specific questions, and helps users navigate the store.

- Impact: E-commerce stores can see significant boosts in user satisfaction, reduced cart abandonment, and higher conversion rates through personalized, real-time support.

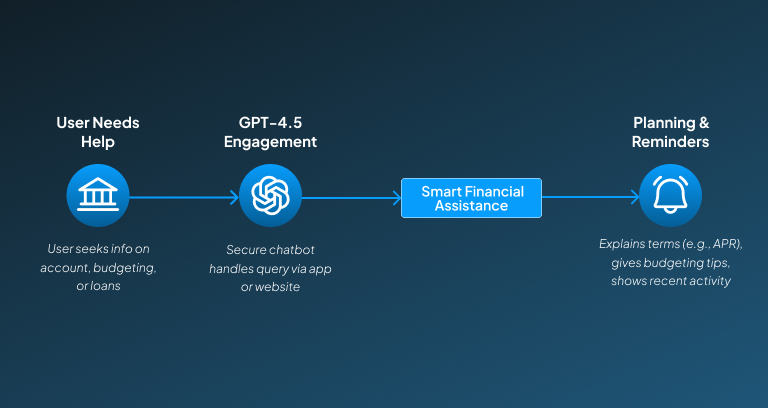

Banking

- Overview: Getting information from a bank about account activity, budgeting, or loan options can be tiring and time-consuming because of slow processes. Here, a GPT-4.5-driven chatbot can act as a smart financial advisor.

- How GPT-4.5 Works: GPT-4.5 handles secure inquiries, explains complex terms like APR or credit score, and helps with financial planning based on the user's instructions.

- Impact: Enhances customer trust, promotes financial literacy, and offloads repetitive queries from human agents.

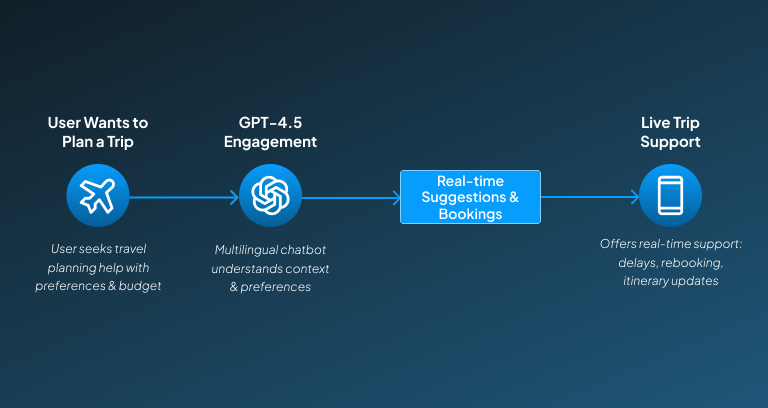

Travel & Hospitality

- Overview: Travelers need a reliable, instant solution that helps them plan and book trips according to their preferences and budget. With a GPT-4.5-driven solution, they get a multilingual, context-aware virtual travel agent.

- How GPT-4.5 Works: A GPT-4.5-driven travel and hospitality solution understands destination preferences, compares travel options, books hotels and flights, and provides real-time support during trips.

- Impact: Such a solution elevates customer experience, significantly reduces support tickets, and builds loyalty through personalized, proactive engagement.

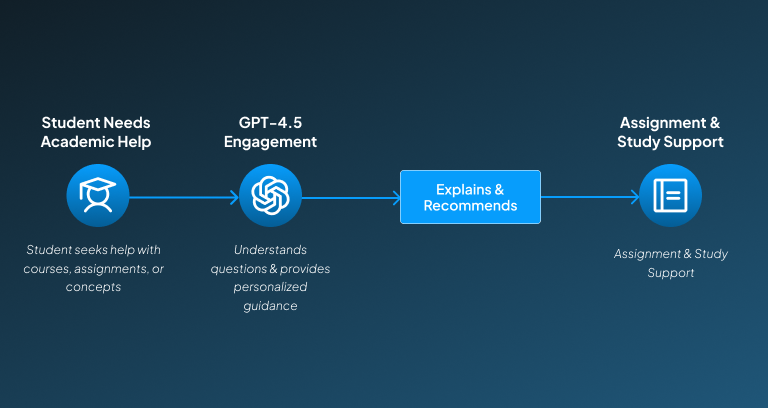

Education

- Overview: As academic syllabi get tougher, students need constant academic guidance to keep up with the rising complexity. A GPT-4.5-driven chatbot can help with course selection, provide tutorials, and assist with assignments.

- How GPT-4.5 Works: GPT-4.5 supports students by answering course-related queries, explaining concepts, recommending learning paths, and providing writing or study tips.

- Impact: Students gain self-paced learning support, which reduces their reliance on human counselors and can ultimately improve academic outcomes.

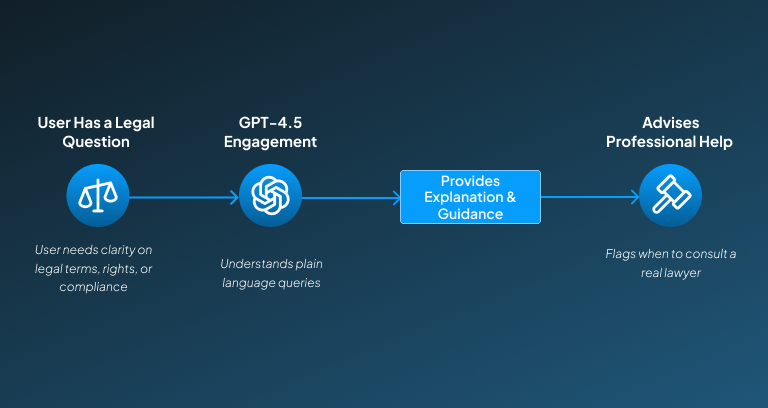

Legal

- Overview: Getting trustworthy legal support for even basic questions about contracts, compliance, or rights can be difficult. A GPT-4.5-powered chatbot can serve as a personalized legal advisory assistant.

- How GPT-4.5 Works: GPT-4.5 interprets user queries written in plain language, draws on legal knowledge to explain terms or processes, and offers general guidance while indicating when professional legal advice is needed.

- Impact: This application improves access to legal understanding, reduces law firms' intake load, and enhances client trust through faster, 24/7 availability.

Limitations of GPT-4.5

While GPT-4.5 offers several advantages for optimizing workflows across domains, it has limitations that can affect performance. We describe them below so that you can plan around them and avoid performance disruptions in your solution.

Limited Understanding Beyond Training Data

GPT-4.5 depends on its training data: it produces responses based on patterns learned from that data. As a result, it may struggle with queries about new concepts, niche knowledge, or rapidly evolving domains, and might generate irrelevant responses.

Still Prone to Hallucinations

GPT-4.5 can occasionally generate factually incorrect or misleading information, especially in complex or ambiguous queries. It may confidently provide wrong answers without citing sources. Inaccuracy can erode user trust and lead to costly mistakes in sectors like healthcare, law, or education.

No Native Source Attribution

GPT-4.5 cannot verify or cite exact sources on its own unless it is paired with third-party tools or retrieval systems built for that purpose. This is a concern for applications like academic or legal tools, where knowing the source of information is critical for judging its reliability.
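One common way to work around this is retrieval: fetch relevant passages first, label each with an id, and instruct the model to cite those ids. The sketch below is a deliberately naive illustration that uses keyword overlap for retrieval; the function names, document ids, and prompt wording are all hypothetical, and a production system would typically use a proper search index or embeddings.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share (naive keyword overlap)."""
    query_words = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(query_words & set(doc["text"].lower().split()))
        if overlap:
            scored.append((overlap, doc))
    # Highest overlap first; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_cited_prompt(query, passages):
    """Build a prompt that asks the model to answer only from labeled sources."""
    sources = "\n".join(f"[{p['id']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below and cite their ids in brackets.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )

docs = [
    {"id": "policy-1", "text": "refund policy allows returns within 30 days"},
    {"id": "ship-2", "text": "shipping takes the usual five days"},
    {"id": "help-3", "text": "contact support by email"},
]
question = "what is the refund policy"
prompt = build_cited_prompt(question, retrieve(question, docs))
```

Because every passage carries an id, the chatbot's answer can be traced back to a specific document, which is what academic and legal tools need.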

Lacks True Understanding

GPT-4.5 does not understand concepts or language the way humans do. This can result in inaccurate or irrelevant responses that lack the expected information.

Legal and Ethical Boundaries

GPT-4.5 might unintentionally generate biased, sensitive, or unethical content in some scenarios if it is not carefully fine-tuned or moderated. Responses that cross ethical boundaries can affect high-stakes decision-making and compromise the chatbot's trustworthiness.

Multi-Turn Context Limits

Although GPT-4.5 handles longer contexts (up to 128K tokens), its performance may degrade over very long or noisy conversations. In a chat-based application, this might result in generating confused, repetitive, or irrelevant responses in extended interactions.
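A simple mitigation is to trim the conversation before each request so that only the system prompt and the most recent turns are sent. The sketch below assumes a whitespace word count as a stand-in for real token counting; an actual deployment should use the model's tokenizer, and the helper name `trim_history` is hypothetical.

```python
def trim_history(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep system messages plus the most recent turns that fit the token budget.

    count_tokens defaults to a crude word count (an assumption for this sketch).
    """
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards from the newest turn and stop at the first one that no longer fits.
    for message in reversed(turns):
        cost = count_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))

conversation = [
    {"role": "system", "content": "You are helpful"},
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six seven eight nine"},
]
trimmed = trim_history(conversation, max_tokens=10)
```

Dropping the oldest turns first keeps the latest user request intact; summarizing the dropped turns instead is another option when earlier context still matters.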

Resource-Intensive

Running GPT-4.5 at scale consumes significant computational resources, which makes the solution expensive and potentially inaccessible to smaller organizations with limited budgets.

Conclusion

GPT-4.5 is not just revolutionizing chatbot technology by enabling smarter, faster, and more context-aware customer interactions across industries; it is helping us step into a more futuristic world. Implemented in healthcare, e-commerce, banking, and legal advisory, it brings advanced reasoning, multilingual support, and the ability to handle complex queries, making your tool powerful enough to enhance user experience.

However, understanding its limitations is important: it lacks access to real-time knowledge and can hallucinate, which might affect its performance. It is therefore important to integrate GPT-4.5 strategically so that it can automate support, boost personalization, and increase operational efficiency. As AI continues to evolve, GPT-4.5 stands as a transformative force in reshaping how businesses connect with customers through intelligent, reliable, and scalable chatbot solutions.

Not sure where to start with a personalized chatbot for your industry? Share your concerns with our experts at Centrox AI, and take the first step towards a more efficient future.

Muhammad Harris

Muhammad Harris, CTO of Centrox AI, is a visionary leader in AI and ML with 25+ impactful solutions across health, finance, computer vision, and more. Committed to ethical and safe AI, he drives innovation by optimizing technologies for quality.
