How Structured Output from LLMs Can Revolutionize NLP Applications

Learn how structuring the output of LLMs makes it easier for downstream systems to consume and improves the performance of NLP applications.

3/4/2025

artificial intelligence

16 mins

LLMs and their applications are not only delivering reliable advancements but also adding convenience by completing complex tasks within minutes. A key element behind these innovations is the structured output these models can deliver.

As LLMs mature in their ability to produce structured output, they are transforming Natural Language Processing (NLP) applications and opening up new possibilities.

In this blog, we will help you understand the possibilities that structured LLM output offers businesses looking to improve their growth and productivity.

LLM Definition

A Large Language Model (LLM) is an advanced artificial intelligence system that aims to generate output closely resembling how a human would respond. To do this, these models are trained on massive amounts of data from diverse sources, including books, websites, articles, and more. LLMs work by learning the complex underlying patterns in this data and using them to produce a relevant response.

What Is Structured Output from an LLM?

Structured output from an LLM is output returned in a well-organized format rather than as plain text made up of running sentences. Structured output makes it easier for applications, databases, and other systems to process and use the data efficiently, which matters most in larger applications where specific, well-defined fields are a critical requirement.

Format of Structured Output from LLM

LLM output can be structured in several formats. Each format has its own strengths and suits particular applications. Below are some common formats for structuring LLM responses.

JSON Format

JSON (JavaScript Object Notation) is a lightweight format for LLM output that a machine or application can process easily. In JSON, the output is structured as key-value pairs, which makes it both readable for people and straightforward for an application to parse.

Representation of JSON Format

The snippet below shows LLM output structured as JSON, where each value is paired with a key that names the kind of data it holds.

{

"name": "John Doe",

"age": 30,

"profession": "Software Engineer"

}
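
As a minimal sketch of why key-value output is easy for an application to process, the Python snippet below parses the same JSON with the standard `json` module and reads fields by key. The variable names are illustrative only.

```python
import json

# Raw text as it might come back from a model (same data as the snippet above).
raw_output = '{"name": "John Doe", "age": 30, "profession": "Software Engineer"}'

# json.loads turns the text into a regular Python dict.
person = json.loads(raw_output)

# Fields can now be read by key instead of being searched for in free text.
summary = f"{person['name']} ({person['age']}) works as a {person['profession']}"
print(summary)
```

Because `age` arrives as a number rather than a string, the application can compare or aggregate it without any extra text processing.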

Applications of JSON Format

JSON-structured LLM output integrates seamlessly with APIs, databases, and automation workflows, which improves data retrieval, organization, and communication between the AI model and applications. The format is very versatile and is widely used in applications such as:

Chatbots & Virtual Assistants

Chatbots and virtual assistants use JSON-structured LLM output because it helps them provide more insightful responses. The query, response, and user data are stored as key-value pairs, which keeps the conversation flow interactive.

Example Snippet:

{

"user_query": "What is the weather today?",

"bot_response": "It's 25°C and sunny."

}

Example: Amazon Alexa & Google Assistant

APIs & Automation Systems

JSON-structured LLM responses are also used in APIs and automation systems to ensure structured communication between the backend and frontend. Generated summaries, translations, and recommendations, for example, are typically sent through an API as JSON.

Example Snippet:

{

"temperature": "25°C",

"condition": "Sunny",

"humidity": "65%"

}

Example: OpenWeather API

Data Analytics & Business Intelligence

JSON-structured LLM responses are also very useful in data analytics and business intelligence, because the format gives structure to reports, logs, and analytics results, making it convenient for AI-driven systems to feed dashboards with insights.

Example Snippet:

{

"sales_trend": "upward",

"percentage_increase": "15%",

"top_selling_product": "HP Spectre x360"

}

Example: Google Analytics

E-commerce & Recommendation Systems

JSON-structured LLM output plays an important role in product recommendation systems for e-commerce platforms. Because the relevant data is stored as key-value pairs, the system can use it directly to provide more personalized recommendations.

Example Snippet:

{

"user": "JohnDoe",

"suggested_laptops": ["MacBook Air", "Dell XPS 13"]

}

Example: Amazon & Shopify

AI-Powered Content Generation

JSON-structured LLM output can be used to generate comprehensive content such as blogs, reports, and automated data curation. The format pairs each piece of content with a descriptive key, which helps produce more contextually accurate content.

Example Snippet:

{

"title": "Top Laptops for Content Creators",

"intro": "Here are the best laptops for designers and video editors...",

"sections": ["Performance", "Battery Life", "Portability"]

}

Example: SEMrush & Ahrefs

AI-Assisted Coding & Development

One of the major applications of JSON-based LLM output is AI-assisted coding and development. Storing the response as key-value pairs (for example, the language and the generated code) lets development tools handle each part of a coding response separately.

Example Snippet:

{

"language": "Python",

"code": "def greet(): print('Hello, world!')"

}

Example: GitHub Copilot

Tables Format

In the tabular format, the LLM-generated response is organized into rows and columns, where each column represents an attribute and each row holds the related data extracted from the response. This makes analysis easier, since attributes can be compared and insights extracted at a glance, which suits applications such as analytics, finance, and research.

Representation of Tabular Format

A table consists of rows and columns. Each column represents an attribute, and each row holds the values extracted from the LLM response for one record.

| Name | Age | Profession |

|---|---|---|

| John | 30 | Frontend Engineer |

| Tom | 28 | Backend Engineer |
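
A minimal sketch of how an application might turn a pipe-delimited table like the one above into records it can query. The helper name `parse_markdown_table` is hypothetical, and the code assumes a well-formed table with a header row followed by a separator row.

```python
def parse_markdown_table(text: str) -> list[dict[str, str]]:
    """Convert a pipe-delimited table into a list of row dictionaries."""

    def split_row(line: str) -> list[str]:
        # Drop the outer pipes, then split the remaining text into cells.
        return [cell.strip() for cell in line.strip("|").split("|")]

    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    header = split_row(lines[0])
    # lines[1] is the |---|---| separator row, so data starts at index 2.
    return [dict(zip(header, split_row(line))) for line in lines[2:]]


table_text = """
| Name | Age | Profession |
|---|---|---|
| John | 30 | Frontend Engineer |
| Tom | 28 | Backend Engineer |
"""

rows = parse_markdown_table(table_text)
print(rows[0]["Profession"])
```

Each row becomes a dictionary keyed by column name, so the application can filter or compare records without relying on column positions.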

Applications of Tabular Format

Tabular structuring of LLM responses has many useful applications for businesses. Organizing the generated data in a table has a significant impact on data-driven applications, because it makes analysis, comparison, visualization, and research easier to follow.

Financial & Market Reports

In finance and market reporting, data plays a critical role in making sound predictions. Structuring LLM output as a table organizes metrics, stock trends, and revenue comparisons in an easy-to-read format, which supports more accurate reports and forecasts.

| Technology Trend | Growth Forecast (2024-2026) | Industry Adoption (%) | Key Players | Projected Impact |

|---|---|---|---|---|

| AI-Powered Chatbots | 35% CAGR | 78% | OpenAI, Google, IBM Watson | Enhanced CX & support |

| Edge Computing | 28% CAGR | 65% | AWS, Microsoft, Cisco | Faster processing |

Example: Bloomberg Terminal

Product Feature Comparison

Structuring the generated LLM response as a table makes it easier to compare particular features and specifications side by side, so users get a more comprehensive answer when they need a detailed feature comparison.

| Features | HP Spectre x360 16 | Dell XPS 15 | Lenovo ThinkPad X1 |

|---|---|---|---|

| Processor | Intel Core i7 13th Gen | Intel Core i7 13th Gen | Intel Core i7 13th Gen |

| RAM | 16GB DDR5 | 32GB DDR5 | 16GB DDR5 |

| Storage | 1TB | 1TB | 1TB |

| Display | 16’’ OLED Touchscreen | 15.6’’ 3.5k OLED | 14’’ 2.8k OLED |

| Use Case | Content Creators & Executives | Power Users & Designers | Business & Portability |

| Price | $1,599 | $1,899 | $1,799 |

| Review | (9/10) | (8.5/10) | (8.5/10) |

Example: CNET, TechRadar

Medical Reports & Diagnoses

Structuring LLM output as a table makes it easier to compare symptoms, treatment plans, and patient data, which supports more insightful and more accurate medical reports and diagnoses.

| Patient ID | Symptoms | Diagnosis |

|---|---|---|

| 001 | Cough, Sore Throat | Influenza |

| 002 | Chest Pain | Hypertension |

Example: IBM Watson Health

Project Management & Task Tracking

Beyond the domains above, the tabular approach can also improve how tasks are organized. Status updates, deadlines, and tasks are laid out in a table, which makes them easy to read and track.

| Name | Role | Projects | Contributions |

|---|---|---|---|

| John | Project Management | Research | Prototyping |

| Tom | Engineering | Planning | Specifications |

| Peter | Design | Implementation | Research |

Example: Monday.com AI Assistant

Scientific Research

Scientific research involves in-depth study to find and organize the data that supports a particular line of inquiry. Structuring LLM responses as a table keeps the findings organized around the research question, so that follow-up analysis can stay focused on that direction.

| Parameter | Traditional Diagnosis | AI Assisted Diagnosis | Improvement (%) | Source |

|---|---|---|---|---|

| Accuracy in Detecting Diseases | 78% | 92% | +18% | Lancet AI (2024) |

| Time Taken for Diagnosis (Avg.) | 45 minutes | 10 minutes | -77% | JAMA AI Review (2023) |

| Patient Outcome Improvement | Moderate Improvement | Significant Improvement | +25% | Nature Medicine (2024) |

| Adoption Rate in Hospitals | 40% | 85% | +112% | WHO AI Report (2024) |

Example: Elsevier AI

Lists

Lists are another way to organize LLM output. A list presents the data in a clear, concise, and scannable layout, which makes it useful for applications that provide quick overviews, recommendations, and step-by-step instructions.

Representation of Lists Format

The list format is further divided into two types, each with its own use cases.
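
A minimal sketch of the difference between the two types, assuming the model returns its items as a plain array of strings. The function `render_list` and the sample items are illustrative only.

```python
def render_list(items: list[str], ordered: bool) -> str:
    """Render items as a numbered list (ordered) or a bulleted list (unordered)."""
    lines = []
    for index, item in enumerate(items, start=1):
        # Ordered lists carry the position; unordered lists use a plain bullet.
        prefix = f"{index}." if ordered else "-"
        lines.append(f"{prefix} {item}")
    return "\n".join(lines)


steps = ["Identify common customer queries.", "Choose a chatbot platform."]
benefits = ["Faster diagnostics", "Personalized recommendations"]

ordered_text = render_list(steps, ordered=True)
unordered_text = render_list(benefits, ordered=False)
print(ordered_text)
print(unordered_text)
```

Keeping the items as bare strings and adding the numbering at display time means the same response can be rendered either way.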

Ordered List Format

The ordered list format organizes the response into a numbered list, making it ideal for processes, tutorials, and step-by-step guides. Because the information follows a logical sequence, the workflow is clearer and easier to read.

Prompt: "Steps to automate customer service using AI."

AI-Generated Response (Structured as an Ordered List):

{

"response": [

"1️⃣ Identify common customer queries and FAQs.",

"2️⃣ Choose an AI chatbot or virtual assistant platform.",

"3️⃣ Train the AI model using past customer interactions.",

"4️⃣ Integrate the chatbot with your website and apps.",

"5️⃣ Monitor chatbot performance and refine responses."

]

}

Unordered List Format

The unordered list format structures the output as a bulleted list. It suits applications that summarize features, key points, and general recommendations, because bulleted content is easier to scan when decisions need to be made quickly.

Prompt: "What are the key benefits of using AI in healthcare?"

AI-Generated Response (Structured as an Unordered List):

{

"response": [

"🔹 Faster and more accurate diagnostics",

"🔹 Predictive analytics for disease prevention",

"🔹 Automated administrative tasks to reduce workload",

"🔹 AI-assisted robotic surgeries for precision",

"🔹 Personalized treatment recommendations"

]

}

Applications of List Format

The list format is an effective way to organize LLM output and helps deliver contextually accurate answers for specific applications. It is used in various applications, which are listed below:

Step-by-Step How-To Guides

The list format is very impactful for step-by-step guides, because it gives the LLM output a structured flow and makes it easy to produce a comprehensive guide whenever the user asks for one.

Example Snippet: Microsoft Support Article – "How to Fix a Slow Windows Laptop"

If a user clicks on a fix, Microsoft Support guides them through the resolution process using a numbered list.

{

"response": [

"1️⃣ Open Task Manager by pressing `Ctrl + Shift + Esc`.",

"2️⃣ Click on the 'Startup' tab.",

"3️⃣ Identify and right-click unnecessary startup programs.",

"4️⃣ Select 'Disable' to prevent them from running at startup.",

"5️⃣ Restart your computer to apply changes."

]

}

Chatbots & Virtual Assistants

Chatbots and virtual assistants also use the list format for LLM output. The output is organized into a list that is quick to scan and lets the chatbot offer users multiple response options.

Example Snippet: AI Chatbot – Customer Support for an E-Commerce Store

If a customer asks, "How do I return a product?", the chatbot provides step-by-step guidance.

{

"response": [

"1️⃣ Go to 'Orders' in your account.",

"2️⃣ Click 'Request Return' on the item.",

"3️⃣ Select a reason & upload images (if needed).",

"4️⃣ Print the return label & pack securely.",

"5️⃣ Drop off at the nearest courier center."

]

}

Product Comparisons

For product comparisons, the list format organizes the LLM response so that pros and cons can be scanned quickly, resulting in a detailed comparison that improves users' decision making.

Example Snippet: Amazon Customer Review for a Laptop

When a customer leaves a review, Amazon provides a quick summary of key points using bullet points.

{

"review_summary": {

"Pros": [

"💻 Fast performance with Intel Core i7 processor",

"🔋 Long battery life – lasts up to 12 hours",

"📺 High-resolution 4K display with vibrant colors",

"🎧 Excellent speakers for media consumption",

"⚡ Lightweight and portable design"

],

"Cons": [

"❌ Expensive compared to competitors",

"❌ Limited USB-C ports",

"❌ Fan noise can be loud under heavy load"

]

}

}

Significance of Structuring Output From LLM

Structuring LLM responses in a suitable format is critical to the readability, usability, and efficiency of many applications. Proper structuring keeps the output organized, makes it easier to interpret, and enables faster processing. This view is reflected in a statement attributed to Andrew Ng (AI researcher and co-founder of Google Brain):

"AI systems are only as useful as the clarity of their outputs."

It is therefore essential to ensure that the responses produced by LLMs are clear, actionable, and easy to interpret, with as little ambiguity as possible, so that they can be used in automated workflows.

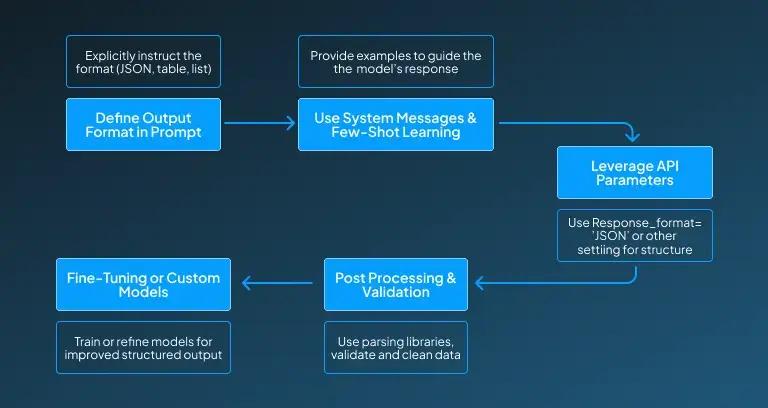

How Can We Receive Well Structured Output from LLM?

Receiving well-structured output from Large Language Models (LLMs) is essential for getting the desired outcome. It requires a combination of prompt engineering, API configuration, and post-processing techniques. Following the systematic flow below helps ensure clear, accurate, and helpful AI-generated responses:

Define the Output Format in the Prompt

To receive a well-structured response, explicitly instruct the model to return structured data. This tells the model which format the output should follow, whether it is JSON, a table, or a list. The best way to do this is to give specific instructions such as:

- "Respond in JSON format with keys: title, summary, and content."

- "Provide a step-by-step guide using an ordered list."

“In this way the model ensures to return the response in the desired structure which follows detailed instructions, ensuring consistency and reliability in the responses.” (Minjun Son et al., 2024)
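
A minimal sketch of how such an instruction could be attached to every request in code. The helper `build_prompt` and the extra "return only the JSON object" sentence are assumptions added for illustration, not part of any particular library.

```python
def build_prompt(task: str, keys: list[str]) -> str:
    """Append an explicit output-format instruction to a task description."""
    key_list = ", ".join(keys)
    return (
        f"{task}\n\n"
        f"Respond in JSON format with keys: {key_list}.\n"
        "Return only the JSON object, with no extra text before or after it."
    )


prompt = build_prompt("Summarize the article below.", ["title", "summary", "content"])
print(prompt)
```

Generating the instruction from a list of keys keeps the prompt and any later validation step in sync, since both can be driven by the same list.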

Use System Messages & Few-Shot Learning

After defining the instructions, add a few examples to the prompt so the model can pick up the expected structure. The model follows the pattern in the examples closely, which encourages consistent and relevant output. For instance, a chatbot prompt may include:

{

"question": "How to reset my password?",

"response": {

"step1": "Go to settings and click 'Reset Password'.",

"step2": "Enter your email and receive a reset link.",

"step3": "Follow the link to set a new password."

}

}

Leverage API Parameters for Format Control

The next step is to set API parameters that constrain the response format. Where the provider supports it, a setting such as response_format="json" (the exact name and value vary by API) makes the model return output in the desired format, which makes it easier to parse.
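
A minimal sketch of a request that combines a system message, one few-shot example, and a format parameter. The field names follow the general shape of OpenAI's Chat Completions API (`messages` with roles, `response_format` of type `json_object`) as an assumption; other providers name these differently. The model name is a placeholder, and nothing is actually sent over the network.

```python
import json

# One worked example (few-shot) showing the structure expected back.
example_answer = {
    "question": "How to reset my password?",
    "response": {
        "step1": "Go to settings and click 'Reset Password'.",
        "step2": "Enter your email and receive a reset link.",
        "step3": "Follow the link to set a new password.",
    },
}


def build_request(user_question: str) -> dict:
    """Assemble a chat-style request payload; sending it is left to the caller."""
    return {
        "model": "example-model",  # placeholder, not a real model name
        "messages": [
            {
                "role": "system",
                "content": "You are a support assistant. Always answer with a "
                "JSON object that has the keys 'question' and 'response'.",
            },
            # The example question and answer pair the model should imitate.
            {"role": "user", "content": example_answer["question"]},
            {"role": "assistant", "content": json.dumps(example_answer)},
            # The real question comes last.
            {"role": "user", "content": user_question},
        ],
        # Assumed parameter shape; check the provider's documentation.
        "response_format": {"type": "json_object"},
    }


request = build_request("How do I change my email address?")
print(json.dumps(request, indent=2))
```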

Post-Processing & Validation

Once the steps above are in place, it is crucial to check what the LLM actually generates. Use parsing libraries (e.g., JSON parsers) to validate and clean the data. “Studies highlight that despite the exceptional ability of LLMs to deliver natural sounding responses, the concern of data memorization, bias, and inappropriate language still persist, therefore by implementing appropriate validation methodologies we can enhance the readability of outputs.” (Michael et al., 2022) Validation ensures that outputs meet application-specific requirements and are accurate before they are integrated with downstream applications.
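
A minimal sketch of such a validation step using Python's standard `json` module. The required keys and the function name `validate_output` are assumptions chosen to match the earlier prompt example; a real application would substitute its own schema.

```python
import json
from typing import Optional

# Keys the application expects, with the type each value must have (assumed schema).
REQUIRED_KEYS = {"title": str, "summary": str, "content": str}


def validate_output(raw: str) -> tuple[Optional[dict], list[str]]:
    """Parse model output and return (data, errors); data is None if invalid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value is not a JSON object"]

    errors = []
    for key, expected_type in REQUIRED_KEYS.items():
        if key not in data:
            errors.append(f"missing key: {key}")
        elif not isinstance(data[key], expected_type):
            errors.append(f"wrong type for key: {key}")
    return (None if errors else data), errors


good = validate_output('{"title": "A", "summary": "B", "content": "C"}')
bad = validate_output('{"title": "A", "summary": 5}')
broken = validate_output("Sure! Here is your JSON:")
print(good, bad, broken, sep="\n")
```

Returning the list of errors rather than raising lets the caller decide whether to retry the request, fall back to a default, or log the failure.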

Fine-Tuning or Custom Models

Fine-tuning or customizing a model for a specific structured output format helps it return more refined results that are contextually aware and relevant. This step is especially important for enterprise and industry-specific applications.

Impact of Structured Outputs From LLM on Natural Language Processing Applications

Structuring the output received from an LLM significantly improves its efficiency and overall effectiveness. Organized data, whether in JSON, table, or list format, is easier for an NLP application to interpret, which helps it give more accurate and context-aware responses and leads to better automation, improved user experience, and seamless integration across industries. Here are some key NLP applications where structured LLM output can improve overall performance:

Chatbots & Virtual Assistants

For chatbots and virtual assistants, structured LLM responses make it possible to serve clear, actionable answers while maintaining the conversation flow. Instead of returning long, complex paragraphs, the response is broken into bullets and fields, which improves usability. Structured responses also help with speech-to-text processing and enable personalized responses across devices.

The example below shows how structuring LLM output improves readability and understanding:

Without Structure: "Your meeting is scheduled for 10 AM tomorrow. You have an unread email. Your next appointment is at 3 PM."

With Structured Output (JSON Example):

{

"schedule": [

{"event": "Meeting", "time": "10:00 AM"},

{"event": "Unread Email", "status": "Pending"},

{"event": "Appointment", "time": "3:00 PM"}

]

}

Example: Google Assistant & Amazon Alexa use structured output for commands, schedules, and reminders.

Content Generation & Summarization

Content generation and summarization applications produce lengthy responses such as articles, blogs, reports, and summaries, where good structure is especially valuable. A properly structured LLM response gives readers readable, categorized information.

Below you can see how a structured LLM response outperforms an unstructured one: key details can be scanned quickly, which makes reviews and comparisons more effective.

Without Structure (Raw Summary): "The HP Spectre 16 is a powerful laptop with a 16-inch display, Intel Core i7 processor, and long battery life. It is lightweight and has premium build quality."

With Structured Output (Table Format):

| Feature | HP Spectre 16 Specs |

|---|---|

| Display | 16-inch OLED, 4K resolution |

| Processor | Intel Core i7, 13th Gen |

| Battery Life | Up to 10 hours |

Example: CNET & TechRadar use structured outputs for laptop comparisons and feature breakdowns.

Sentiment Analysis & Market Research

For NLP-driven applications like sentiment analysis, structuring the LLM output makes the emotion behind a piece of text explicit and helps distinguish between positive, neutral, and negative categories. With structured responses, businesses can quickly extract insights and refine their strategies based on user sentiment.

Without Structure (Plain Text Output): "The product is great but expensive. The design is sleek, but battery life is poor. Overall, I like it but wish it were cheaper."

With Structured Output (JSON Sentiment Analysis):

{

"sentiment_score": 0.65,

"category": "Mixed",

"keywords": ["expensive", "sleek design", "poor battery life"],

"emotion": "Positive with minor dissatisfaction"

}

Example: IBM Watson analyzes social media sentiment for brands like Coca-Cola and Nike.

Healthcare & AI-Powered Medical Reports

Structured LLM responses can contribute significantly to the healthcare sector. Structuring the response allows an LLM to generate more precise and comprehensive medical reports that help doctors analyze symptoms, treatments, and patient histories efficiently.

The unstructured and structured versions below show how well-structured output helps doctors access key insights instantly, speeding up diagnosis and treatment decisions.

Without Structure (Free-Form Report): "The patient is experiencing chest pain and shortness of breath. Symptoms started three days ago. ECG shows abnormalities. Recommend immediate intervention."

With Structured Output (Table Format):

| Patient Condition | Findings |

|---|---|

| Symptoms | Chest pain, shortness of breath |

| Onset | 3 days ago |

| ECG Result | Abnormalities detected |

| Recommendation | Immediate intervention needed |

Example: IBM Watson Health uses AI to structure clinical notes and diagnosis reports.

Conclusion

Structured output from LLMs can be a game changer for NLP applications by making AI-generated responses more precise, contextually aware, and easy to interpret. For applications like chatbots, virtual assistants, sentiment analysis, and healthcare, a well-structured LLM response organized as JSON, a table, or a list can lift overall performance by improving readability and efficiency in real-world use.

Structured output not only organizes the responses but also reduces the ambiguity that could otherwise limit how well automated systems interpret them and act on them for your particular needs. As AI evolves, structured LLM output will play a key role in building a strong foundation for smarter AI solutions.

If you want to implement exceptional structuring of LLM response for your business application, then get in touch with our experts at Centrox AI, and start your journey towards growth.

Muhammad Haris Bin Naeem

Muhammad Haris Bin Naeem, CEO and Co-Founder of Centrox AI, is a visionary in AI and ML. With 30+ scalable solutions, he combines technical expertise and user-centric design to deliver impactful, innovative AI-driven advancements.
