SLM vs LLM: What is the difference between them? Which Model Has Greater Potential for Revolutionising Business AI Solutions?

Explore the difference between Small Language Models (SLM) and Large Language Models (LLM) in NLP. Discover their architecture, applications, strengths, and limitations for AI-driven businesses.

1/2/2025

artificial intelligence

14 mins

The evolution of information technology, especially the shift towards Artificial Intelligence and, in particular, Natural Language Processing (NLP), has been remarkable. Advances in NLP aim to build systems that are intelligent enough to communicate the way humans do.

This raises the question: what is the driving force behind NLP that enables human-like communication? It is made possible by the language model behind the NLP system, either a Small Language Model (SLM) or a Large Language Model (LLM). The choice between them depends on the requirements of the task your business wants to accomplish.

So, through this article on SLM vs LLM, we aim to help you understand which of the two, the Small Language Model (SLM) or the Large Language Model (LLM), is better placed to provide the most efficient solution for your particular AI-driven business idea.

What is a Small Language Model (SLM)?

A Small Language Model (SLM) is an AI model with a relatively compact architecture, so it requires fewer computational resources. SLMs are engineered to execute specific language tasks efficiently while using minimal resources, which differentiates them from their Large Language Model (LLM) counterparts.

SLM Architecture

SLMs have fewer parameters, which means that training and deploying them is simpler and faster. They perform best on specialized tasks rather than general-purpose language understanding, which makes them well suited to executing your chosen tasks within limited resources.
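To make the link between parameter count and resource needs concrete, here is a rough sketch in Python. It assumes that the memory needed just to hold a model's weights is approximately the parameter count multiplied by the bytes used per parameter. The function name and the 3-billion and 70-billion parameter counts are illustrative assumptions, not figures for any particular model, and a real deployment needs additional memory beyond the weights.

```python
def weight_memory_gb(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Approximate memory (in GB) needed to store the model weights only.

    Assumption: 2 bytes per parameter corresponds to 16-bit weights.
    Memory for activations and caches is deliberately ignored.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical parameter counts, chosen only for illustration.
small_model = weight_memory_gb(3_000_000_000)
large_model = weight_memory_gb(70_000_000_000)

print(f"small model: {small_model:.0f} GB, large model: {large_model:.0f} GB")
# prints "small model: 6 GB, large model: 140 GB"
```

Under these assumptions, the smaller model fits on far more modest hardware, which is the practical meaning of "fewer parameters".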

Categories of SLM; With Its Practical Implementation in Industry

SLMs can be categorized into further types based on architecture, purpose, and performance. So, let's delve further into SLMs by discussing these types and the specialized functionality each one offers.

Rule-Based Models:

As the name suggests, these models work on predefined linguistic rules for language processing. Rather than learning from data, they rely on explicitly programmed rules to interpret the text and generate a response accordingly.

Example: Early chatbots used if-else rules to respond to user inputs. Like: ELIZA at MIT, and Grammar Checkers in Microsoft Word.
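The if-else idea behind rule-based chatbots can be sketched in a few lines of Python. This is a minimal illustration, not how ELIZA or any real product was implemented; the rules, the responses, and the `reply` function are hypothetical.

```python
import re

# Hypothetical rules: each pairs a pattern with a canned response.
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE),
     "Hello! How can I help you today?"),
    (re.compile(r"\brefund\b", re.IGNORECASE),
     "To request a refund, please share your order number."),
    (re.compile(r"\b(bye|goodbye)\b", re.IGNORECASE),
     "Goodbye! Have a great day."),
]
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def reply(user_input: str) -> str:
    """Return the response of the first rule whose pattern matches."""
    for pattern, response in RULES:
        if pattern.search(user_input):
            return response
    # No rule matched: nothing was learned from data, so the bot cannot improvise.
    return FALLBACK


print(reply("Hello there"))
print(reply("I want a refund"))
print(reply("What is the weather?"))
```

The last call falls through to the fallback message, which shows the main limitation of this approach: the bot only handles inputs that someone wrote a rule for.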

Statistical Models:

The statistical type of SLM uses probabilistic methods to predict the next word in a sequence in order to understand or generate text. It is built on the n-gram concept, where n is the length of the word sequence being modeled: the next word is predicted from the n-1 words that precede it.

Example: Using a bigram (n=2) or trigram (n=3) model for text prediction. Like: Google Search (2000s), and T9 Predictive Text in Nokia Phones.
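A bigram model can be sketched in Python by counting which word follows which. This is a minimal illustration on a toy corpus; the function names and the corpus are assumptions made for the example, and real systems use far larger corpora plus smoothing for unseen word pairs.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts


def predict_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def next_word_probability(counts: dict, word: str, candidate: str) -> float:
    """Estimate P(candidate | word) as a relative frequency."""
    followers = counts.get(word.lower())
    if not followers:
        return 0.0
    return followers[candidate.lower()] / sum(followers.values())


corpus = "the cat sat on the mat and the cat slept"  # toy corpus for illustration
model = train_bigram(corpus)

print(predict_next(model, "the"))                  # prints "cat"
print(next_word_probability(model, "the", "cat"))  # 2 of the 3 words after "the"
```

In this corpus "the" is followed by "cat" twice and "mat" once, so the model predicts "cat" with an estimated probability of 2/3.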

Neural Network Models:

The neural network type of SLM uses feedforward or Recurrent Neural Networks (RNN) for language processing tasks. These models learn from a dataset and improve their performance as training progresses.

Example: LSTM (Long Short-Term Memory) networks are specialised to predict a word by considering a number of preceding words in the sequence. Like: Phi-3, and MiniCPM-Llama3-V 2.5 (note that these two recent compact models are transformer-based rather than recurrent).

Task-Specific Models:

The task-specific type of SLM offers a tailored way to execute a particular task, such as text recognition or summarization. This is possible because these models are fine-tuned on a specific dataset for that specialized task.

Example: Sentiment analysis that classifies feedback as positive or negative uses this type of model. Like: DeepSeek-Coder-V2, and Gemma 2.

Applications of SLM for Business

SLMs have many applications with far-reaching benefits, especially for businesses planning to integrate AI into their solutions, helping them perform better and use their resources in the best possible way. Below are some applications where implementing an SLM can help a business flourish:

Customer Support Automation

Customer support chatbots built on SLMs have great potential to enhance your business performance. They automate repetitive tasks such as answering customer queries, troubleshooting issues, and guiding customers through your products, services and processes. This not only saves time, effort and energy, but also significantly cuts the cost spent on resources.

Content Generation

If your business revolves around content generation, whether for blog posts, product descriptions, news, articles, or marketing material, you are always looking for a solution that can deliver high-quality content at a rapid pace. An SLM-powered system can meet this need by quickly generating coherent, concise text that matches your brand voice, which can reduce your content costs.

Language Translation

SLMs can facilitate real-time translation, enabling businesses to expand their network and reach around the world. By helping to overcome language barriers, they make it easier to collaborate with people who speak different languages and give businesses an opportunity to enhance their global presence.

Personalized Marketing

As SLMs can execute specialised tasks, they can help identify user preferences and customise a business's marketing strategy accordingly by generating more targeted and engaging content, which can ultimately increase conversion rates.

Data Extraction

An SLM-based solution for data extraction can enhance business productivity and revenue generation, as it can extract crucial, relevant information from unstructured data sources such as emails, reports, and webpages. This saves businesses the extra effort and time of manual data entry when making data-driven decisions.

Strengths and Limitations of SLM

While an SLM may be the right solution for bringing your idea to reality, it's important to understand its pros and cons before committing to it. We discuss them in detail below:

Strengths

- Efficiency and Speed:

In this fast-paced world, AI-driven solutions need to be quick and efficient. SLMs can generate text in real time, which is one of their most valuable qualities.

- Lower Computational Requirements:

As SLMs have a smaller architecture, they require fewer resources such as power and memory, which makes them accessible to businesses with restricted resources.

- Cost-Effectiveness:

The lower computational requirements of SLMs also make them cost-effective, as they do not need heavy, expensive hardware to run.

- Simplicity and Interpretability:

The smaller architecture of SLMs makes them simpler and easier to understand or interpret, which enhances the transparency of AI applications.

- Task-Specific Optimization:

One of the most important advantages SLMs offer is improved task-specific performance in some applications, without the complexity involved in larger models.

Limitations

- Limited Contextual Understanding:

One concerning drawback of SLMs is their limited ability to understand context, because they are trained on smaller datasets. This may result in compromised outputs for complex contexts.

- Lower Accuracy and Performance:

Because they are trained on limited data, SLMs sometimes exhibit lower accuracy, especially when a larger context is involved.

- Reduced Capability for Generalization:

As SLMs are trained on smaller and more specific data, they tend to perform less well across diverse tasks.

- Potential for Bias:

Like larger models, SLMs inherit biases from their training data, which can raise ethical concerns when they are deployed in a real environment.

What is a Large Language Model (LLM)?

A Large Language Model (LLM) is another implementation of Artificial Intelligence that has driven advances in NLP. An LLM is a deep learning model that can perform tasks like language generation, translation and summarization effectively. It is trained on a large volume of data, which enables it to handle diverse tasks and makes it more likely to generate coherent text that resembles human communication.

LLM Architecture

LLMs have a huge number of parameters, which gives them the capability to capture complex language patterns. Because they are trained on large datasets, they can handle diverse tasks without being specially trained for each one. However, to get improved accuracy on specific tasks, it is better to fine-tune LLMs.

Categories of LLM; With Its Practical Implementation In Industry

LLMs fall into several categories depending on architecture, functionality and implementation, and each category has the potential to perform well on the tasks it suits. Below we give an overview of these categories:

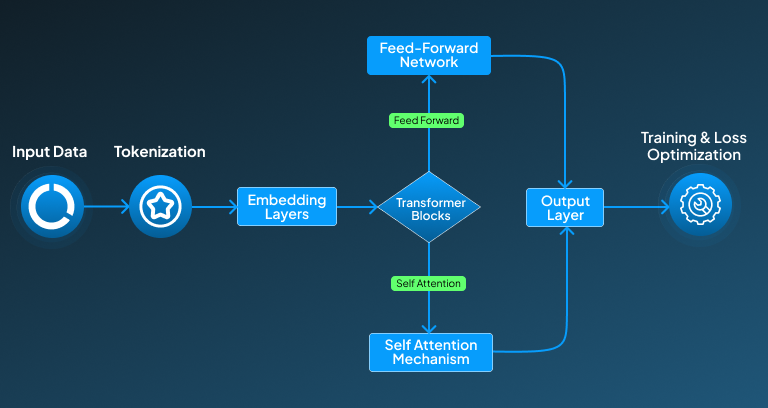

Transformer-based LLMs

Transformer-based LLMs incorporate the transformer architecture, which revolutionized Natural Language Processing by capturing long-range patterns. The architecture combines several components, including the self-attention mechanism, multi-head attention, positional encoding, feed-forward neural networks, and layer normalization, in order to produce coherent, human-like text.

Example: GPT series, BERT, and XLNet.
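Of the transformer components listed above, self-attention is the one that lets every token look at every other token. The sketch below shows scaled dot-product self-attention in plain Python under a strong simplification: queries, keys and values are all taken to be the input vectors themselves, whereas a real transformer applies learned projection matrices and adds multi-head attention, positional encoding and layer normalization. The function names and the toy vectors are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtracted for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dimension).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        outputs.append(
            [sum(w * value[j] for w, value in zip(weights, vectors)) for j in range(dim)]
        )
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-dimensional token vectors
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Because each output mixes information from every position in a single step, distance between tokens does not weaken the connection, which is how the architecture captures long-range patterns.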

Recurrent Neural Network (RNN)-based LLMs

Recurrent Neural Network (RNN)-based language models were introduced prior to transformer-based LLMs to handle NLP. In this architecture, the order of the data is of prime importance for generating the desired output text. However, these models struggled to retain long-range dependencies, which gave rise to the need for another architecture that could overcome this limitation.

Example: LSTM and GRU
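The difficulty with long dependencies can be illustrated with a toy recurrent unit in Python. This is a sketch of a plain one-unit RNN, not an LSTM or GRU, and the weights are hand-picked assumptions rather than trained values. The unit receives a signal at the first step and zeros afterwards, so we can watch how much of that first input survives in the hidden state.

```python
import math


def run_rnn(inputs, w_input=1.0, w_hidden=0.5):
    """Run a one-unit RNN: h_t = tanh(w_input * x_t + w_hidden * h_(t-1)).

    Returns the hidden state after each step. The default weights are
    illustrative assumptions, not learned parameters.
    """
    hidden = 0.0
    states = []
    for x in inputs:
        hidden = math.tanh(w_input * x + w_hidden * hidden)
        states.append(hidden)
    return states


# A signal at the first step, followed by nine steps with no input.
states = run_rnn([1.0] + [0.0] * 9)
for step, value in enumerate(states, start=1):
    print(f"step {step}: hidden state = {value:.4f}")
```

With these weights the hidden state shrinks at every step, so after ten steps almost nothing of the first input remains. Information has to pass through every intermediate step to reach a later one, which is why long sequences are hard for this kind of architecture.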

Multimodal LLM

Multimodal LLMs introduce an architecture that enables the model to learn from data across different modalities: text, video, image and audio. Access to such diverse and rich data results in much more comprehensive outputs.

Fine-tuned LLMs

Fine-tuned LLMs are pretrained models that are further trained on a narrower, domain-specific dataset to perform specific tasks. This helps the model adapt its general knowledge so that it executes the specialized task with enhanced expertise.

Example: BioBERT and Legal BERT

Hybrid Model LLMs

The hybrid model of LLMs integrates multiple models in order to combine the strengths each one offers, so that the limitations of one model can be offset by another. This approach aims for improved quality and accuracy.

Example: VisualBERT

Applications of LLM for Businesses

There are many untapped areas in the business domain where introducing AI, especially through LLMs, can bring about a revolution. Integrating LLMs can bring convenience to several business functions, including the following:

Customer Support:

LLMs can enhance customer support. Being trained on large datasets helps them capture complex underlying patterns, whether for text generation, summarization or sentiment analysis.

Automated Reporting:

Automated reporting implemented through LLMs has great potential to enhance your business productivity. It provides an effective way to collect data inputs and generate important reports from them, saving a lot of time and effort.

Training and Development:

Introducing LLMs into training and development can significantly support your business growth by providing automated tools that help upskill your employees or students.

Risk Management:

LLMs can help your business by analysing the risks that may occur, so that you can plan ahead and avoid huge losses.

Innovation and Product Development:

LLMs can help your business progress. Trained on large volumes of data, they learn complex patterns that help in understanding future market trends and needs, and in making predictions that can spark innovative ideas.

Strengths and Limitations of LLM

LLMs hold a strong place because the advantages they offer have proved extremely helpful. Below we discuss their strengths and limitations in detail to give you a better picture:

Strengths:

- High-Quality Text Generation:

One of the biggest advantages that makes LLMs a top choice is the quality of the text they generate, which supports more focused content creation and customer service.

- Versatility:

As LLMs are trained on a wide range of data, they can address diverse problems efficiently, which makes them highly versatile.

- Understanding Context:

LLMs can capture deep patterns, which helps them better understand the context being communicated.

- Transfer Learning:

Organizations can build on pre-trained LLMs to handle complex language contexts without needing an extensive dataset to train a model from scratch.

Limitations:

- Resource Intensive:

As LLMs are trained on large datasets, they are expensive to build, and deploying such models requires significant computational resources to produce the desired output.

- Bias and Ethical Concerns:

LLMs tend to unintentionally learn and amplify the biases present in their training data, which raises ethical and reliability concerns when you are considering deploying them in sensitive applications.

- Limited Understanding:

Although the output generated by an LLM might appear intelligent, it may sometimes lack real comprehension, as the model works from patterns in data rather than understanding the exact meaning intended. This can lead to misleading outputs.

- Dependence on Data Quality:

LLMs are highly data-dependent, so misleading or poor-quality data can result in seriously undesired outputs.

- Inflexibility to Changes:

One of the concerning limitations of LLMs is that once they are trained on specific data, they cannot adapt well to new data. To keep them up to date, they have to be retrained on the new dataset, which consumes time and effort.

SLM or LLM: Which one is better for your business?

Both SLMs and LLMs offer great functionality, but each performs best for specific use cases. If you are looking to integrate AI for dedicated, small-scale tasks that need a focused, rapid approach without becoming computationally expensive, then an SLM architecture is the recommended choice for your business idea.

However, if your business idea demands an architecture that delivers high quality, can adapt to new approaches through fine-tuning, and also provides scalability, then LLMs are the ideal choice for achieving your expected results. Keep in mind that the same qualities make LLMs computationally expensive too.

Future of SLM and LLM in Business World

In the future, there is potential for hybrid models that combine the strengths of both SLMs and LLMs to deliver an optimized, reliable solution without the performance compromises that the limitations of each architecture can cause. So, in the future, you may see great innovations made possible at minimal cost.

Final words

Both SLMs and LLMs have the potential to revolutionize the business landscape. The choice between them should be based on your available resources and the idea you want to deliver. While an SLM is the preferable choice for small-scale business requirements with limited resources, LLMs offer the architecture and capacity to handle computationally heavy ideas. It is therefore safe to say that progress and innovation in the field of AI hold excellent prospects for your business.

If you are still unsure which one to choose to implement your idea, feel free to reach out to our experienced AI experts at Centrox AI to bring your specific AI-based business solution into reality.

Muhammad Harris

Muhammad Harris, CTO of Centrox AI, is a visionary leader in AI and ML with 25+ impactful solutions across health, finance, computer vision, and more. Committed to ethical and safe AI, he drives innovation by optimizing technologies for quality.
