Mastering MLOps: Process, Best Practices, Benefits, and Challenges.

Build your understanding of MLOps, its processes, best practices, and challenges so you can plan and implement a more intelligent solution.

7/18/2025

machine learning

12 mins

AI, in combination with machine learning, is producing new innovations every day. As both fields push their boundaries, businesses are getting applications that meet increasingly advanced needs. MLOps is an important part of this picture: it manages the machine learning development lifecycle so that models behave as expected.

MLOps provides a set of practices, tools, and processes that streamline the machine learning lifecycle. It covers data preparation, model development, and deployment. It also covers monitoring and maintaining the solution in a real business environment so that it keeps operating efficiently.

In this article, we walk you through the concept of MLOps. We cover what it is, why it matters, and how the process works. We also cover the best practices, benefits, and common challenges you may encounter along the way, so you can build a reliable and smart solution for your specific business application.

What is MLOps?

MLOps, or Machine Learning Operations, is the discipline of running machine learning models in production so that they deliver the required performance. It combines machine learning, DevOps, and data engineering to automate and manage the end-to-end machine learning lifecycle. MLOps covers building, testing, deploying, monitoring, and maintaining ML models to make sure they keep meeting expected performance over time. This is much like the way regular software updates keep apps and websites working well.

Importance of MLOps

MLOps is an integral component because it bridges the gap between developing a machine learning model and deploying it in real-world scenarios. Its most valuable contributions include helping businesses scale their ML systems and reducing time to market. It also ensures that models in production are secure, accurate, and reliable. As Chip Huyen (author of “Designing Machine Learning Systems”) puts it:

“MLOps is not optional anymore. It’s a must for any company wanting to scale ML successfully and sustainably.”

In other words, without MLOps, ML models may fail to reach or sustain real-world operation. The causes include inconsistent workflows, poor collaboration, and a lack of monitoring.

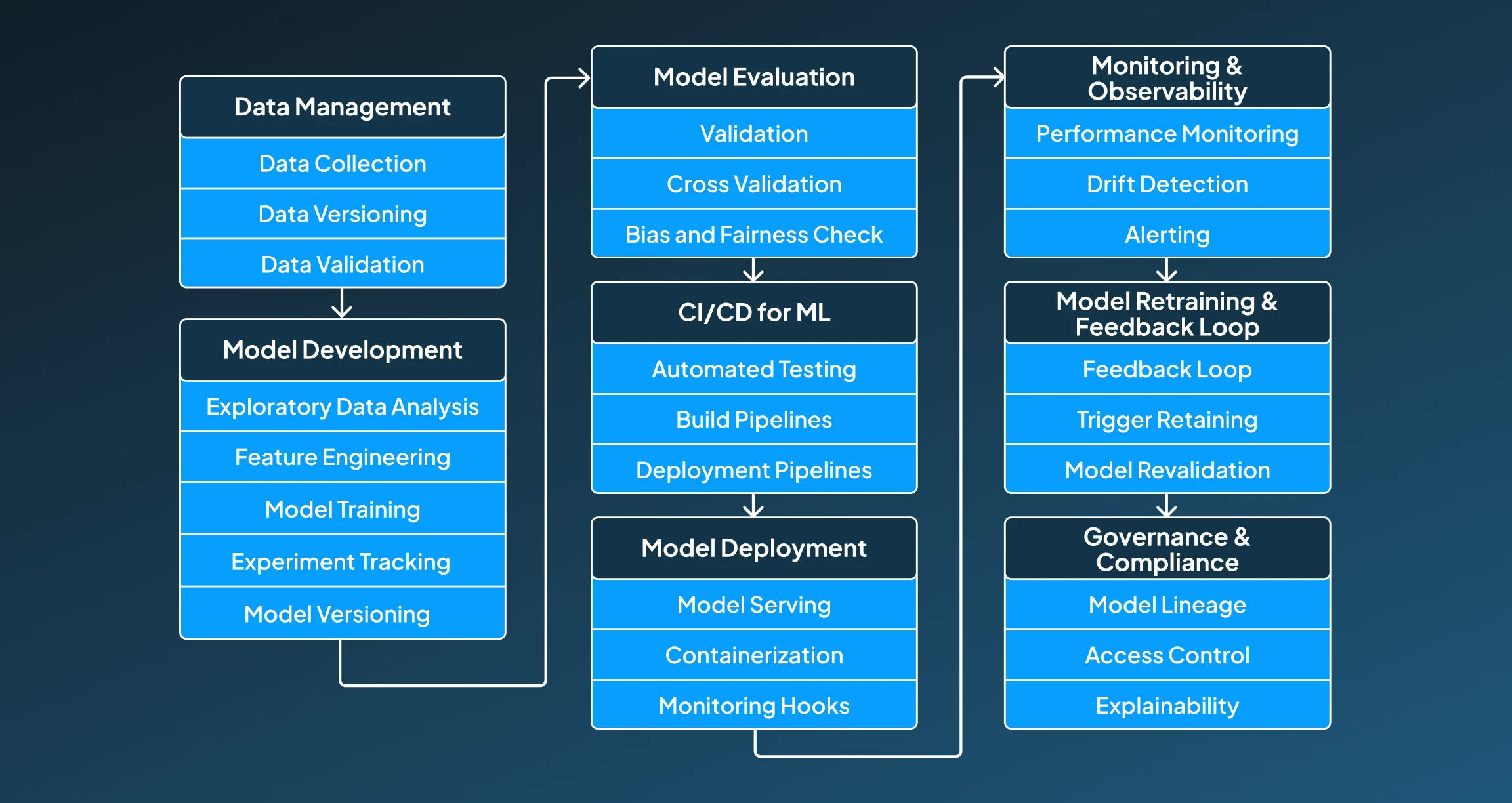

Procedural Breakdown of MLOps

MLOps delivers the required performance through a series of processes that work together behind the scenes. To understand MLOps properly, it helps to break it down into these processes. The procedural breakdown below will help you build your initial understanding:

1. Data Management

Data is the backbone of any machine learning model, so data management plays a central role in MLOps. It involves the following steps:

- Data Collection: Gather data from multiple sources (databases, APIs, logs, sensors, etc.).

- Data Versioning: Track changes in datasets using tools like DVC or Delta Lake.

- Data Validation: Ensure data quality using automated checks for schema, nulls, outliers, etc.
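As a rough illustration of the validation and versioning steps, here is a minimal sketch in plain Python. The schema, the valid ranges, and the function names `validate_rows` and `dataset_fingerprint` are hypothetical. The range check is a simple stand-in for outlier detection. The content hash only mimics the content-addressing idea behind versioning tools like DVC and does not reproduce their actual behavior.

```python
import hashlib
import json

# Hypothetical schema and valid ranges for an example tabular dataset.
EXPECTED_SCHEMA = {"age": int, "income": float, "country": str}
VALID_RANGES = {"age": (0, 120), "income": (0.0, 10_000_000.0)}


def validate_rows(rows):
    """Return a list of problems found in `rows` (a list of dicts); empty means valid."""
    problems = []
    for i, row in enumerate(rows):
        # Schema check: every row must have exactly the expected columns.
        if set(row) != set(EXPECTED_SCHEMA):
            problems.append(f"row {i}: unexpected columns {sorted(row)}")
            continue
        for col, expected_type in EXPECTED_SCHEMA.items():
            value = row[col]
            # Null check.
            if value is None:
                problems.append(f"row {i}: null in {col!r}")
                continue
            # Type check.
            if not isinstance(value, expected_type):
                problems.append(f"row {i}: {col!r} has type {type(value).__name__}")
                continue
            # Range check, a simple stand-in for outlier detection.
            if col in VALID_RANGES:
                low, high = VALID_RANGES[col]
                if not low <= value <= high:
                    problems.append(f"row {i}: {col!r}={value} outside [{low}, {high}]")
    return problems


def dataset_fingerprint(rows):
    """Content hash of the dataset: any change to the data changes the version id."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


rows = [
    {"age": 34, "income": 52000.0, "country": "PK"},
    {"age": None, "income": 61000.0, "country": "US"},
    {"age": 250, "income": 48000.0, "country": "DE"},
]
print(validate_rows(rows))
print(dataset_fingerprint(rows))
```

The fingerprint changes whenever any value changes. You can store it alongside a trained model to record exactly which data version the model was trained on.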

2. Model Development

After data management, the next core process in MLOps is model development. It consists of exploratory analysis, feature engineering, training, experiment tracking, and versioning. Together these produce a base model that handles the assigned task.

- Exploratory Data Analysis (EDA): Understand data distributions, patterns, and correlations.

- Feature Engineering: Create meaningful features that improve model performance.

- Model Training: Train models using frameworks like TensorFlow, PyTorch, or Scikit-learn.

- Experiment Tracking: Use tools like MLflow or Weights & Biases to log metrics, parameters, and results.

- Model Versioning: Save and version trained models for reproducibility.
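Model versioning can be sketched the same way. This minimal example assumes a hypothetical file-based registry. The names `register_model` and `load_model` are illustrative and do not belong to any real library API. The registry derives each version id from the model's contents and stores training metadata next to it. Tools like MLflow provide a managed model registry for the same purpose. The weights, data version, and accuracy below are placeholder values.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def register_model(registry_dir, name, params, metadata):
    """Save `params` under a content-derived version id and return that id.

    Hypothetical layout: <registry_dir>/<name>/<version>.json
    """
    payload = {"params": params, "metadata": metadata}
    blob = json.dumps(payload, sort_keys=True).encode("utf-8")
    version = hashlib.sha256(blob).hexdigest()[:12]  # same content -> same version
    path = Path(registry_dir) / name / f"{version}.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_bytes(blob)
    return version


def load_model(registry_dir, name, version):
    """Load a registered version, e.g. to reproduce a result or roll back."""
    path = Path(registry_dir) / name / f"{version}.json"
    return json.loads(path.read_text())


with tempfile.TemporaryDirectory() as registry:
    v1 = register_model(
        registry, "churn",
        {"weights": [0.4, -1.2], "bias": 0.1},          # placeholder parameters
        {"data_version": "a1b2c3", "accuracy": 0.87},   # placeholder metadata
    )
    restored = load_model(registry, "churn", v1)
    print(v1, restored["params"])
```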

3. Model Evaluation

Once model development is complete, the prepared model is assessed through model evaluation. This process checks that the model meets the desired requirements and screens it for potential biases. It involves the following steps:

- Validation: Evaluate model performance using metrics (accuracy, precision, recall, RMSE, etc.).

- Cross-Validation: Ensure model generalizability by testing on multiple data splits.

- Bias & Fairness Checks: Analyze for fairness and mitigate biases.
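A small, dependency-free sketch can illustrate the validation and cross-validation steps above. The helper names are hypothetical. In practice, libraries such as scikit-learn provide these metrics and a `KFold` splitter. Unlike this simplified version, `KFold` can also shuffle the data.

```python
import math


def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall for a binary classifier."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def rmse(y_true, y_pred):
    """Root mean squared error for a regression model."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs without shuffling; each sample is tested once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size


print(precision_recall([1, 0, 1, 1, 0], [1, 1, 0, 1, 0]))
print(rmse([3.0, 5.0], [2.0, 7.0]))
print([test for _, test in k_fold_indices(5, 2)])
```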

4. CI/CD for ML (Continuous Integration/Continuous Deployment)

If the model passes the evaluation benchmarks, CI/CD for machine learning automates the workflow from code and data changes through model testing, packaging, and deployment. This keeps ML systems reliable, scalable, and consistent across updates without much manual effort.

- Automated Testing: Unit tests for data pipelines, model logic, and performance checks.

- Build Pipelines: Use tools like Jenkins, GitHub Actions, or GitLab CI to automate code and model packaging.

- Deployment Pipelines: Automate deployment using tools like Kubeflow, MLflow, or SageMaker.
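One common way to let a CI pipeline act on evaluation results is a quality gate. This is a script that compares the model's metrics to agreed thresholds and exits with a non-zero code on failure. CI tools such as Jenkins, GitHub Actions, or GitLab CI treat a non-zero exit as a failed job. The sketch below assumes hypothetical thresholds and metric names. Real values depend on the use case.

```python
# Hypothetical thresholds a pipeline might enforce before packaging a model.
THRESHOLDS = {"accuracy": 0.85, "recall": 0.70}
MAX_LATENCY_MS = 50.0


def quality_gate(metrics):
    """Return a list of failed checks; an empty list means the model may ship."""
    failures = []
    for name, minimum in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None or value < minimum:
            failures.append(f"{name}={value} below {minimum}")
    latency = metrics.get("p95_latency_ms")
    if latency is None or latency > MAX_LATENCY_MS:
        failures.append(f"p95_latency_ms={latency} above {MAX_LATENCY_MS}")
    return failures


def main(metrics):
    """Print failures and return a process exit code (0 = pass, 1 = fail)."""
    failures = quality_gate(metrics)
    for failure in failures:
        print("FAIL:", failure)
    return 1 if failures else 0


# In CI, the script would end with `raise SystemExit(main(metrics))`,
# with the metrics read from the evaluation step's output.
exit_code = main({"accuracy": 0.88, "recall": 0.65, "p95_latency_ms": 42.0})
print("exit code:", exit_code)
```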

5. Model Deployment

The next critical stage in MLOps is model deployment, which integrates the developed machine learning model into the environment where it is expected to operate. This stage covers the following steps:

- Model Serving: Deploy models as REST APIs or batch prediction services using Flask, FastAPI, or TorchServe.

- Containerization: Use Docker and Kubernetes to manage scalable, portable deployments.

- Monitoring Hooks: Integrate monitoring tools for logging, latency, errors, and drift.
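Here is a minimal sketch of what model serving as a REST API involves. It uses only Python's standard-library WSGI support, so it runs without Flask or FastAPI installed. The linear "model", its weights, and the `/predict` route with a `{"features": [...]}` payload are assumptions for illustration. A production service would load a trained artifact and typically run behind a framework and an application server.

```python
import io
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical stand-in for a trained model: a fixed linear scorer.
WEIGHTS = [0.5, -0.25]
BIAS = 0.1


def predict(features):
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def app(environ, start_response):
    """Minimal WSGI endpoint: POST /predict with a JSON body {"features": [...]}."""
    json_header = [("Content-Type", "application/json")]
    if environ["REQUEST_METHOD"] != "POST" or environ["PATH_INFO"] != "/predict":
        start_response("404 Not Found", json_header)
        return [b'{"error": "not found"}']
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length))
        features = [float(x) for x in payload["features"]]
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing "features" key, or non-numeric values.
        start_response("400 Bad Request", json_header)
        return [b'{"error": "invalid input"}']
    body = json.dumps({"prediction": predict(features)}).encode("utf-8")
    start_response("200 OK", json_header)
    return [body]


def call(method, path, payload=None):
    """Exercise the app in-process (no network) and return (status, parsed JSON)."""
    body = b"" if payload is None else json.dumps(payload).encode("utf-8")
    environ = {}
    setup_testing_defaults(environ)
    environ.update({"REQUEST_METHOD": method, "PATH_INFO": path,
                    "CONTENT_LENGTH": str(len(body)), "wsgi.input": io.BytesIO(body)})
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    response = b"".join(app(environ, start_response))
    return captured["status"], json.loads(response)


print(call("POST", "/predict", {"features": [2.0, 4.0]}))
```

To serve the app over HTTP locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` is enough. The `call` helper only exists to exercise the endpoint in-process.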

6. Monitoring & Observability

After deployment, monitoring and observability are critical. They ensure that the ML system continues to meet its requirements, and they raise alerts when its behavior or performance fluctuates.

- Performance Monitoring: Track live model metrics (e.g., accuracy, latency).

- Drift Detection: Identify when input data or predictions deviate from training data.

- Alerting: Set up alerts for sudden changes in behavior or performance.
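Drift detection can be made concrete with the Population Stability Index (PSI), computed as the sum over bins of (a − e) · ln(a / e). Here e is a bin's share of the training data and a is its share of the live data. PSI is one common way to compare a feature's live distribution with its training distribution. The article does not prescribe a specific method, so treat this as one option among several; the Kolmogorov-Smirnov test is another. The bin count, the `eps` smoothing, the 0.2 alert threshold, and the `session_length` feature are assumptions. A frequently cited rule of thumb treats PSI above roughly 0.2 to 0.25 as significant drift.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # guard against a constant training feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the training range; out-of-range values go to edge bins.
            idx = int((v - low) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


DRIFT_THRESHOLD = 0.2  # assumed alerting threshold


def check_drift(feature, train_values, live_values):
    """Compute PSI for one feature and print an alert if it exceeds the threshold."""
    score = psi(train_values, live_values)
    if score > DRIFT_THRESHOLD:
        print(f"ALERT: drift on {feature!r}, PSI={score:.3f}")
    return score


train = [i / 100 for i in range(100)]        # training distribution
live = [0.5 + i / 200 for i in range(100)]   # shifted live distribution
check_drift("session_length", train, live)
```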

7. Model Retraining & Feedback Loop

A feedback loop gives the MLOps process a mechanism for continuously improving the ML system. Model retraining involves the following steps, which help the system reach and sustain its performance goals:

- Feedback Loop: Use production data or user feedback to improve models.

- Trigger Retraining: Automate retraining based on data drift or performance drop.

- Model Revalidation: Evaluate the updated model before redeploying.
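The retraining trigger and revalidation steps can be expressed as two small decision functions. This sketch assumes a drift score such as PSI and an accuracy measured on labelled production feedback. The thresholds, the `min_gain` margin, and the accuracy figures are hypothetical placeholders. The actual training job is left as a comment.

```python
from dataclasses import dataclass


@dataclass
class MonitoringSnapshot:
    drift_score: float    # e.g. PSI on key input features
    live_accuracy: float  # accuracy on labelled production feedback


# Hypothetical policy values; real thresholds depend on the use case.
DRIFT_LIMIT = 0.2
ACCURACY_FLOOR = 0.80


def should_retrain(snapshot):
    """Trigger retraining on data drift or on a performance drop."""
    return snapshot.drift_score > DRIFT_LIMIT or snapshot.live_accuracy < ACCURACY_FLOOR


def promote_if_better(champion_acc, challenger_acc, min_gain=0.01):
    """Revalidation gate: redeploy only if the retrained model clearly beats the current one.

    Both accuracies should be measured on the same fresh validation set.
    """
    return challenger_acc >= champion_acc + min_gain


snapshot = MonitoringSnapshot(drift_score=0.05, live_accuracy=0.76)
if should_retrain(snapshot):
    # A real pipeline would launch a training job here, e.g. through an orchestrator.
    champion_acc, challenger_acc = 0.77, 0.84  # placeholder evaluation results
    print("promote" if promote_if_better(champion_acc, challenger_acc) else "keep current model")
```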

8. Governance & Compliance

The final step, and one of the most important, is to ensure that the ML system complies with policies and regulations. This final process involves the following steps:

- Model Lineage: Track every step of the ML lifecycle for auditability.

- Access Control: Restrict model access using role-based permissions.

- Explainability: Use tools like SHAP or LIME to explain model decisions for regulators or users.
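For the governance steps, the sketch below pairs a role-based permission check with an append-only lineage log. Each log entry stores the hash of the previous entry, which makes later tampering detectable. The roles, actions, users, and function names are hypothetical. Production systems would rely on their platform's access-control and audit features rather than an in-memory list.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role -> allowed actions mapping.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_model", "register_model"},
    "ml_engineer": {"read_model", "register_model", "deploy_model"},
    "auditor": {"read_model", "read_lineage"},
}

lineage_log = []  # append-only audit trail (in memory for this sketch)


def authorize(role, action):
    return action in ROLE_PERMISSIONS.get(role, set())


def entry_hash(entry):
    """Hash every field except the stored hash itself."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def record_step(user, role, action, details):
    """Enforce access control, then append a lineage entry linked to the previous one."""
    if not authorize(role, action):
        raise PermissionError(f"role {role!r} may not {action!r}")
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "action": action, "details": details,
        "prev": lineage_log[-1]["hash"] if lineage_log else "",
    }
    entry["hash"] = entry_hash(entry)
    lineage_log.append(entry)
    return entry


def verify_chain(log):
    """True if every entry's hash and its link to the previous entry are intact."""
    prev = ""
    for entry in log:
        if entry["prev"] != prev or entry_hash(entry) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


record_step("alice", "data_scientist", "register_model", {"model": "churn", "data_version": "a1b2c3"})
record_step("bob", "ml_engineer", "deploy_model", {"model": "churn", "version": "v2"})
try:
    record_step("alice", "data_scientist", "deploy_model", {"model": "churn"})
except PermissionError as err:
    print("denied:", err)
print(verify_chain(lineage_log))
```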

Best Practices for MLOps

An ML solution should be built to stay ahead of future needs and to scale, while maintaining reliability and performance. The following best practices for MLOps will help you plan and implement a smarter solution.

1. Automate the Entire ML Lifecycle

CI/CD pipelines let us automate the entire ML lifecycle, from model training through testing, deployment, and monitoring. Fewer manual steps mean fewer errors and more repeatable results.

2. Version Everything

Another important aspect that can contribute to ensuring better performance is versioning datasets, code, models, and configurations. By using tools like Git, DVC, and MLflow, we can track changes and enable smooth rollback.

3. Keep Development and Production Environments Consistent

Containerization tools like Docker help keep development and production environments consistent. Defining dependencies with tools like Conda or virtualenv adds further consistency at the package level.

4. Monitor Models in Production

Tracking real-time metrics such as accuracy, latency, and error rates helps ensure that performance stays consistent. Drift detection shows when a model starts to degrade over time.

5. Establish Clear Retraining Triggers

It is critical to define thresholds that trigger retraining, such as a level of data drift or a drop below a target accuracy. Automating the retraining itself with workflow tools like Airflow, Kubeflow, or SageMaker Pipelines can also make a big difference to the ML system.

6. Ensure Reproducibility

Log all hyperparameters, code versions, dataset versions, and training outcomes so that any result can be reproduced later. Experiment tracking tools like Weights & Biases, MLflow, or Comet can do this for you.
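As an illustration of what "reproducible" means in practice, this sketch records a run's configuration (including its random seed), a code version, a dataset fingerprint, and the result. It then reruns the experiment to confirm the result is identical. The toy `train` function, the `git:abc1234` code version, and the data are hypothetical stand-ins. Frameworks such as NumPy and PyTorch have their own seeding calls that a real run would also need to set. A tracker like MLflow, Weights & Biases, or Comet would store the record.

```python
import hashlib
import json
import random


def train(config, data):
    """Toy 'training' run: a seeded random search for a decision threshold."""
    rng = random.Random(config["seed"])  # isolated generator, fully determined by the seed
    best_threshold, best_acc = None, -1.0
    for _ in range(config["n_trials"]):
        t = rng.uniform(0.0, 1.0)
        acc = sum((x > t) == y for x, y in data) / len(data)
        if acc > best_acc:
            best_threshold, best_acc = t, acc
    return {"threshold": best_threshold, "accuracy": best_acc}


def run_record(config, data, code_version):
    """Everything needed to rerun the experiment, plus its outcome."""
    data_version = hashlib.sha256(json.dumps(data).encode("utf-8")).hexdigest()[:12]
    return {"config": config, "code_version": code_version,
            "data_version": data_version, "result": train(config, data)}


data = [(0.1, False), (0.35, False), (0.6, True), (0.8, True), (0.45, True)]
config = {"seed": 42, "n_trials": 20}
first = run_record(config, data, code_version="git:abc1234")   # hypothetical commit id
second = run_record(config, data, code_version="git:abc1234")
print(first["result"] == second["result"])
```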

7. Test Extensively

Write unit tests for data preprocessing, feature engineering, and model logic, and include integration and regression tests in ML pipelines. This is one of the surest ways to help your ML solution perform well.
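Here is what a unit test for a feature engineering step might look like with Python's built-in `unittest` module. The `scale_min_max` function is a hypothetical example step. The tests check its output range, a known result, a constant-column edge case, and the behavior on empty input.

```python
import unittest


def scale_min_max(values):
    """Feature engineering step: rescale a numeric column to [0, 1]."""
    low, high = min(values), max(values)  # raises ValueError on empty input
    if high == low:
        return [0.0 for _ in values]  # constant column: avoid division by zero
    return [(v - low) / (high - low) for v in values]


class TestScaleMinMax(unittest.TestCase):
    def test_range_is_zero_to_one(self):
        scaled = scale_min_max([3.0, 7.0, 5.0])
        self.assertEqual(min(scaled), 0.0)
        self.assertEqual(max(scaled), 1.0)

    def test_known_values(self):
        self.assertEqual(scale_min_max([3.0, 7.0, 5.0]), [0.0, 1.0, 0.5])

    def test_constant_column(self):
        self.assertEqual(scale_min_max([2.0, 2.0]), [0.0, 0.0])

    def test_empty_input_raises(self):
        with self.assertRaises(ValueError):
            scale_min_max([])
```

Such tests can run with `python -m unittest` as part of the CI pipeline. A broken preprocessing change then fails the build before it reaches training.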

8. Implement Robust Data Validation

Validate schemas, data types, null values, ranges, and distributions before data enters your pipelines, so that bad data is caught early. Tools like Great Expectations and TensorFlow Data Validation can handle this work.

9. Collaborate Across Teams

Creating workflows that support collaboration between data scientists, ML engineers, and DevOps teams is one of the best practices for MLOps. It helps produce a solution that aligns well with expectations.

10. Focus on Governance and Compliance

Another valuable practice for MLOps is ensuring that models are explainable and meet regulatory requirements (e.g., GDPR, HIPAA).

11. Design for Scalability

It is important to build MLOps pipelines that scale with data volume and model complexity. Distributed training and inference can be used for this purpose.

12. Use Modular and Reusable Components

Breaking pipelines into modular components lets us reuse them across ML projects. Following software engineering best practices such as DRY (Don't Repeat Yourself) leads to a smarter, more maintainable system.
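Here is a minimal sketch of modular pipeline components. Each step is a small, reusable function produced by a factory, and `pipeline` composes them. The step names and the `spend_ratio` feature are hypothetical. Orchestration tools like Kubeflow or Airflow apply the same idea at the level of pipeline tasks.

```python
from typing import Callable, List

Step = Callable[[List[dict]], List[dict]]


def drop_nulls(column: str) -> Step:
    """Reusable step factory: drop rows where `column` is missing."""
    def step(rows):
        return [r for r in rows if r.get(column) is not None]
    return step


def add_ratio(name: str, numerator: str, denominator: str) -> Step:
    """Reusable step factory: derive a ratio feature (0.0 when the denominator is 0)."""
    def step(rows):
        return [{**r, name: (r[numerator] / r[denominator]) if r[denominator] else 0.0}
                for r in rows]
    return step


def pipeline(*steps: Step) -> Step:
    """Compose steps left to right into a single callable."""
    def run(rows):
        for step in steps:
            rows = step(rows)
        return rows
    return run


churn_features = pipeline(drop_nulls("income"), add_ratio("spend_ratio", "spend", "income"))
rows = [{"income": 4000.0, "spend": 1000.0}, {"income": None, "spend": 50.0}]
print(churn_features(rows))
```

Because each step only takes and returns rows, the same `drop_nulls` or `add_ratio` step can be reused in other projects' pipelines without modification.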

Benefits of MLOps

MLOps makes your ML system more reliable, scalable, and smarter. Here are some of the most prominent benefits of implementing it:

1. Faster Model Deployment

MLOps provides an approach to automate the ML lifecycle, reducing time from model development to deployment. This enables continuous integration and delivery (CI/CD) for ML systems.

2. Reproducibility

With MLOps, we get models, data, and experiments that can be consistently reproduced using versioning and tracking. This can be extremely critical for debugging, auditing, and compliance. (Shashi et al., 2022)

3. Scalability

MLOps makes it easier to scale ML models across multiple teams, data pipelines, and environments. This supports large-scale deployment, retraining, and monitoring of models.

4. Enhanced Monitoring and Reliability

MLOps continuously tracks model performance, drift, and system health in production. This prevents issues like accuracy drops or biased predictions from going unnoticed.

5. Automated Retraining and Updates

MLOps detects performance drops and triggers automated retraining or model replacement, keeping models fresh and aligned with real-world data.

6. Improved Collaboration

MLOps helps bridge the gaps between data scientists, ML engineers, and operations teams through shared workflows and tools. This reduces handoff friction and promotes seamless teamwork.

Common Challenges for MLOps

MLOps provides a performance-driven framework for deploying and managing machine learning models at scale. Implementing it still comes with its own challenges, largely because of the complex nature of ML systems. Below are some of the most common hurdles in MLOps adoption and operations:

1. Data Quality and Management Issues

Data quality strongly influences MLOps performance: inconsistent, incomplete, or unstructured data compromises model performance. Managing versioned datasets and data pipelines is also often more complex than managing code.

2. Model Drift and Degradation

Over time, models can become less accurate as input data or user behavior changes. They therefore require continuous monitoring and retraining.

3. Integration with CI/CD Pipelines

Fully integrating ML into CI/CD requires conventional CI pipelines to handle additional demands such as training, validation, data handling, and model packaging. (Satvik et al., 2024)

4. Model Deployment Complexity

Serving models in real-time or batch mode requires complex infrastructure decisions. Choosing between cloud, on-premises, edge, or hybrid deployment adds another challenge for MLOps.

5. Monitoring and Observability

Monitoring performance metrics, data drift, and system anomalies is essential, and it is more complex for ML than for traditional software. A lack of visibility can lead to unnoticed failures or bias in production. (Janis et al., 2020)

6. Compliance and Governance

Another major challenge is keeping models compliant with the transparency, fairness, and auditability standards set by the relevant authorities. Managing lineage, explainability, and data privacy can also be difficult without proper tools.

7. Cross-Team Collaboration

MLOps involves data scientists, ML engineers, software developers, and DevOps teams. Misaligned goals or undefined roles can create communication gaps and delays, disrupting the performance of the ML system.

8. Tooling Overload and Fragmentation

A wide variety of tools exist for every stage (training, tracking, serving, monitoring), often without seamless integration. Choosing the right stack and avoiding vendor lock-in is a common concern.

Operationalize ML with Confidence

MLOps is no longer a luxury; it is becoming a necessity for organizations that want to operationalize machine learning at scale. It provides a standardized process that supports smooth collaboration across teams and the continuous delivery of trustworthy models. In this way, it turns experimental ML projects into production-ready solutions.

However, to truly master MLOps, teams must balance automation with governance, adopt the right tools strategically, and overcome challenges like data quality, model drift, and integration complexity.

By following industry-proven best practices and understanding both the benefits and potential pitfalls, organizations can unlock the full potential of their ML initiatives. In a rapidly evolving AI-driven world, MLOps is the key to staying agile, compliant, and competitive.

Do you want to realize the benefits of MLOps in your business solution? Discuss the possibilities with our experts at Centrox AI, and take your first step toward smarter business operations.

Muhammad Harris

Muhammad Harris, CTO of Centrox AI, is a visionary leader in AI and ML with 25+ impactful solutions across health, finance, computer vision, and more. Committed to ethical and safe AI, he drives innovation by optimizing technologies for quality.
