Gen AI in Internal Audits: When Generative Models Learn Enterprise Risk

Learn how GenAI improves internal audit, its workflow, top models, benefits, and challenges, to boost accuracy, speed, and audit efficiency.

10/6/2025

artificial intelligence

10 mins

Performing internal audits, especially in large organizations, is a complex and critical task. Missing any important aspect can compromise the whole audit effort and lead to faulty or inaccurate reporting. Even a small oversight can cost a business credibility and market standing. Generative AI in internal auditing can act as a single tool that assists with many auditing tasks, identifying gaps and suggesting the right direction.

Audits matter to businesses of every scale, because they play a key role in financial, operational, and risk planning. A faulty audit results in weak planning, affecting overall workflows and growth. Traditional audit practices not only come with loopholes, but are also inefficient and consume a lot of resources. A generative AI-powered auditor can perform these duties more objectively and help ensure that reports meet requirements accurately.

In this blog, we help you understand whether generative AI is worth implementing in internal audits, how it can be done, which models can best support it, and what benefits and challenges to expect. This discussion will help you plan and execute a Gen AI solution for your internal audit workflows.

How is the Traditional Auditing System Inefficient?

Traditional internal auditing systems have been in use for a long time and are still in practice. However, they sometimes produce faults and errors that cause serious damage, both financially and in terms of credibility. These loopholes not only compromise productivity and can cost millions of dollars, but also delay identifying the root cause of inefficiency. Some of the major issues businesses face with traditional auditing are listed below:

1. Human Bias

Conventional auditing practices rely heavily on human judgment, which can carry personal biases rooted in relationships, departmental pressure, or internal politics. This can result in overlooking serious issues, favoring specific teams, or making subjective decisions rather than logical, fair assessments.

2. Limited Data Coverage

Traditional auditors usually assess samples of data instead of the entire dataset because of time and resource limitations. This practice can miss patterns of irregular transactions or fraud, leading to incomplete or inaccurate conclusions.

3. Manual Processes

Manual auditing requires auditors to repeat tasks like document checks, paperwork, and physical verification. This makes the whole audit cycle slow and inefficient, delaying reporting and ultimately the response to emerging issues.

4. High Error Risk

Since manual work depends entirely on human perception, interpretation, and execution, the slightest mishandling or misunderstanding can compromise the accuracy and credibility of the entire report. And because there is no real-time monitoring to catch and correct misreporting, time and effort are wasted on misrepresented information.

Why do Businesses Need Generative AI in Internal Audits?

Generative AI can transform the internal audit process by offering continuous, reduced-bias, full-data analysis, enabling real-time anomaly detection and automated reporting. It scales across systems and adapts to evolving processes, uncovering patterns that humans often miss or are unable to identify. As Kai-Fu Lee says:

“AI will do the analytical thinking, while humans will wrap that analysis in warmth and compassion.”

With these capabilities, generative AI can power tools that enable intelligent internal audit in real time. Such tools could act as a self-improving assurance engine, driving improved efficiency, accuracy, and proactive compliance.

Gen AI in Internal Audits

Generative AI in internal audit uses large language models and advanced pattern identification to automate full-dataset analysis. These Gen AI models can detect anomalies and generate compliance-ready reports. By handling such responsibilities, they allow enterprises, industries, and businesses to run auditing as a step in their daily, weekly, or monthly closing. They provide a self-learning intelligent system, enabling predictive risk management, real-time assurance, and more objective, reduced-bias decision-making.

Which Gen AI models can perform an internal audit?

Recently introduced Gen AI models have shown advanced abilities that can assist audit workflows. These models offer improved accuracy, anomaly detection, and intelligent adaptation, which helps the deployed system deliver credible reports. Below, we list a few Gen AI models and their specialized use cases, so that you can judge which model is more suitable for your business's auditing workflows:

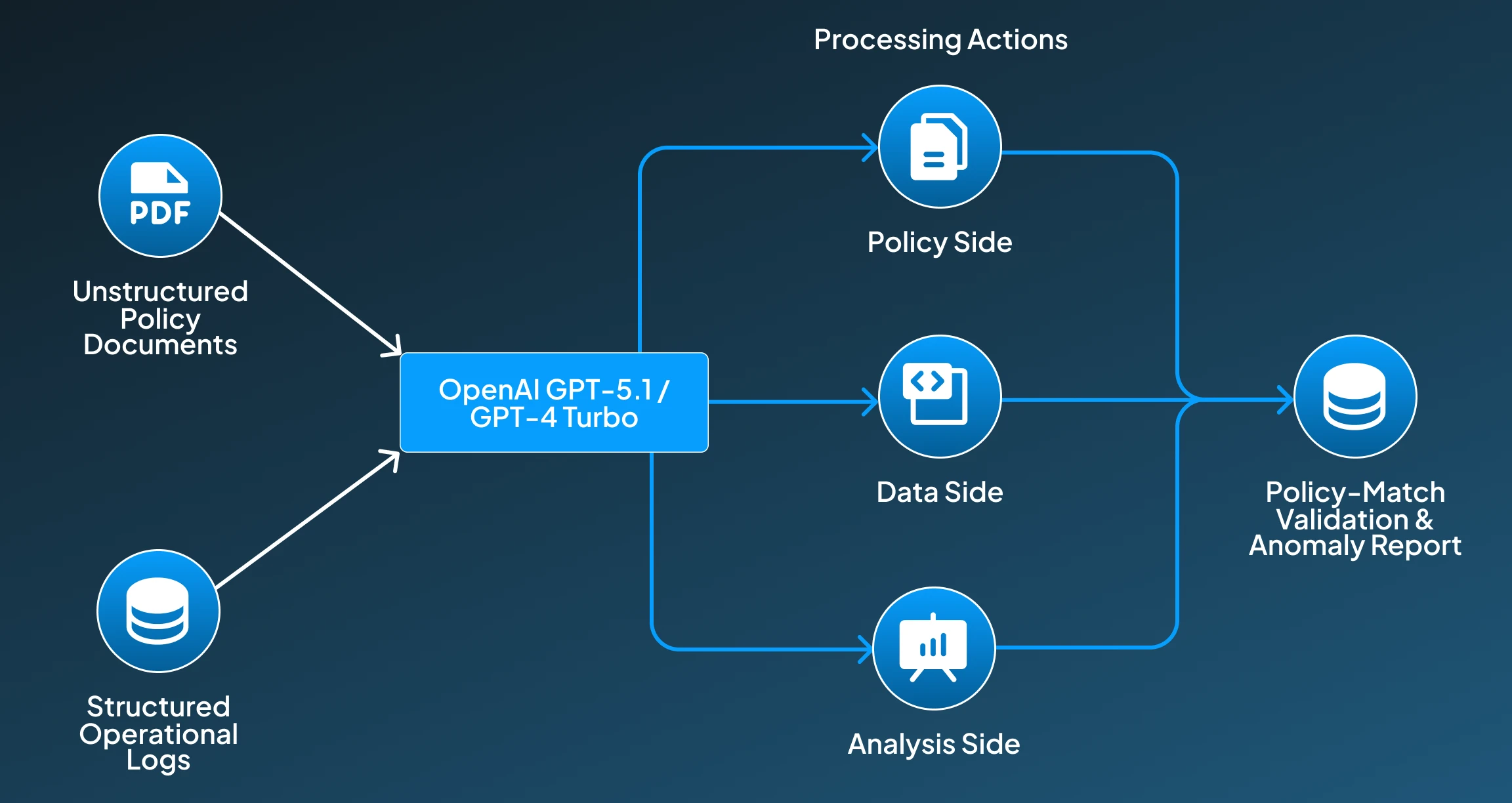

1. OpenAI GPT-5.1 / GPT-4 Turbo

- How it works: OpenAI’s GPT-5.1 and GPT-4 Turbo have shown reliable performance on multi-step reasoning tasks, which matters for internal audit responsibilities. They combine transformer-based autoregressive reasoning, extended context windows, and function calling to parse policy PDFs and extract their meaning. In an audit pipeline, the model converts rules into structured JSON logic and queries operational logs via tool integration. Delta analysis using embedding similarity plus reasoning chains then validates whether a process matches the policy.

- Benefit: GPT-5.1 / GPT-4 Turbo offers multi-step reasoning and strong semantic grounding, which enables precise alignment with standards and policies.

- Application: GPT-5.1 / GPT-4 Turbo can perform well at policy-to-process compliance automation in auditing workflows. Its reasoning abilities can improve anomaly identification and reduce mishandling.
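To make the "rules into structured JSON logic" step more concrete, here is a minimal sketch of what happens after a model has emitted a rule. The rule schema, the field names, and the `find_violations` helper are all assumptions made for illustration; they are not an OpenAI format, and no model call is made here.

```python
import json

# Hypothetical rule, shaped the way a model might be asked to emit it
# after reading a policy document. The schema is an assumption.
policy_rule_json = """
{
  "rule_id": "EXP-001",
  "description": "Expenses above 5000 require manager approval",
  "field": "amount",
  "threshold": 5000,
  "required_approver_role": "manager"
}
"""

def find_violations(rule, log_entries):
    """Return ids of entries over the threshold that lack the required approval."""
    violations = []
    for entry in log_entries:
        over_threshold = entry[rule["field"]] > rule["threshold"]
        approved = entry.get("approver_role") == rule["required_approver_role"]
        if over_threshold and not approved:
            violations.append(entry["id"])
    return violations

rule = json.loads(policy_rule_json)

# Illustrative operational log, not real data.
expense_log = [
    {"id": "E1", "amount": 7200, "approver_role": "manager"},
    {"id": "E2", "amount": 9100, "approver_role": None},
    {"id": "E3", "amount": 300, "approver_role": None},
]

violations = find_violations(rule, expense_log)
print(violations)
```

Once the rule is plain data, the check itself is deterministic code, so the model's job is limited to extracting the rule and explaining the result.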

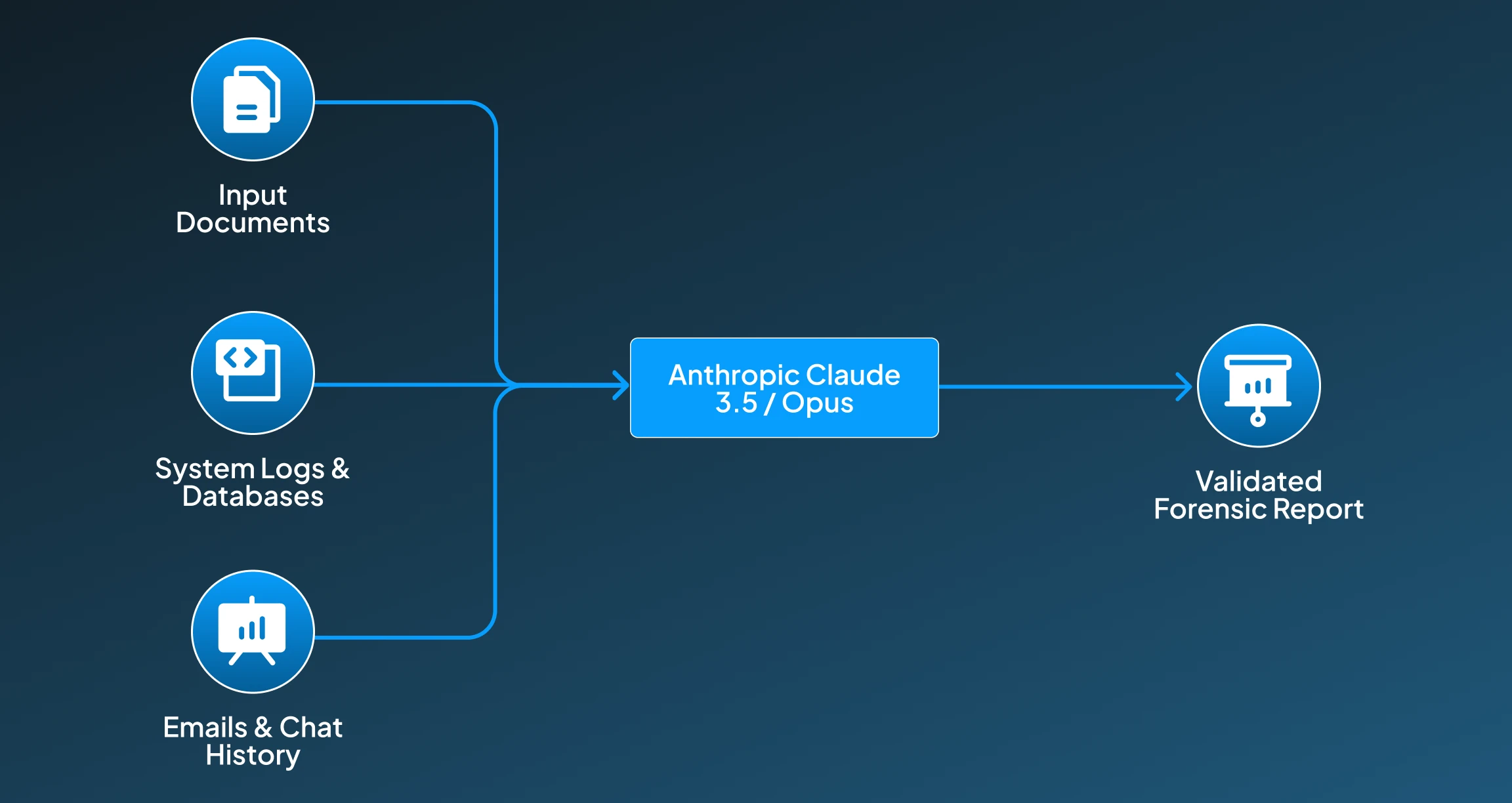

2. Anthropic Claude 3.5 / Opus

- How it works: Anthropic Claude 3.5 is another Generative AI model that can help with internal auditing tasks. It is trained with Constitutional AI and offers a long context window (about 200K tokens), which lets it take large audit trails into a single prompt. With that context, it can reconstruct the document graph across multiple evidence sources and use hierarchical summarization to generate structured narratives, validating claims by mapping them to citations in the source material.

- Benefit: Claude can maintain coherence over massive evidence sets, which makes it well suited to audit forensics.

- Application: Claude can perform well at regulator-ready audit report generation, since it reads through entire audit trails and produces contextually accurate, validated summaries.
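The idea of hierarchical summarization with citation mapping can be sketched without any model at all. In the sketch below, `summarize` is a stand-in that simply keeps the first sentence; in a real pipeline it would be a model call. The document ids and texts are invented for illustration.

```python
def summarize(text):
    """Placeholder for a model call: keeps only the first sentence."""
    return text.split(". ")[0].rstrip(".") + "."

def hierarchical_summary(documents, group_size=2):
    """Summarize each document, then merge summaries in groups,
    carrying the source ids forward so every statement stays citable."""
    # Level 1: one summary per document, tagged with its source id.
    leaves = [
        {"sources": [doc_id], "text": summarize(body)}
        for doc_id, body in documents.items()
    ]
    # Level 2: merge adjacent summaries and union their citations.
    merged = []
    for i in range(0, len(leaves), group_size):
        group = leaves[i:i + group_size]
        merged.append({
            "sources": [s for item in group for s in item["sources"]],
            "text": " ".join(item["text"] for item in group),
        })
    return merged

# Illustrative evidence set, not real audit data.
documents = {
    "DOC-1": "Access review was completed in March. Two accounts were flagged.",
    "DOC-2": "Flagged accounts were disabled on 4 April. No further action was logged.",
    "DOC-3": "Quarterly sign-off is missing for the finance share.",
}

report = hierarchical_summary(documents)
for section in report:
    print(section["sources"], section["text"])
```

The point of the structure is that each merged section keeps the list of documents it was built from, which is what lets a reviewer trace a sentence in the report back to its evidence.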

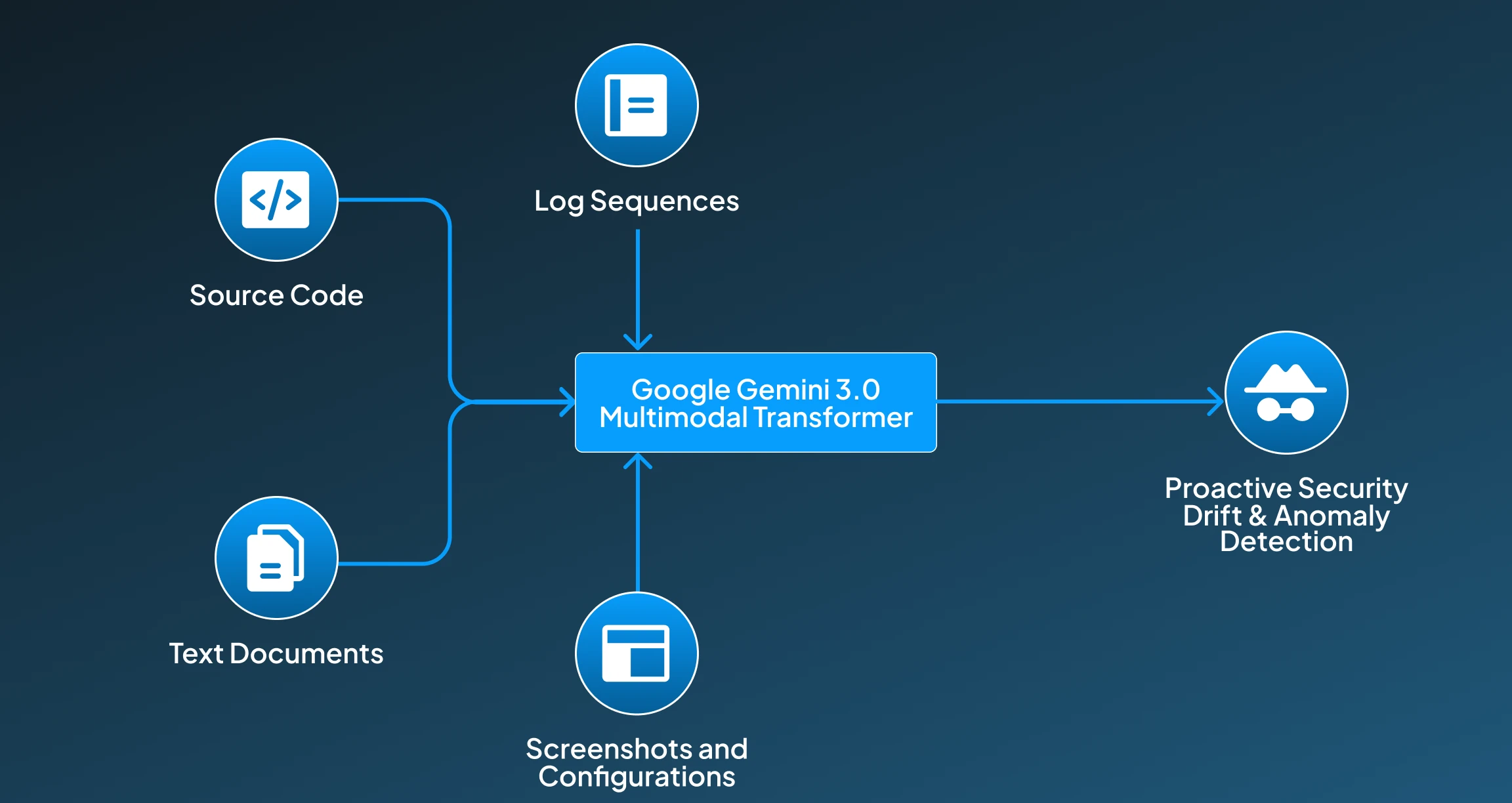

3. Google Gemini 3.0

- How it works: Google Gemini 3.0 is a multimodal transformer with a joint embedding space for text, code, logs, and screenshots. It parses log sequences using temporal pattern modeling and supports static/dynamic analysis of code through its code-understanding capabilities. Through vector-space anomaly detection, it identifies security drift, and it processes configs and screenshots using vision-language fusion layers.

- Benefit: Gemini 3.0 can manage cross-modal data pipelines, which are critical for IT and cloud security audits, because it can run analysis and spot anomalies across them.

- Application: Gemini 3.0 can be deployed for automated cybersecurity and infrastructure audits, where it can proactively detect drift and process visual evidence using vision-language models.
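Security drift is easier to reason about with a small example. The sketch below compares an approved baseline configuration with the current one and reports every key that changed. It is a plain dictionary diff, not the model's internal method, and the setting names are hypothetical.

```python
def detect_drift(baseline, current):
    """Return {setting: (baseline_value, current_value)} for every setting
    that was changed, added, or removed relative to the baseline."""
    drift = {}
    for key in sorted(set(baseline) | set(current)):
        before = baseline.get(key, "<missing>")
        after = current.get(key, "<missing>")
        if before != after:
            drift[key] = (before, after)
    return drift

# Hypothetical settings, for illustration only.
baseline = {
    "storage_public_access": "blocked",
    "mfa_required": True,
    "tls_min_version": "1.2",
}
current = {
    "storage_public_access": "allowed",
    "mfa_required": True,
    "tls_min_version": "1.2",
    "debug_port": 9229,
}

drift = detect_drift(baseline, current)
for setting, (before, after) in drift.items():
    print(f"{setting}: {before} -> {after}")
```

A diff like this gives the model, or a human reviewer, a short and verifiable list of changes to explain, instead of two full configuration files.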

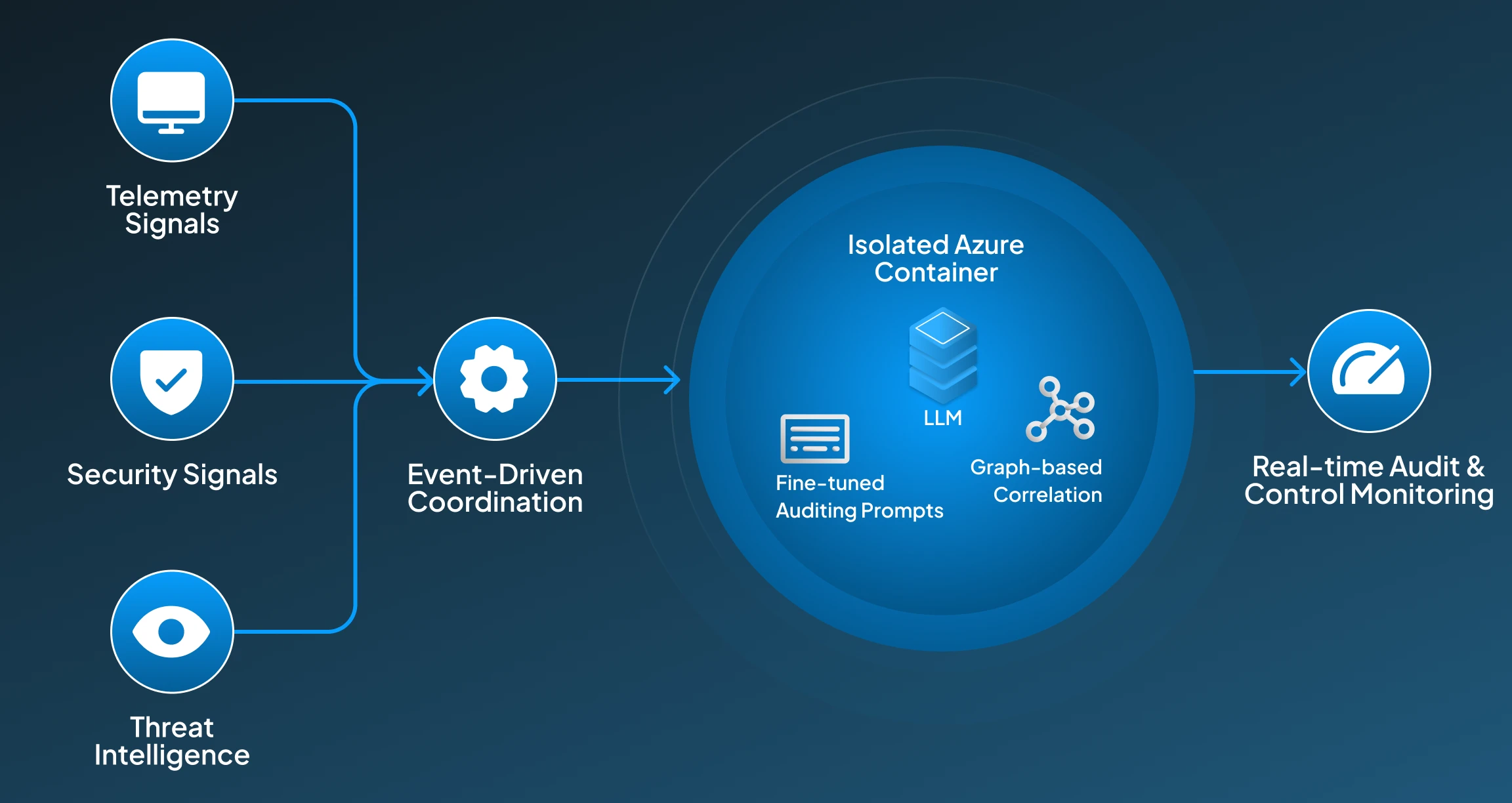

4. Microsoft Azure OpenAI (Enterprise-Tuned LLMs)

- How it works: Microsoft Azure OpenAI combines LLM inference with Azure-native telemetry. It processes real-time input signals from Azure Monitor, Defender, and Sentinel, then uses event-driven coordination to inspect every new transaction. Model predictions run inside isolated Azure containers for security. It also applies fine-tuned auditing prompts via Azure Prompt Flow and uses graph-based correlation of actors, resources, and events.

- Benefit: By integrating Microsoft Azure OpenAI, businesses and enterprises get native access to their logs, enabling precise, real-time internal auditing for the workflows that need it.

- Application: Microsoft Azure OpenAI can be very efficient for audit workflows, especially continuous controls monitoring, because it can interpret Azure input signals and make predictions that keep controls secure and monitored.
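Correlating actors, resources, and events can be illustrated with a classic control check: segregation of duties. The sketch below links each actor to the resources they touched and flags anyone who both created and approved the same item. The event shape is a simplified assumption and does not follow the actual Azure Monitor or Sentinel schemas.

```python
from collections import defaultdict

def segregation_conflicts(events):
    """Return sorted (actor, resource) pairs where one actor both
    created and approved the same resource."""
    actions = defaultdict(set)  # (actor, resource) -> set of actions seen
    for event in events:
        actions[(event["actor"], event["resource"])].add(event["action"])
    return sorted(
        pair for pair, seen in actions.items()
        if {"create", "approve"} <= seen
    )

# Illustrative event stream, not real telemetry.
events = [
    {"actor": "alice", "resource": "PO-17", "action": "create"},
    {"actor": "alice", "resource": "PO-17", "action": "approve"},
    {"actor": "bob", "resource": "PO-18", "action": "create"},
    {"actor": "carol", "resource": "PO-18", "action": "approve"},
]

conflicts = segregation_conflicts(events)
print(conflicts)
```

In an event-driven setup, a check like this would run each time a new event arrives, so a conflict is surfaced when it happens rather than at period end.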

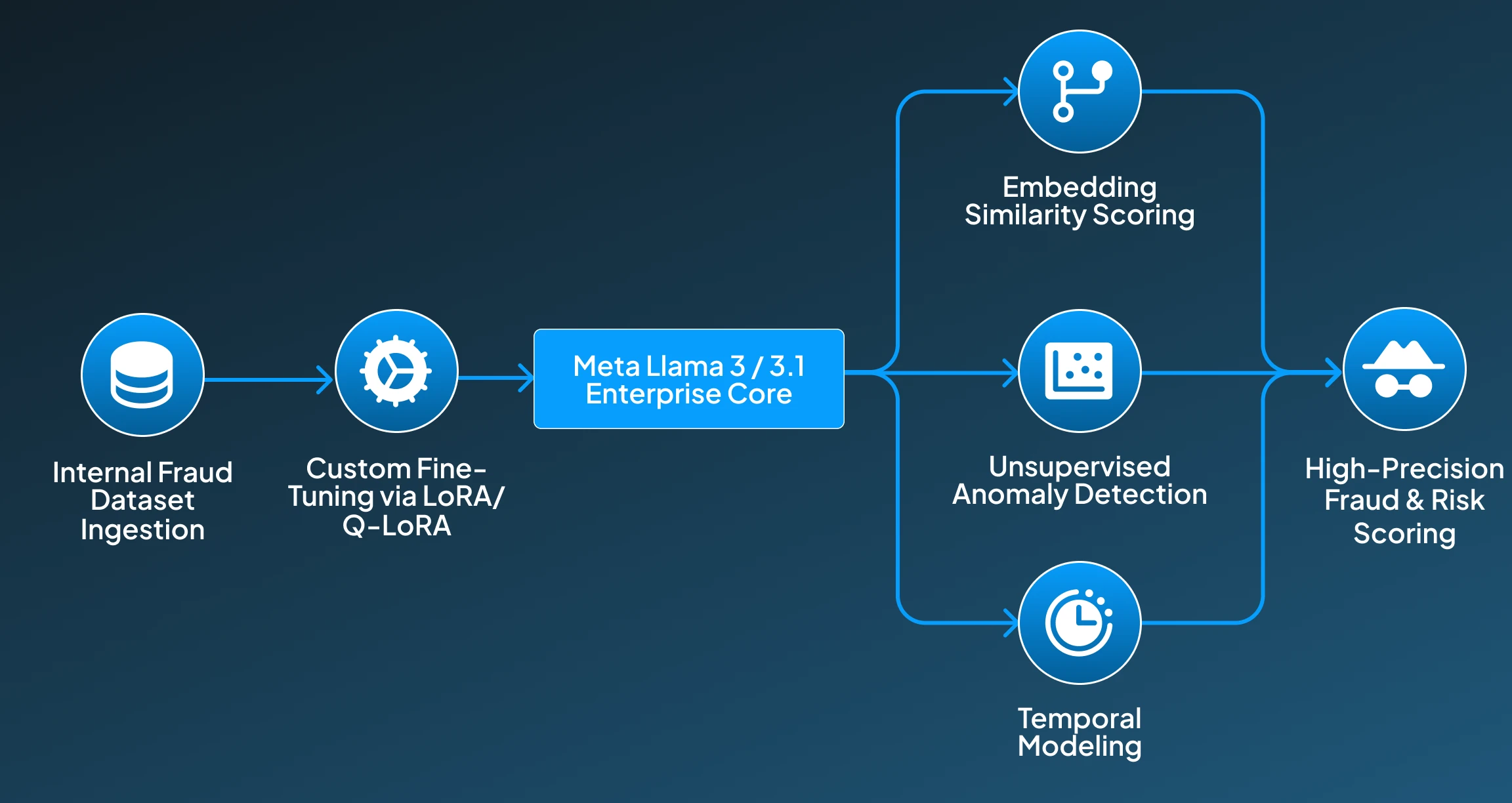

5. Meta Llama 3 / Llama 3.1 Enterprise

- How it works: Meta Llama 3 / Llama 3.1 is another Gen AI model that can drive internal audit applications. Its open-weight architecture allows deep customization: fine-tuned on an internal fraud dataset using LoRA/QLoRA, it learns fraud signatures through embedding similarity scoring. It recognizes outliers through unsupervised anomaly detection heads and then applies temporal modeling to identify behavioral drift. Additionally, it uses internal embedding vectors to support high-precision fraud scoring.

- Benefit: Meta Llama 3 / Llama 3.1 supports custom fine-tuning, which unlocks audit specialization that is hard to achieve with closed models, and ultimately helps recognize behavioral inconsistencies and suspicious activity.

- Application: For use cases like fraud pattern detection and risk scoring, Meta Llama 3 / Llama 3.1 can be very effective, because it can learn fraudulent signatures and score similarity against them, and thus help reduce fraud in business workflows.
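Embedding similarity scoring, mentioned above, boils down to comparing a transaction's vector with vectors of known fraud patterns. The sketch below uses cosine similarity on tiny hand-written vectors. Real embeddings would come from a model and have hundreds of dimensions; the three-dimensional vectors and the `fraud_score` helper are assumptions for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def fraud_score(vector, signatures):
    """Score a transaction by its closest match among known fraud signatures."""
    return max(cosine(vector, signature) for signature in signatures)

# Toy vectors standing in for model-generated embeddings.
known_fraud_signatures = [[1.0, 0.0, 1.0]]
transaction_a = [2.0, 0.0, 2.0]  # same direction as the signature
transaction_b = [0.0, 1.0, 0.0]  # orthogonal to the signature

score_a = fraud_score(transaction_a, known_fraud_signatures)
score_b = fraud_score(transaction_b, known_fraud_signatures)
print(round(score_a, 3), round(score_b, 3))
```

A score near 1 means the transaction looks like a known pattern and deserves review; where to set the alert threshold is a business decision that this sketch does not address.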

Challenges of Implementing Gen AI for Internal Audits

Despite all these advancements and conveniences, integrating Gen AI into the internal audit workflow still poses a few challenges. These challenges can result in compromised internal audit output, significantly impacting the enterprise's workflows and eventually its credibility. Below are a few challenges that businesses should address before implementing a Gen AI solution for their auditing duties:

1. Data Fragmentation Across Legacy Systems

Gen AI models need unified data, but audit data is spread across different ERPs (SAP, Oracle), cloud logs (AWS/Azure), HRIS, CRM, and workflow tools. As a result, data schemas are inconsistent and logs have different structures, while real-time insights require ETL pipelines and vector databases. Even a single piece of missing metadata can break audit traceability.
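A small normalization step shows what "unifying" fragmented data means in practice. The sketch below maps records from two source systems onto one schema, keeps the source system as metadata, and fails loudly when a field is missing. The field mappings are illustrative stand-ins and should not be read as the exact schemas of any ERP.

```python
# Illustrative per-source field mappings (assumed, not authoritative).
FIELD_MAPS = {
    "sap": {"BELNR": "doc_id", "WRBTR": "amount", "BUDAT": "posted_on"},
    "oracle": {"INVOICE_NUM": "doc_id", "INVOICE_AMOUNT": "amount", "GL_DATE": "posted_on"},
}

def normalize(record, source):
    """Map a source-specific record onto the unified schema.
    Raises ValueError if a mapped field is absent, so gaps in
    traceability are caught at load time."""
    mapping = FIELD_MAPS[source]
    missing = [field for field in mapping if field not in record]
    if missing:
        raise ValueError(f"{source} record is missing fields: {missing}")
    unified = {target: record[field] for field, target in mapping.items()}
    unified["source_system"] = source  # metadata kept for audit traceability
    return unified

sap_row = {"BELNR": "1900001", "WRBTR": 250.0, "BUDAT": "2025-01-15"}
oracle_row = {"INVOICE_NUM": "INV-88", "INVOICE_AMOUNT": 99.5, "GL_DATE": "2025-01-16"}

unified_rows = [normalize(sap_row, "sap"), normalize(oracle_row, "oracle")]
print(unified_rows)
```

Rejecting incomplete records up front is a deliberate choice here: a silent default would hide exactly the missing metadata that breaks traceability later.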

2. Model Explainability for Audit-Grade Transparency

One of the major concerns with these popular Gen AI models is hallucination: generating plausible but incorrect audit findings. Because LLMs are probabilistic, not deterministic, a lack of grounding can result in false conclusions that cannot be traced back to evidence. They therefore need RAG pipelines with document-level citations and audit-specific validation layers to reduce fabricated controls or mismatched evidence.
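Document-level citations become useful once they can be checked. The sketch below pairs a toy retriever (simple keyword overlap, standing in for a vector search) with a validator that rejects any citation that was not actually retrieved. The corpus, document ids, and function names are hypothetical.

```python
def retrieve(query, corpus, top_k=2):
    """Rank documents by the number of words shared with the query.
    A real pipeline would use embeddings; keyword overlap keeps the sketch small."""
    query_words = set(query.lower().split())
    scored = []
    for doc_id, text in corpus.items():
        overlap = len(query_words & set(text.lower().split()))
        if overlap:
            scored.append((overlap, doc_id))
    # Highest overlap first; ties broken by document id for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]

def unsupported_citations(finding, retrieved_ids):
    """Return citations not backed by retrieved documents.
    A finding with no citations at all is treated as unsupported."""
    cited = finding.get("citations", [])
    if not cited:
        return ["<no citation>"]
    return [doc_id for doc_id in cited if doc_id not in retrieved_ids]

# Illustrative corpus, not real policy text.
corpus = {
    "POL-7": "vendor payments above 10000 require dual approval",
    "POL-9": "travel expenses require receipts",
    "LOG-3": "vendor payment 15000 approved by single approver",
}

retrieved = retrieve("vendor payment approval", corpus)
finding = {"text": "Payment bypassed dual approval.", "citations": ["POL-7", "POL-12"]}
problems = unsupported_citations(finding, retrieved)
print(retrieved, problems)
```

Here `POL-12` is flagged because it was never retrieved, which is the kind of fabricated reference an audit-specific validation layer is meant to catch.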

3. Secure Access to Sensitive Financial Data

Internal audits involve privileged datasets. Ensuring zero leakage requires secure model hosting, role-based access control, encryption-in-use, and isolated inference environments, far more complex than standard AI deployments.
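Role-based access control can be applied before any data reaches a model. The sketch below masks every field a role is not allowed to see, and masks everything for unknown roles. The roles and field names are assumptions for illustration; a production system would enforce this in the data platform rather than in application code alone.

```python
# Hypothetical role-to-field permissions.
ROLE_FIELDS = {
    "auditor": {"txn_id", "amount", "vendor", "account_number"},
    "analyst": {"txn_id", "amount", "vendor"},
}

def redact_for_role(record, role):
    """Return a copy of the record with fields outside the role's
    permissions masked. Unknown roles see nothing."""
    allowed = ROLE_FIELDS.get(role, set())
    return {
        key: (value if key in allowed else "***")
        for key, value in record.items()
    }

record = {
    "txn_id": "T-1",
    "amount": 420.0,
    "vendor": "Acme",
    "account_number": "12345678",
}

analyst_view = redact_for_role(record, "analyst")
unknown_view = redact_for_role(record, "guest")
print(analyst_view)
```

Denying by default for unrecognized roles keeps a misconfigured caller from seeing privileged data.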

4. Integration with Enterprise Risk & Compliance Systems

Gen AI must plug into GRC platforms, workflow engines, and custom enterprise logic. This demands API orchestration, agent frameworks, retrieval pipelines, and event-driven architectures to ensure AI outputs trigger compliant audit workflows.

5. Hallucination Control for Regulatory Accuracy

Even top models may generate confident but incorrect conclusions. For audit use, hallucinations are unacceptable. Controlling them requires retrieval-augmented generation (RAG), validation agents, rule-based guardrails, and deterministic verification layers that check outputs against source data.
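A deterministic verification layer can be as simple as recomputing any figure the model reports. The sketch below assumes the model returns findings in a structured form (a metric name and a value) and accepts a finding only if the number matches what the source data gives. The finding format and metric names are hypothetical.

```python
def verify_finding(finding, transactions, tolerance=0.01):
    """Recompute the claimed metric from source data.
    Returns (is_verified, recomputed_value); unknown metrics are rejected."""
    recomputed = {
        "total_amount": sum(t["amount"] for t in transactions),
        "transaction_count": len(transactions),
    }
    metric = finding["metric"]
    if metric not in recomputed:
        return False, None
    expected = recomputed[metric]
    return abs(finding["value"] - expected) <= tolerance, expected

# Illustrative source data.
transactions = [{"amount": 100.0}, {"amount": 250.5}, {"amount": 49.5}]

good = verify_finding({"metric": "total_amount", "value": 400.0}, transactions)
bad = verify_finding({"metric": "total_amount", "value": 410.0}, transactions)
print(good, bad)
```

Anything that fails the check would be routed to a human reviewer rather than written into the report.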

Redefining Internal Audits with Generative AI

Generative AI is rapidly reshaping internal audits into intelligent, continuous, and increasingly autonomous systems that elevate accuracy and reduce bias. Its ability to interpret unstructured data, surface hidden risks, and generate compliant outputs makes it a foundational pillar for future-ready enterprises.

But adoption demands technical maturity: resilient data pipelines, interpretability frameworks, and strong hallucination controls. The real breakthrough comes from treating audits not as static checklists but as evolving, self-correcting systems.

Leaders who think in terms of end-to-end automation, scalable intelligence, and disciplined deployment will unlock a new audit paradigm, one where transparency, trust, and machine reasoning operate at enterprise scale.

What if your internal audit could anticipate risks before they happen? Would your organization be ready to trust AI with this power? Discuss it with our expert engineers at Centrox AI and explore the future of intelligent auditing.

Muhammad Harris

Muhammad Harris, CTO of Centrox AI, is a visionary leader in AI and ML with 25+ impactful solutions across health, finance, computer vision, and more. Committed to ethical and safe AI, he drives innovation by optimizing technologies for quality.
